A Discussion of Blockchain Interoperability Through the Lens of the Web (1)

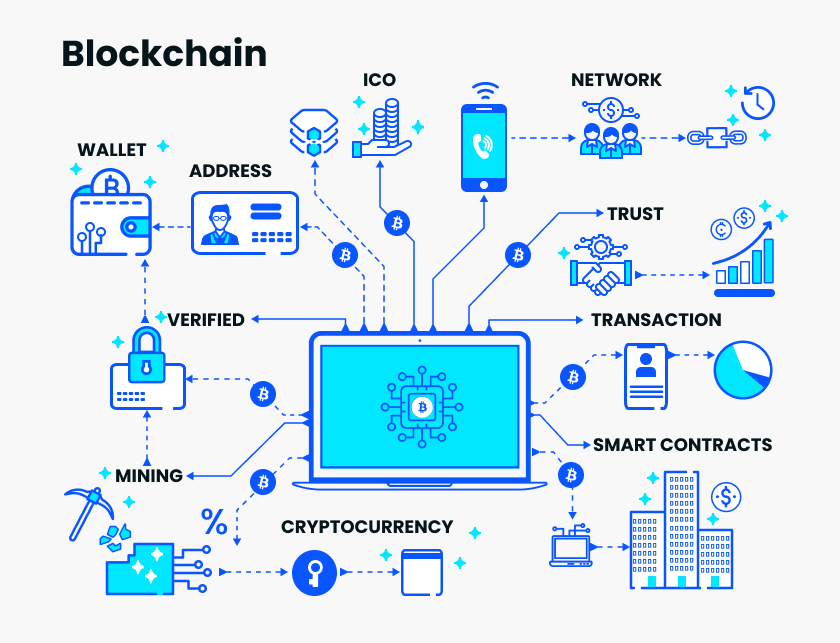

This article is the first in a series exploring the current state and future of interoperability in the blockchain ecosystem. Here, we define "interoperability" as the ability of blockchains to exchange data across platforms, including off-chain data and transactions, without the help of third parties.

By tracing the progress of the Web2 architecture from early theory to large-scale adoption, this series argues that blockchain protocol interoperability is a basic requirement for realizing the full potential of the technology. The series shows how the ecosystem is currently at risk of "Balkanization": under competitive and commercial pressure, it could become a collection of disconnected systems that run in parallel but remain isolated from one another. For the ecosystem to prioritize interoperability, it must establish a secure, fully decentralized, trustless settlement layer to which operating blockchains can anchor their transactions. Given the current state of blockchain systems, Ethereum's architecture closely resembles what such a universal root chain requires.

Risk of “Balkanization”

The problems with today's Web2 architecture, especially siloed user data, vulnerability, and mismanagement, can be traced back to the industry's departure from early Internet values, which originally treated interoperability as the key to a sustainable and fair networked world. At the current rate, the blockchain ecosystem faces a similar risk of "Balkanization", with protocol interoperability pushed aside as companies race to showcase their blockchain use cases faster than their competitors.

The risk is that pressure for mainstream adoption is likely to arrive before the Web3 infrastructure is interoperable and secure enough to realize the full vision of its original architects. Web3 may end up looking very much like today's Web2 in terms of financial exclusion, information silos, and data insecurity, only this time underpinned by a series of blockchains that are not interoperable at the protocol level.

Lessons from the early Internet

Developed as a publicly funded academic research project, the Internet began in the 1960s with the goal of enhancing people's ability to create, transmit, and share information. Early online information took the form of basic text and images, connected and shared through a network of hyperlinks. Since then, "information" on the Web has evolved to represent asset ownership (especially money), user profiles, and identity (especially scattered digital fragments of personal identity).

However broadly digitally represented information is defined, the theory of online information management can be traced back to early network theory. In building the next stage in the evolution of information dissemination, early Internet pioneers sought to ensure that information on the network could flow in a way that mimics the natural patterns of human behavior. Tim Berners-Lee, the inventor of the World Wide Web, framed his mission as creating a network structure that made a humanistic transmission of information possible, in contrast to the hierarchical structure of a company. Until then, the corporate hierarchy had been one of the dominant structures through which people produced and managed large amounts of information.

A company's rigid, top-down structure tries to govern the flow of information in a defined, patterned, and traceable way, whereas the reality of how people communicate and share is far messier and less predictable. To emulate natural peer-to-peer social exchange of information, Berners-Lee recommended simplicity in the Web's architecture. Given only a basic framework for the digital system, information could grow and evolve in the most natural way, which meant the framework had to be extensible. Once the "storage method … sets its own restrictions on the way things are transmitted", the information suffers. Berners-Lee firmly believed that the network should mimic natural structures. He described the growth of the network as "cells formed in the global brain" and hoped that one day it could "reflect" the way people interact, socialize, and live their daily lives.

Achieving scalable, user-friendly digital information depends on a key concept: the "end-to-end effect". The end-to-end effect means that Internet users (that is, those at either end of an information exchange) experience information in a consistent manner. People need to be able to adopt repeatable behaviors that let them retrieve, process, and submit information in much the same way each time they interact with the Web. In other words, the technology that delivers information to consumers must do so consistently, time after time, across regions and across content types.

The end-to-end effect can be achieved in two ways:

1) Third parties can establish themselves as intermediaries, providing services that present information in a consistent form as it travels from point A to point B. These companies and their engineers would "need to learn the art of designing systems" to negotiate and control the passage of information across the digital boundaries that separate incompatible protocols.

2) Alternatively, all of the protocols through which information might need to pass can be made interoperable, so that data moves seamlessly from one user to another without barriers that require additional negotiation to cross. Native protocol interoperability would create the end-to-end effect automatically, rather than relying on exploitative third parties to provide that consistency behind the scenes.

Of the two approaches, interoperability was the one preferred by those at the forefront of early Web development. Berners-Lee often described this goal as "universality". He believed that the future of the Web would include a range of different protocols, all existing within the same macrocosm so that they remained compatible. Berners-Lee urged technologists to treat universal interoperability as a more important goal than "fancy graphics technology and complex additional facilities." In his view, focusing on protocol design mattered more than yielding to the growing demand for profit and commercialization, which called for exactly those fancy graphics and additional facilities.

As commercialization accelerated and the public origins of the Internet faded, a new set of incentives was introduced into what had been a predominantly academic field. As private companies competed to outdo their rivals, a series of siloed standards began to emerge, threatening to fragment the network ecosystem beyond repair. Building separate systems runs counter to long-term economic optimization. In a foundational 1964 paper on networking, Paul Baran pointed out: "In the field of communications, as in the transportation sector, sharing resources for many users is more economical than building their own systems." The World Wide Web Consortium (W3C) was established to set industry-wide standards and to ensure that interoperability remained a core priority of Web development. The W3C's goal "to realize the full potential of the web" rests on the belief that this potential can only be reached through interoperability, that is, through standardization across protocols.

Information incentive

Content management on the Web provides a classic example of early thinking about interoperability and standardization. The problem of content management, specifically questions of value, ownership, and copyright protection, was often invoked to highlight the potential shortcomings of the Internet and to encourage developers, regulators, and technologists to start discussing these issues as early as possible.

The phrase "information wants to be free" is usually traced back to remarks by Stewart Brand at a conference in 1984. The idea is that information should spread openly and organically in digital form, just as it has spread among members of our species throughout human history.

The network allowed for near-infinite dissemination of information, giving it the ultimate venue to express its desire for freedom, beyond the limits of the analog communication methods that came before. The Internet provided an amplified stage for the spread of information, but at the expense of the clear definitions of ownership, scarcity, and value to which global markets had grown accustomed. The network allowed information to be free, but it also exposed information to economic exploitation. (The same was true of earlier advances in information technology, such as the printing revolution of the 15th century and radio in the early 20th century, though on a much smaller scale.)

This consequence relates to the second, less frequently cited part of Brand's famous quote: "Information wants to be expensive." In retrospect, Brand's view might be more accurately restated as "information wants to be valued for what it is worth," which means that sometimes, though not always, information is expensive.

The new modes and capabilities of information circulation that the network enabled made it impossible to value digital information correctly. For example, the origin of a piece of content could not be tracked accurately enough to compensate its original creator appropriately. Because no standard protocol for content ownership existed, third parties were able to step in and provide standardization, or more accurately the illusion of standardization, by facilitating the end-to-end effect that was considered the key to large-scale use of the Internet. They did this for all types of information, not just visual and written content. The illusion of back-end protocol interoperability was reinforced by the increasingly seamless experience presented to users at the front end.

When third parties stepped in and became central to standardizing the transmission of information, they began to determine the "value" of that information. This early economic dynamic encouraged the artificial scarcity of information: it denied information's natural tendency to be free and attached artificially high price tags to different data, rather than letting information be valued for what it was worth. These companies did well by restricting the flow of the information they controlled. They tried to treat information like most other commodities on Earth, where simple supply-and-demand theory holds that scarcity equals value. However, as John Perry Barlow pointed out in his 1994 essay "The Economy of Ideas", "digital technology is separating information from the physical level." By treating information as a tangible product and controlling or restricting its ability to move freely, third parties suppressed the unique quality of information, namely that the more widely known it is, the more valuable it becomes. Barlow argued that "if we assume that value is based on scarcity, just like physical things," the world risks developing technologies, protocols, laws, and economies that run contrary to the true, human nature of information.

"The meaning of (the Internet) is not in network technology, but in the fundamental transformation of human practice," Peter Denning wrote in a reflection on the Internet's first 20 years. In the end, Web2 became popular because the end-to-end effect was successfully implemented, enabling large-scale adoption and giving everyday users the illusion of a single global Internet. While interoperability was a core aspiration of Berners-Lee and other early Internet architects, what mattered most to end consumers (and to the companies seeking to profit from them) was that the Internet scale to everyday use as quickly as possible. Information appeared to travel organically and humanistically; content appeared to be sourced and verified; and data appeared to be widely available and trustworthy. Behind the scenes, however, the same third-party companies (or their descendants) have been the gatekeepers of information transmission on the Internet since its earliest days, with significant consequences.

Early Internet theorists never intended the technology to remain permanently independent of the private sector. In fact, realizing the Internet's potential relied on the assumption that the desire for large-scale use would draw in the private sector to fund faster, more global development. The arrival of the private sector, however, accelerated the eventual Balkanization of the ecosystem.

The emergence of Balkanization

The original vision of the Internet's architects was an open, distributed, and decentralized "network of networks." Funded by billions of dollars in US public research money and originally conceived as an academic project, the Internet spent its first 20 years of development in relative obscurity. Its original funders, most notably ARPA (the Advanced Research Projects Agency, later DARPA) and the National Science Foundation (NSF), did not necessarily expect to profit from the project, so the early Internet expanded slowly and deliberately.

The first instances of networking were practical: mainframe computers at research universities were very expensive, so sharing resources among them led to better research. The government controlled these networks, which meant that all participants were encouraged to share their code in order to secure continued funding, fostering an open-source culture. Protocols emerged in the mid-1970s, and interoperable digital communication standards followed shortly after for a practical reason: machines had to be able to communicate with each other. By 1985, the NSFNET network had connected all major university mainframes, forming the first backbone of the Internet as we know it. In the late 1980s, more and more participants flocked to this backbone, and traffic began to exceed network capacity.

As activity and enthusiasm around the technology grew, network congestion became a major problem. NSFNET prohibited commercial activity, but the ban was still not enough to limit traffic. Instead, it spurred the parallel development of private networks to carry commercial activity.

In response to this trend toward parallel networks and the strain on NSFNET, Stephen Wolff of the NSF proposed privatizing the infrastructure layer. Doing so would bring in private investment to increase network capacity and relieve congestion, allow NSFNET to be integrated with the private networks into a single interoperable system, free the project from government control, and let the Internet become a mass medium.

By 1995, NSFNET had been fully decommissioned and replaced by an ecosystem of private networks. Five companies (UUNET, ANS, SprintLink, BBN, and MCI) emerged to form the new infrastructure layer of the Internet. They had no real competitors, no regulatory oversight, no policies or governance to guide their interactions, and no minimum performance requirements set by any government entity. This completely open and competitive environment, although unprecedented, met with little opposition from early Internet thought leaders, who had always expected the networks to be handed over to private infrastructure providers once there was enough mainstream interest to sustain them. In other words, they expected the incentives to change once the public embraced the technology. The protocol and link layers of the Web had developed in relative obscurity; a market formed only at the network, or infrastructure, level.

These five new major providers connected and integrated local and smaller networks across the United States. In essence, these companies began as intermediaries and became de facto providers, because at some point in transit they oversaw all of the data in the system. By today's standards, this arrangement looks counterintuitively centralized given the priority placed on a distributed, resilient system architecture, and the Internet's architects were aware of this. Because more than one provider was involved, however, privatization advocates believed there would be enough competition to prevent the concentration of infrastructure services. In the years after NSFNET was dismantled, this proved not to be the case. Privatizing the infrastructure layer produced an oligopoly of providers that, by controlling the movement and throughput of information, essentially controlled the data flow of the entire Internet. They could offer one another shortcuts around overall network congestion and give preferential treatment to sites that paid for faster content delivery. The agreements between these providers were entirely opaque, because they were under no obligation to disclose their terms, so smaller provider networks could not compete in the market.

Thus, the effort in the early 1990s to avoid the Balkanization of the Internet ended with a cabal of five infrastructure providers inadvertently gaining control over the entire protocol layer. In a sense, this is a lesson in the importance of native governance protocols and sound regulation when developing healthy markets for new technologies. Good governance can lead to fairer, more open competition and ultimately a more complete market. Retaining some degree of public interest also introduces a feedback loop that acts as a check on the development of a new technology. One shortcoming of the way the private infrastructure layer formed was that too little attention to security carried over from NSFNET. Security had not been a critical concern on NSFNET; technologies were researched and developed without security mechanisms or much attention to security at all, which introduced vulnerabilities that persist today. The almost complete lack of deliberate governance also led to an extreme lack of so-called "net neutrality": network speed was unfairly prioritized for the highest bidder, and overall access to the network became extremely unequal. Ironically, the measures taken to prevent Balkanization produced an all but irreversibly Balkanized infrastructure layer.

The lessons of this concentration of providers in the early 1990s are highly relevant to the current stage of blockchain ecosystem development. Interoperability standards may well emerge at scale simply because functionality demands them. That was true of the Internet's protocol layer, and the same could happen in Web3 once network pressure is strong enough to create economic incentives. But the Web's protocol layer was publicly funded and was therefore free from the expectation of profit for more than two decades. The first wave of blockchains is inherently financial in nature, and financial incentives have been present from the start, right down to the protocol layer. Therefore, although the development of Web2 and Web3 shares common patterns, the risk of Balkanization arises at very different points on their timelines.

Prioritizing interoperability

Although predictions of something like a blockchain have been around for decades, as has the underlying cryptographic theory, blockchain technology in practical use (let alone programmable, usable blockchain technology) is still in its infancy. At such an early stage, risk-taking innovation and competition are very important to the development of the ecosystem. However, today's early blockchain industry faces the same pressures as the Internet industry of the early 1980s and early 1990s. The opportunity blockchain presents is world-changing, and so is the risk.

As this article will discuss, the opportunity of blockchain technology depends on interoperability among all major blockchain projects, and that interoperability should be foundational to how these protocols are developed. Only if all blockchains, whether completely unrelated or in fierce competition with one another, embed compatibility into their basic functionality can the technology's capabilities extend to global use and impact.

Given the rapid growth of crypto, token sales, and token markets over the past two years, blockchain companies are under tremendous pressure to demonstrate the utility, profitability, and commercial viability of the technology. In this sense, the incentives that pushed the Internet to treat interoperability as secondary and to focus on everyday usability are no different today. If anything, our ability to stay connected and receive real-time updates anywhere in the world means that the blockchain ecosystem is under greater pressure to demonstrate its commercial capabilities than the early Internet was at a comparable stage of development. As companies race to prove themselves "better" or "more market-ready" than other existing protocols, they abandon interoperability and focus instead on what Berners-Lee called "fancy graphics technology and complex additional facilities," which appeal more to short-sighted investors and consumers.

The race for immediate functionality is economically effective, but if it continues it may jeopardize the development of the entire blockchain industry. If companies keep ignoring interoperability, each building its own proprietary blockchain and pitching it against a hypothetical market competitor, then within a few years the ecosystem may look very much like the early, non-interoperable days of the Internet. We would be left with a scattered collection of siloed blockchains, each supported by a weak network of nodes and vulnerable to attack, manipulation, and centralization.

It is not too difficult to imagine a non-interoperable future for blockchain technology. All the material and imagery needed to paint that picture exist in early Internet thinking and have been discussed in the first part of this article. As on today's Internet, the most important quality of data in Web3 is the end-to-end effect. Consumers interacting with Web3 must experience seamless interaction regardless of the browser, wallet, or website they use if the technology is to be adopted at scale. To achieve this ultimate goal, information must be allowed to flow in its organic, humanistic manner. It must be allowed to be free. Today, however, a blockchain knows nothing about information that may exist on a different blockchain. Information living on the Bitcoin network is unaware of information living on Ethereum. Information is therefore denied its natural desire and ability to flow freely.

The consequences of siloing information within blockchains come straight from the history books of the Internet. The Internet became centralized at the infrastructure level because of the pressure to scale in order to meet public enthusiasm and large-scale adoption. If the Web3 ecosystem reaches that point before protocol interoperability is sufficiently widespread, the same thing will happen again. Without native blockchain interoperability, third parties will step in to manage the transfer of information from one blockchain to another, extracting value for themselves in the process and recreating the very friction the technology was meant to eliminate. They will have access to and control over this information, and they will have the ability to create artificial scarcity and inflated value. Without interoperability, the vision of a blockchain-powered future Internet that the industry so often evokes amounts to nothing. Without it, we will find that our future global network is almost identical to today's dominant Web2 environment. Everyday consumers will still enjoy smooth and consistent interaction with Web3, but their data will not be secure, their identity will not be whole, and their money will not be their own.

Looking to the future

None of this is to say that the industry has completely forgotten or abandoned the importance of interoperability. Proofs of concept such as BTC Relay, alliances such as the Enterprise Ethereum Alliance, and projects such as Wanchain show that some people still recognize the key value of interoperability. However things develop in the short term, market pressure may well push the blockchain ecosystem toward interoperability. Even so, passive interoperability and active interoperability may still differ in where and how data is captured.

Passive interoperability, i.e., deciding only many years from now, when the market demands it, that interoperability should be a key feature of blockchains, gives third parties an opportunity to step in and facilitate interoperability. They profit from their services, and their access to user data is asymmetric.

Active interoperability, i.e., ensuring that interoperability is coded into protocols at this nascent stage of the ecosystem, on the other hand ensures that data can be transmitted securely and efficiently between blockchains without handing control to an intermediary third party.
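As a rough illustration of what coding interoperability into the protocol can mean in practice, the sketch below shows a Merkle inclusion proof. A destination chain that tracks only a compact commitment to a source chain's transactions can check that a given transaction was included, without asking an intermediary to vouch for it. This is the general idea behind header-relay designs such as BTC Relay, but the code is a simplified assumption for illustration only: it uses single SHA-256 hashing and hypothetical helper names (`merkle_root`, `merkle_proof`, `verify_proof`), not the hashing rules or API of any real chain.

```python
import hashlib


def h(data: bytes) -> bytes:
    """Hash helper (single SHA-256; real chains differ in the details)."""
    return hashlib.sha256(data).digest()


def _pad(level):
    """Duplicate the last node so the level has an even number of nodes."""
    return level + [level[-1]] if len(level) % 2 == 1 else level


def _parents(level):
    """Hash adjacent pairs of an even-length level into the level above."""
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    """Commitment the source chain publishes for a batch of transactions."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _parents(_pad(level))
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes needed to rebuild the root from leaves[index]."""
    proof = []
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _pad(level)
        sibling = index ^ 1  # the neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        level = _parents(level)
        index //= 2
    return proof


def verify_proof(leaf, proof, root):
    """Check run by the destination chain; it needs only the root."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Hypothetical example data: three transactions on the source chain.
txs = [b"tx-a", b"tx-b", b"tx-c"]
root = merkle_root(txs)
proof_for_b = merkle_proof(txs, 1)
print(verify_proof(b"tx-b", proof_for_b, root))
print(verify_proof(b"tx-forged", proof_for_b, root))
```

In this sketch the verifier never sees the full transaction list and never relies on whoever delivered the proof. It trusts only the root it already holds, which is the property that distinguishes protocol-level interoperability from a third-party bridge service.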

There is no doubt that a necessary and healthy balance exists between commercialization and open-source interoperability. Commercialization promotes competition and innovation, motivating developers and entrepreneurs to build the systems that best serve their customers. History shows, however, that this balance is unstable. As pressure mounts on blockchain to deliver on its promises, commercialization will place more and more emphasis on making blockchains market-ready, whatever ideals must be sacrificed in the short term.

Author: Everett Muzzy and Mally Anderson

Compiled by: Sharing Finance Neo
