Who are the winners in the EVM innovation wave?

Author: Siddharth Rao, IOSG Ventures

About Ethereum Virtual Machine (EVM) Performance

Every operation on the Ethereum mainnet costs gas. If we put all the computation required to run an app on-chain, either the app will crash or the user will go bankrupt.

This gave birth to L2s. Optimistic rollups (OPRUs) introduced a sequencer that bundles a batch of transactions and submits them to the mainnet. This not only lets the app inherit Ethereum’s security but also gives users a better experience: transactions are submitted faster and fees are cheaper. Although execution has become cheaper, it still uses the native EVM as the execution layer. Similarly, ZK rollups such as Scroll and Polygon zkEVM use or will use EVM-based zk circuits, with zk proofs generated on their prover for every transaction or for a large batch of transactions. While this allows developers to build “fully on-chain” applications, can it still run high-performance applications efficiently and economically?

What are these high-performance applications?

People first think of games, on-chain order books, Web3 social, machine learning, genome modeling, and so on. All of these require a lot of computation, and running them on L2 would still be very expensive. Another problem with the EVM is that its computation speed and efficiency currently lag behind other systems such as the SVM (Sealevel Virtual Machine).

Although L3 EVMs can make computation cheaper, the structure of the EVM itself may not be the best fit for compute-heavy workloads because it cannot execute operations in parallel. Each time a new layer is built on top, preserving decentralization requires new infrastructure (a new node network), which means either the same set of providers has to scale, or a whole new set of node providers (individuals or businesses) has to supply resources, or both.

Therefore, every time a more advanced solution is built, either the existing infrastructure needs to be upgraded or a new layer needs to be built on top. To solve this problem, we need post-quantum secure, decentralized, trustless, high-performance computing infrastructure that can truly and efficiently use quantum algorithms to compute for decentralized applications.

Alt-L1s like Solana, Sui, and Aptos can achieve parallel execution, but they are unlikely to challenge Ethereum given market sentiment and their shortage of liquidity, and they lack the trust that Ethereum has earned: its network effects have built a formidable moat. So far, there is no ETH/EVM killer. The question, then, is why all computation should be on-chain. Is there an equally trustless, decentralized execution system? This is what a DCompute system can achieve.

DCompute infrastructure needs to be decentralized, post-quantum secure, and trustless. It does not have to be a blockchain or distributed ledger, but it must be possible to verify computation results, correct state transitions, and finality. This mirrors how EVM chains operate: secure, trustless computation can be moved off-chain while the network keeps its security and immutability.

Here, we largely set aside the issue of data availability. That is not because it is unimportant, but because solutions like Celestia and EigenDA are already heading in that direction.

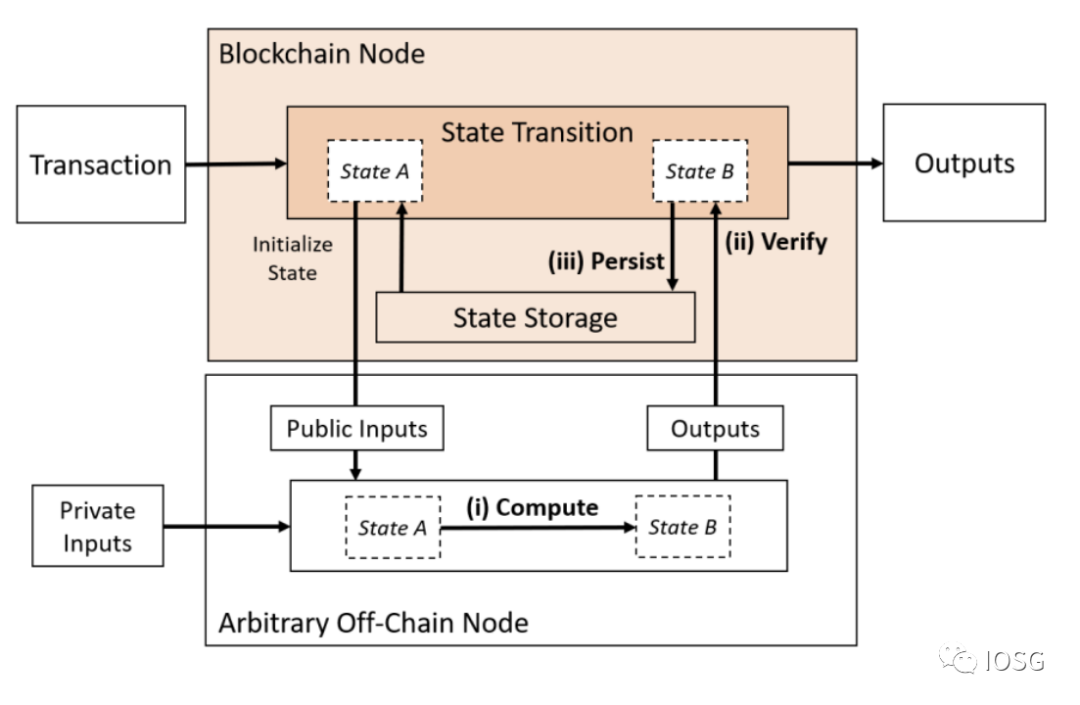

1. Only Compute Outsourced

(Source: Off-chaining Models and Approaches to Off-chain Computations, Jacob Eberhardt & Jonathan Heiss)

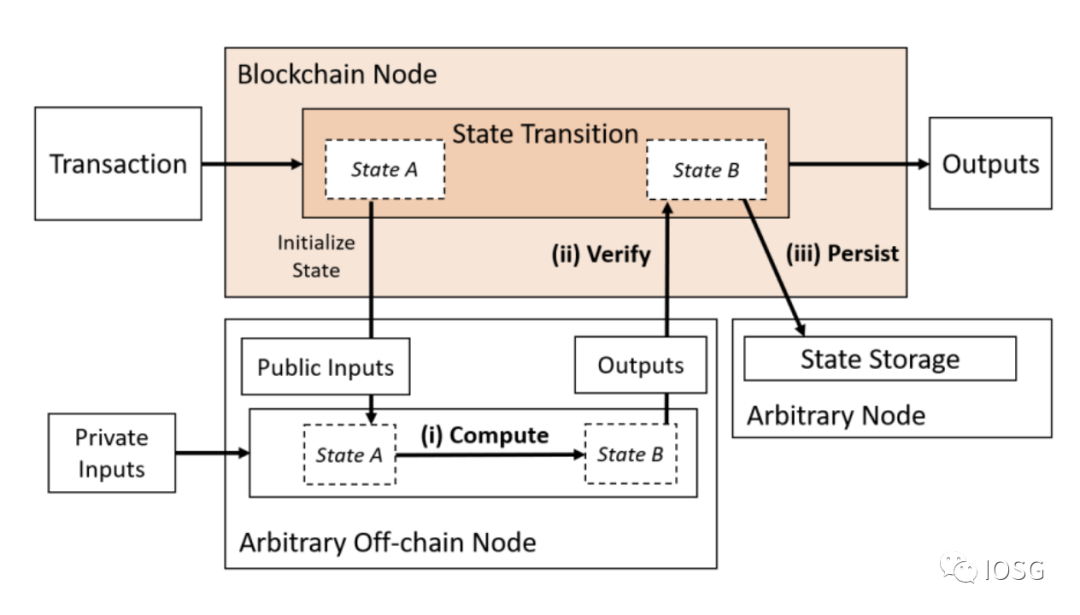

2. Compute and Data Availability Outsourced

(Source: Off-chaining Models and Approaches to Off-chain Computations, Jacob Eberhardt & Jonathan Heiss)

Looking at Type 1, zk-rollups already do this, but they are either limited by the EVM or require developers to learn a whole new language or instruction set. The ideal solution should be efficient, effective (in terms of cost and resources), decentralized, private, and verifiable. ZK proofs can be generated on AWS servers, but that is not decentralized. Solutions like Nillion and Nexus are trying to solve general computation in a decentralized way, but without ZK proofs their results are unverifiable.

Type 2 combines the off-chain computational model with a separate data availability layer that remains detached, but the computation still needs to be verified on-chain.

Let’s take a look at different decentralized computation models that are partially trusted and potentially completely trustless and available today.

Alternative Computation Systems

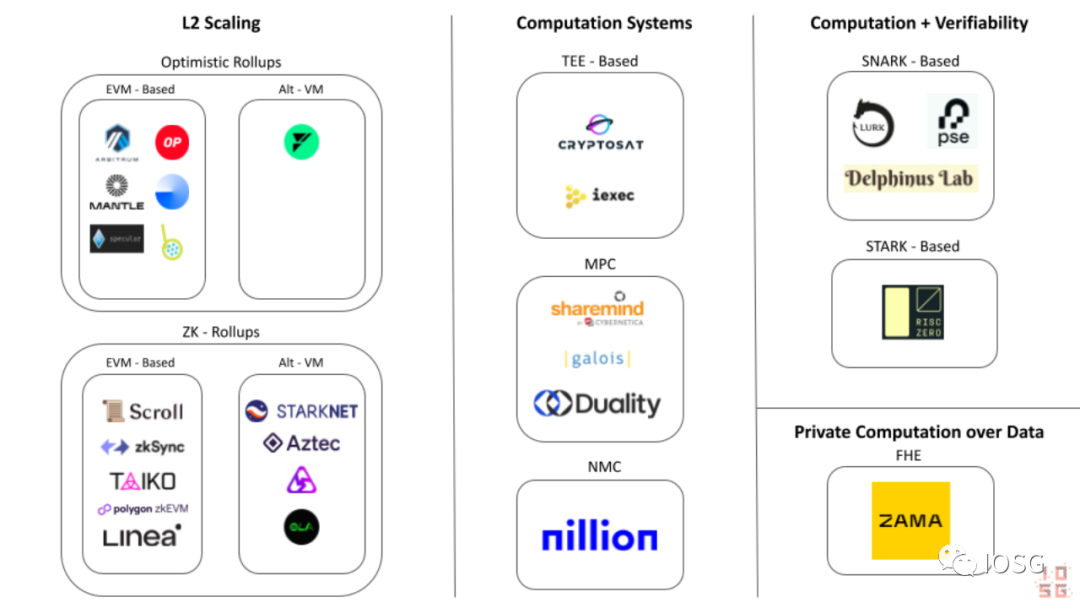

Ethereum Outsourced Computation Ecosystem Map (Source: IOSG Ventures)

- Secure Enclave Computations/Trusted Execution Environments

TEE (Trusted Execution Environment) is like a special box inside a computer or smartphone. It has its own lock and key, and only specific programs (known as trusted applications) can access it. When these trusted applications run inside the TEE, they are protected from other programs and even the operating system itself.

It’s like a secret hiding place that only a few special friends can enter. The most common example of a TEE is a secure enclave, which exists on devices we use, such as Apple’s T1 chip and Intel’s SGX, for running critical operations inside the device, such as FaceID.

Because the TEE is an isolated system, its attestation process is assumed to be uncompromisable, and that assumption is itself a matter of trust. It is like a secure door that you trust because Intel or Apple built it, yet there are enough security breakers in the world (including hackers and other computers) who can break through it. TEEs are not “post-quantum secure,” which means that quantum computers with unlimited resources can break their security. As computers become increasingly powerful, we must keep post-quantum security in mind when building long-term computing systems and cryptographic schemes.

- Secure Multi-Party Computation (SMPC)

SMPC (Secure Multi-Party Computation) is also a computing scheme familiar to blockchain practitioners. The rough workflow in an SMPC network consists of the following three steps:

Step 1: Convert the input of the calculation into shares and distribute them among the SMPC nodes.

Step 2: Perform the actual calculation, usually involving message exchange between SMPC nodes. At the end of this step, each node will have a share of the calculated output value.

Step 3: Send the result shares to one or more result nodes, which run the LSS (linear secret sharing) reconstruction algorithm to rebuild the output.
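The three steps can be sketched with additive secret sharing, one of the simplest sharing schemes. This is a minimal illustration under stated assumptions, not any particular SMPC network's protocol: the function names, the modulus `PRIME`, and the choice of three nodes are all hypothetical. It computes a sum, which needs no message exchange in step 2; operations such as multiplication are where nodes typically have to communicate.

```python
import secrets

# Illustrative field modulus (an assumption of this sketch).
PRIME = 2**61 - 1


def share(value, n_nodes):
    """Step 1: split `value` into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_nodes - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def local_add(shares_a, shares_b):
    """Step 2: node i adds the two shares it holds; it never sees a full input."""
    return [(a + b) % PRIME for a, b in zip(shares_a, shares_b)]


def reconstruct(result_shares):
    """Step 3: a result node sums the output shares to recover the result."""
    return sum(result_shares) % PRIME


# Two parties secret-share their private inputs across three nodes.
alice_shares = share(1200, 3)
bob_shares = share(345, 3)

output_shares = local_add(alice_shares, bob_shares)
print(reconstruct(output_shares))  # 1545
```

Any single share is a uniformly random number, so one node alone learns nothing about either input; only the combination of all output shares reveals the result.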

Imagine an automobile production line, where the construction and manufacture of the car components (engine, doors, mirrors) are outsourced to Original Equipment Manufacturers (OEMs) (working nodes), and then there is an assembly line that assembles all the components together to manufacture the car (result nodes).

Secret sharing is crucial for protecting privacy in decentralized computing models. This prevents a single participant from obtaining the complete “secret” (in this case, the input) and maliciously producing incorrect output. SMPC may be one of the easiest and most secure decentralized systems. Although there is currently no fully decentralized model, it is logically possible.

MPC providers like Sharemind offer MPC infrastructure for computation, but the providers are still centralized. How can privacy be ensured, and how can we be sure that the network (or Sharemind) does not behave maliciously? This is where zk proofs and zk verifiable computation come in.

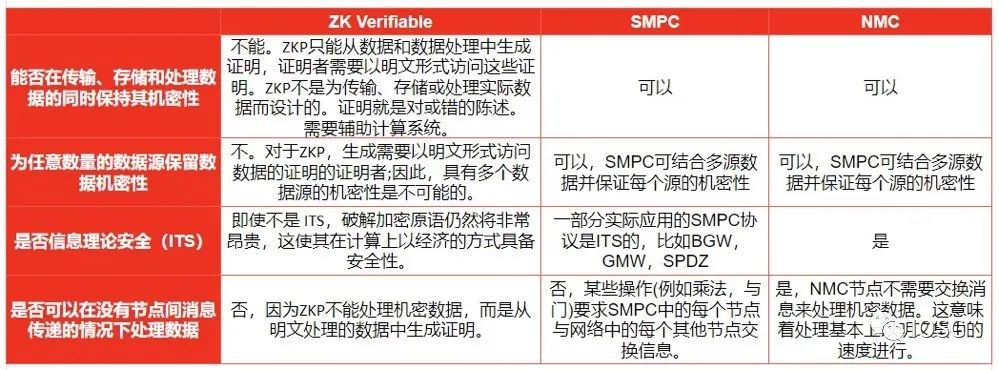

- Nil Message Compute (NMC)

NMC is a new distributed computing method developed by the Nillion team. It is an upgrade to MPC in which nodes do not need to communicate by exchanging results. To achieve this, they use a cryptographic primitive called one-time masking (OTM), which uses a series of random numbers called blinding factors to mask a secret, similar to a one-time pad. OTM aims to provide correctness efficiently, meaning that NMC nodes do not need to exchange any messages to perform computation. As a result, NMC does not suffer from the scalability issues of SMPC.
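To make the one-time-pad analogy concrete, here is a small sketch of masking a secret with a random blinding factor. It is not Nillion's protocol, whose details are not given here. It only shows the general idea that a value hidden behind a fresh random mask can still take part in a simple computation. The names `mask` and `unmask` and the modulus `PRIME` are assumptions made for illustration.

```python
import secrets

# Illustrative modulus (an assumption of this sketch).
PRIME = 2**61 - 1


def mask(secret, blinding_factor):
    """Hide `secret` behind a random blinding factor, like a one-time pad."""
    return (secret + blinding_factor) % PRIME


def unmask(masked, blinding_factor):
    """Remove the blinding factor to recover the underlying value."""
    return (masked - blinding_factor) % PRIME


# Each secret gets its own fresh blinding factor, used only once.
r1 = secrets.randbelow(PRIME)
r2 = secrets.randbelow(PRIME)
masked_a = mask(42, r1)
masked_b = mask(58, r2)

# A node can add the masked values without learning 42 or 58.
masked_sum = (masked_a + masked_b) % PRIME

# Whoever holds the combined blinding factor can unmask the result.
print(unmask(masked_sum, (r1 + r2) % PRIME))  # 100
```

As with a one-time pad, the hiding property depends on each blinding factor being uniformly random and never reused.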

- Zero-Knowledge Verifiable Computation

ZK verifiable computation means generating a zero-knowledge proof for a set of inputs and a function, proving that the computation was executed correctly regardless of which system performed it. Although ZK verifiable computation is a new concept, it is already a critical part of the Ethereum network’s scaling roadmap.

ZK proofs have various implementation forms (as summarized in the paper “Off-Chaining Models”), as shown below:

(Source: IOSG Ventures, Off-chaining Models and Approaches to Off-chain Computations, Jacob Eberhardt & Jonathan Heiss)

Now that we have a basic understanding of the implementation of zk proofs, what are the requirements for using zk verifiable computation?

- First, we need to choose a proof primitive, ideally one that generates proofs at low cost, has low memory requirements, and is easy to verify.

- Second, choose a zk circuit designed to generate proofs of computation with the above primitive.

- Finally, compute the given function on the supplied input in the chosen computation system/network and output the result.

The Developer’s Dilemma – The Proof Efficiency Dilemma

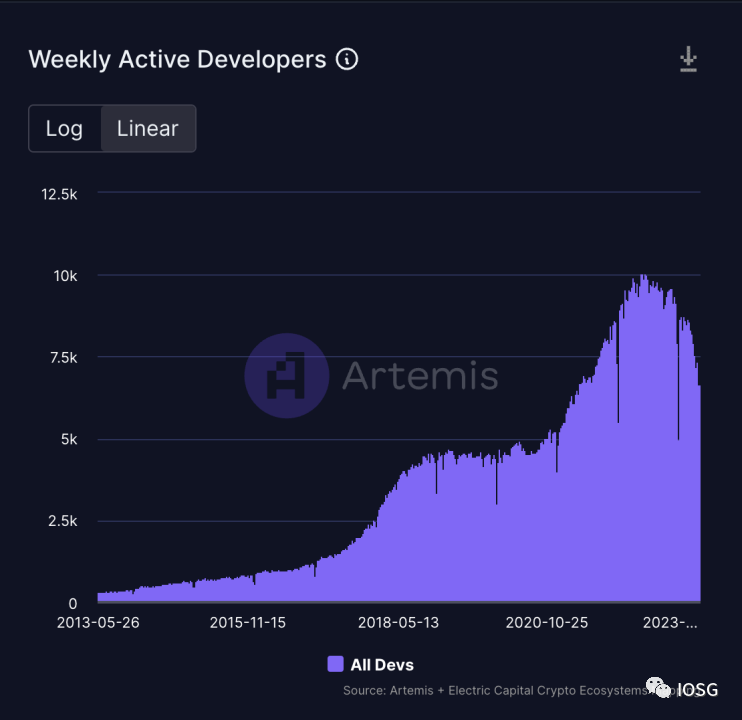

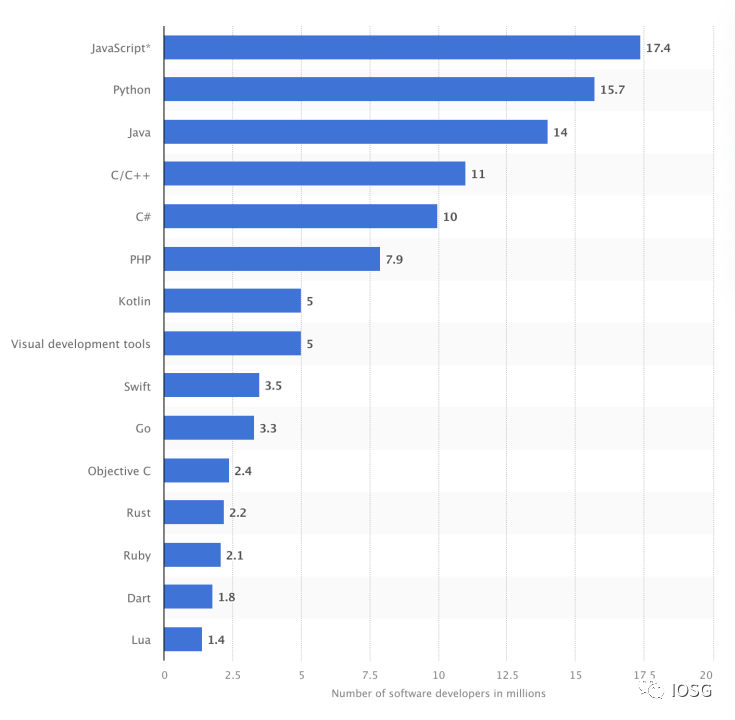

It also has to be said that the barrier to building circuits is still high. Learning Solidity is already not easy for developers. Now they are asked to learn Circom to build circuits, or a specific programming language (such as Cairo) to build zk-apps, which seems like an unreachable goal.

As the above statistics show, it seems more sustainable to make the Web3 environment more suitable for development than to introduce developers to new Web3 development environments.

If ZK is the future of Web3 and Web3 applications need to be built using existing developer skills, then ZK circuits need to be designed so that they can generate proofs of computation for algorithms written in languages such as JavaScript or Rust.

Such a solution does exist, and the author thinks of two teams: RiscZero and Lurk Labs. Both teams have a very similar vision, which is that they allow developers to build zk-apps without having to go through a steep learning curve.

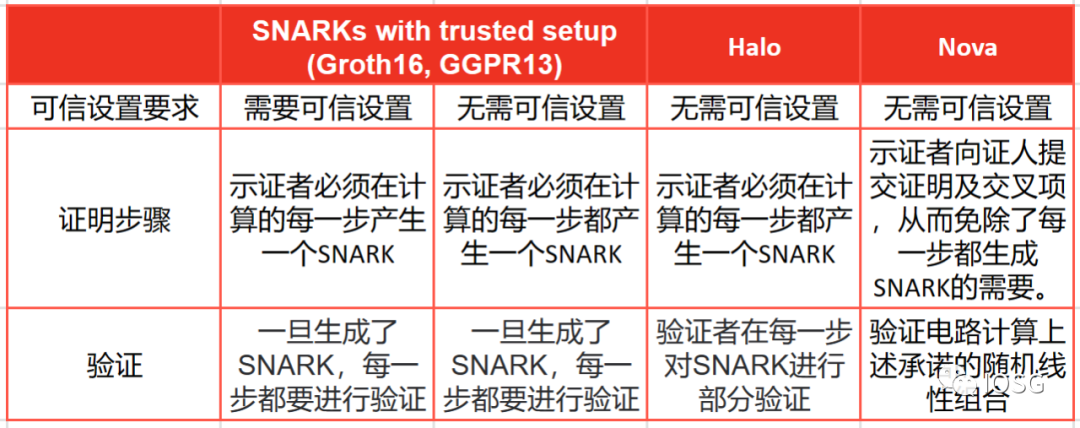

Lurk Labs is still in the early stages, but the team has been working on this project for a long time. They focus on generating Nova proofs through a general-purpose circuit. Nova was proposed by Abhiram Kothapalli from Carnegie Mellon University, Srinath Setty from Microsoft Research, and Ioanna Tzialla from New York University. Compared to other SNARK systems, Nova has a special advantage in incremental verifiable computation (IVC). IVC is a concept in computer science and cryptography that aims to verify a computation without having to recompute it from scratch. When a computation is long and complex, the proof system needs to be optimized for IVC.
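In symbols, IVC can be sketched as follows. The notation is an assumption of this sketch rather than something taken from the article: $F$ is the step function being repeated, $z_i$ is the state after step $i$, $w_i$ is any auxiliary input to step $i$, and $\pi_i$ is the proof that covers all steps up to $i$.

```latex
z_i = F(z_{i-1}, w_i), \qquad
\pi_i = \mathsf{Prove}\bigl(i,\; z_0,\; z_{i-1},\; w_i,\; \pi_{i-1}\bigr), \qquad
\mathsf{Verify}\bigl(i,\; z_0,\; z_i,\; \pi_i\bigr) = 1
```

The prover extends the proof one step at a time using only the previous state and the previous proof, and the verifier checks the single proof $\pi_i$ instead of re-executing all $i$ steps.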

The beauty of RiscZero’s Bonsai network design is that a computation can be initiated, verified, and have its output delivered all on-chain. This all sounds like utopia, but STARK proofs bring a problem of their own: the cost of verification is too high.

Nova proof seems to be very suitable for repeated computations (its folding scheme is economically efficient) and small computations, which may make Lurk a good solution for ML inference verification.

Who are the winners?

(Source: IOSG Ventures)

Some zk-SNARK systems require a trusted setup during the initial phase to generate a set of initial parameters. The trust assumption here is that the setup is executed honestly, without any malicious behavior or tampering. If the setup is compromised, it may become possible to create proofs for invalid statements.

STARK proofs assume the security of low-degree testing, used to verify low-degree properties of polynomials. They also assume that hash functions behave like random oracles.

The correct implementation of the two systems is also a security assumption.

SMPC networks rely on the following:

- SMPC participants can include “honest but curious” participants who may attempt to access any underlying information by communicating with other nodes.

- The security of the SMPC network depends on the assumption that participants correctly execute the protocol and do not intentionally introduce errors or malicious behavior.

- Some SMPC protocols may require a trusted setup phase to generate encrypted parameters or initial values. The trust assumption here is that the trusted setup is executed honestly.

For NMC, the security assumptions remain the same as for an SMPC network, but because of OTM (one-time masking), there are no “honest but curious” participants.

OTM is designed to protect participants’ privacy: because inputs are masked, they are not disclosed to the nodes taking part in the computation. “Honest but curious” participants therefore cannot exist, since they cannot gain access to the underlying information by communicating with other nodes.

Is there a clear winner? We don’t know. But each method has its own advantages. While NMC looks like a clear upgrade to SMPC, the network has not yet been launched or field-tested.

The benefit of using ZK verifiable computation is that it is secure and privacy-preserving, but it does not have built-in secret sharing functionality. The asymmetry between proof generation and verification makes it an ideal model for verifiable outsourcing of computation. If the system uses pure zk verifiable computation, the computer (or single node) must be very powerful to perform a large amount of computation. To enable load sharing and balancing while protecting privacy, secret sharing must be in place. In this case, systems like SMPC or NMC can be combined with zk generators like Lurk or RiscZero to create a powerful distributed verifiable outsourcing computation infrastructure.
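The asymmetry between producing a result and checking it can be illustrated with Freivalds' algorithm, a classic probabilistic check for matrix multiplication. This is a stand-in chosen for simplicity. It is not zero-knowledge and it is not how SNARKs or STARKs work. It only shows a verifier doing far less work than the party that performed the computation. The function names are hypothetical.

```python
import random


def matmul(a, b):
    """The expensive job an outsourced worker performs: roughly n^3 operations."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def matvec(m, v):
    """Matrix-vector product: roughly n^2 operations."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def freivalds_check(a, b, c, rounds=20):
    """Check the claim a @ b == c using only matrix-vector products.

    A correct claim always passes. A wrong claim passes all rounds with
    probability at most 2**-rounds.
    """
    n_cols = len(c[0])
    for _ in range(rounds):
        r = [random.randint(0, 1) for _ in range(n_cols)]
        if matvec(a, matvec(b, r)) != matvec(c, r):
            return False
    return True


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
claimed = matmul(a, b)  # computed by the untrusted worker
print(freivalds_check(a, b, claimed))  # True
```

The verifier never multiplies the two matrices itself, which is the same shape of trade-off that makes proof verification attractive for outsourced computation.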

Today’s MPC/SMPC networks are centralized, and decentralizing them is becoming increasingly important. Currently, the largest MPC provider is Sharemind, and a ZK verification layer on top of it could prove useful. The economic model for a decentralized MPC network has not yet been proven. In theory, the NMC model is an upgrade for MPC systems, but we have not yet seen it succeed.

In the ZK proof scheme competition, there may not be a winner-takes-all situation. Each proof method is optimized for specific types of computation and there is no one-size-fits-all model. There are many types of computational tasks, and it also depends on the trade-offs made by developers on each proof system. The author believes that STARK-based systems and SNARK-based systems and their future optimizations have a place in the future of ZK.
