Disassembling Rollup Economics: Business Models, Interoperability, and Layer 3

Author: @DavideCrapis

Translation: Huohuo/Baihua Blockchain

Have you ever wondered how Rollups generate such high revenue?

Base has achieved over $2 million in revenue in less than 3 weeks… Inspired by the recent post by @DavideCrapis titled “Rollup Economics 2.0,” let’s delve into the economic model of Rollup.

Key points of this article:

1) The “old-fashioned” business model of Rollup

2) How Rollup design becomes more complex

3) Interoperability between Rollups

4) The role of Layer 3

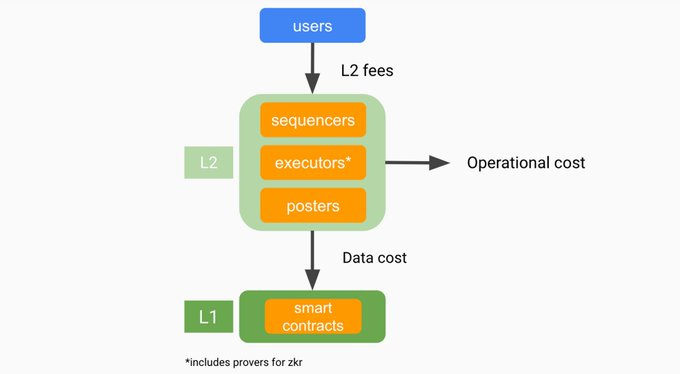

The diagram describes the “old-fashioned” Rollup model as a revenue-generating business. As you can see, the system has three main components:

– Users

– Rollup operators (sequencer, executor, publisher)

– Layer 1 (L1)

How it works: users pay L2 fees (gas) to the Rollup operators, who in turn bear operating costs (compute, servers, etc.) and data costs (publishing transaction data back to L1).

Revenue is therefore the fees that users pay; subtracting the costs of operations and data publishing leaves the profit.
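The accounting above can be written as a small sketch. The class name, field names, and figures are illustrative assumptions, not data from any real Rollup.

```python
from dataclasses import dataclass


@dataclass
class RollupPeriod:
    """One accounting period for a Rollup operator (hypothetical, amounts in USD)."""
    l2_fees: float         # fees users pay on L2 (revenue)
    operating_cost: float  # compute, servers, etc.
    data_cost: float       # cost of publishing transaction data to L1

    def profit(self) -> float:
        # Profit = user fees - operating costs - data costs
        return self.l2_fees - self.operating_cost - self.data_cost


# Made-up numbers, for illustration only.
period = RollupPeriod(l2_fees=1000.0, operating_cost=100.0, data_cost=600.0)
print(period.profit())  # 300.0
```

In this toy example the data cost is by far the largest expense, which is why the design decisions discussed next focus so heavily on reducing it.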

The dilemma for Rollup is: creating a balanced environment or pursuing profits?

Every DAO or core team behind a Rollup must make key design decisions about optimizing L2 fees, redistributing MEV (Maximal Extractable Value), and reducing data costs.

Is it about retaining profits or giving back to the community? 🧐

This is where Rollups are becoming more complex: they strive to increase security through shared governance, increase efficiency through shared economics, and improve user experience by reducing liquidity fragmentation. For example, @arbitrum has been gradually decentralizing its sequencer, but the community recently found that this is not its top priority.

In fact, Rollup faces trade-offs in three areas:

1) Sequencing -> operational costs of decentralization + costs of incentivizing participants.

2) Data availability -> the high cost of publishing data on L1.

3) State verification -> zkRollups have instant finality, but maintaining this feature is also costly.

Each team has to choose among these trade-offs. As a user, I always find it beneficial to understand these choices.

Security is obviously the most important. However, what if your users choose to use another cheaper and faster chain that doesn’t care much about security? This is the dilemma for builders. 🤦‍♂️

You see, this is why I feel sympathetic towards the builders in this field. The true decentralization ideals of some high-quality teams are often overshadowed by marketing, hype, and short-term thinking. That’s life, and it’s a fiercely competitive market. However, we will see who will emerge victorious in the long run…

Back to this dilemma, and the solution proposed by @DavideCrapis: aggregation.

Shared sequencing, batch publishing, and shared proving are at the forefront of optimization. These measures improve efficiency by reducing data costs. For Rollups, future collaboration seems like the obvious choice.

But does this bring security risks? What if the shared sequencer crashes? Or if the prover cannot function properly? These are difficult questions. But one thing is certain: collaboration between Rollups can promote economic productivity. Shared services can lower costs, simplify transactions, and facilitate healthy growth.

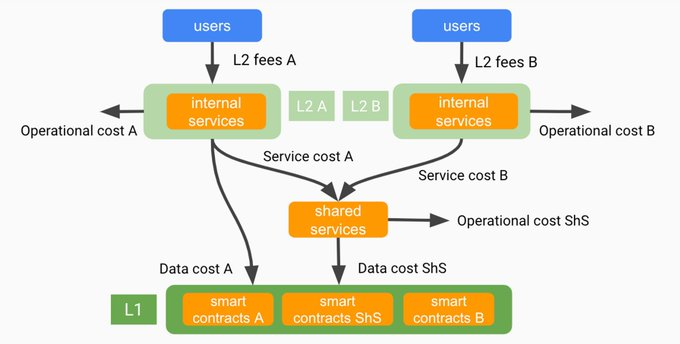

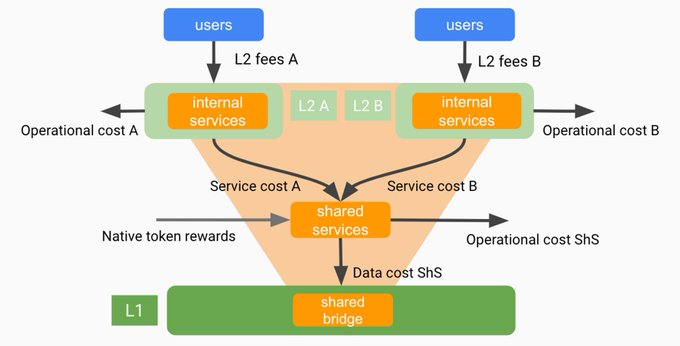

The reasoning is simple, as shown in the chart above. You now have two sets of user fees, but only one shared service cost.

The formula is simple, as described by @DavideCrapis:

The idea of the proposed “Rollup Alliance” is quite exciting. In a perfect world, Rollups within the same “alliance” would naturally embrace shared services, as the cost of direct data publishing would be distributed among them.

These Rollups share a bridge with L1, splitting the costs of all services and data publishing. This will become very powerful after EIP-4844.
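The cost-sharing intuition can be illustrated with a toy calculation. This is only a sketch and not the formula from @DavideCrapis’s post: the function names, the pro rata split by fees, and all figures are assumptions made for illustration.

```python
def standalone_profit(fees: float, own_cost: float, service_cost: float) -> float:
    """Profit of a Rollup that pays the full service cost by itself."""
    return fees - own_cost - service_cost


def alliance_profits(
    members: dict[str, tuple[float, float]], shared_service_cost: float
) -> dict[str, float]:
    """Profit per Rollup when ONE service cost is split pro rata by fees.

    members maps a Rollup name to (fees, own_cost).
    The pro rata rule is an assumption; an alliance could split costs differently.
    """
    total_fees = sum(fees for fees, _ in members.values())
    if total_fees <= 0:
        raise ValueError("total fees must be positive to split costs pro rata")
    return {
        name: fees - own_cost - shared_service_cost * fees / total_fees
        for name, (fees, own_cost) in members.items()
    }


# Hypothetical Rollups "A" and "B"; the sequencing/publishing service costs 500 either way.
members = {"A": (600.0, 50.0), "B": (400.0, 50.0)}

alone = {name: standalone_profit(f, c, 500.0) for name, (f, c) in members.items()}
together = alliance_profits(members, shared_service_cost=500.0)

print(alone)     # {'A': 50.0, 'B': -150.0}
print(together)  # {'A': 250.0, 'B': 150.0}
```

With these made-up numbers, Rollup B is unprofitable on its own but profitable inside the alliance, because the two Rollups collect two sets of fees while paying for the shared service only once.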

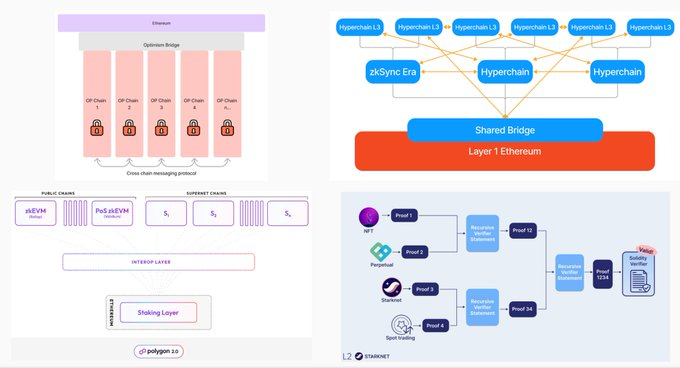

Examples of such architectures include @zksync’s Hyperchains, @0xPolygonDeFi’s Supernets, and @Starknet. The core concepts are ‘infinite’ scalability, shared bridging, and cost aggregation across the individual Rollups.

Lastly, we have Layer 3. For me, these are particularly interesting for community-specific use cases such as games and social media. The reason is that they don’t require the high degree of compatibility that decentralized exchanges (DEXs) or money markets do. They can therefore run in their own execution environments, enjoying very low costs and high transaction speeds. These Layer 3s (L3s) will “roll up” to Layer 2 (L2) with minimal batching fees, because gas on L2 is very cheap.

As for the economic model, the fees of Layer 3 (L3) become an additional source of income for Layer 2 (L2), increasing their overall revenue.

In addition, more users = more opportunities for the team to leverage sales of products, mint NFTs, or create profitable dApps.

As shown in the following image, more fees are charged:

As with L2, the associated costs include computational/operational costs and data costs.
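The effect of L3s on L2 economics can be sketched in the same style. The function and parameter names and all figures are hypothetical, and the sketch makes the simplifying assumption that hosting L3 batches adds no extra cost for the L2.

```python
def l3_profit(l3_user_fees: float, l3_operating_cost: float, l3_settlement_fees: float) -> float:
    """L3 profit: user fees minus operating costs and the fees paid to L2 for settlement/data."""
    return l3_user_fees - l3_operating_cost - l3_settlement_fees


def l2_profit(
    l2_user_fees: float,
    l3_settlement_fees: float,
    l2_operating_cost: float,
    l2_data_cost: float,
) -> float:
    """L2 profit: its own user fees plus fees received from L3s, minus its costs."""
    return l2_user_fees + l3_settlement_fees - l2_operating_cost - l2_data_cost


# Made-up numbers, for illustration only.
without_l3 = l2_profit(1000.0, 0.0, 100.0, 600.0)
with_l3 = l2_profit(1000.0, 50.0, 100.0, 600.0)

print(without_l3)                    # 300.0
print(with_l3)                       # 350.0
print(l3_profit(200.0, 20.0, 50.0))  # 130.0
```

The fee the L3 pays is a data cost from the L3’s point of view and additional income from the L2’s point of view.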

For an L2, deploying L3s is undoubtedly a wise choice: it increases revenue, attracts more users, and creates new layers!

It is an exciting era for Rollups 🙂
