Will the combination of the ZK and OP methods become the future of Ethereum Rollup?

Original title: “OP+ZK, will Hybrid Rollup become the ultimate future of Ethereum expansion?”

Original author: @kelvinfichter

Translated by: Jaleel, BlockBeats

I’ve recently become convinced that the future of Ethereum Rollups is a hybrid of the two primary approaches, ZK and Optimistic. In this article, I will try to lay out the basic architecture I have in mind and explain why I believe this is the direction we should be heading. Please note that I spend most of my time working on Optimism, and therefore on Optimistic Rollups, and I am not a ZK expert. If I make any mistakes when discussing the ZK side, please feel free to contact me and I will correct them.

I’m not going to go into detail in this article about how ZK and Optimistic Rollups work. If I were to take the time to explain the essence of Rollups, this article would be too long. So this article is based on the assumption that you already have some understanding of these technologies. Of course, you don’t need to be an expert, but at least you should know what ZK and Optimistic Rollups are and how they generally work. In any case, please enjoy reading this article.

Let’s start with Optimistic Rollup

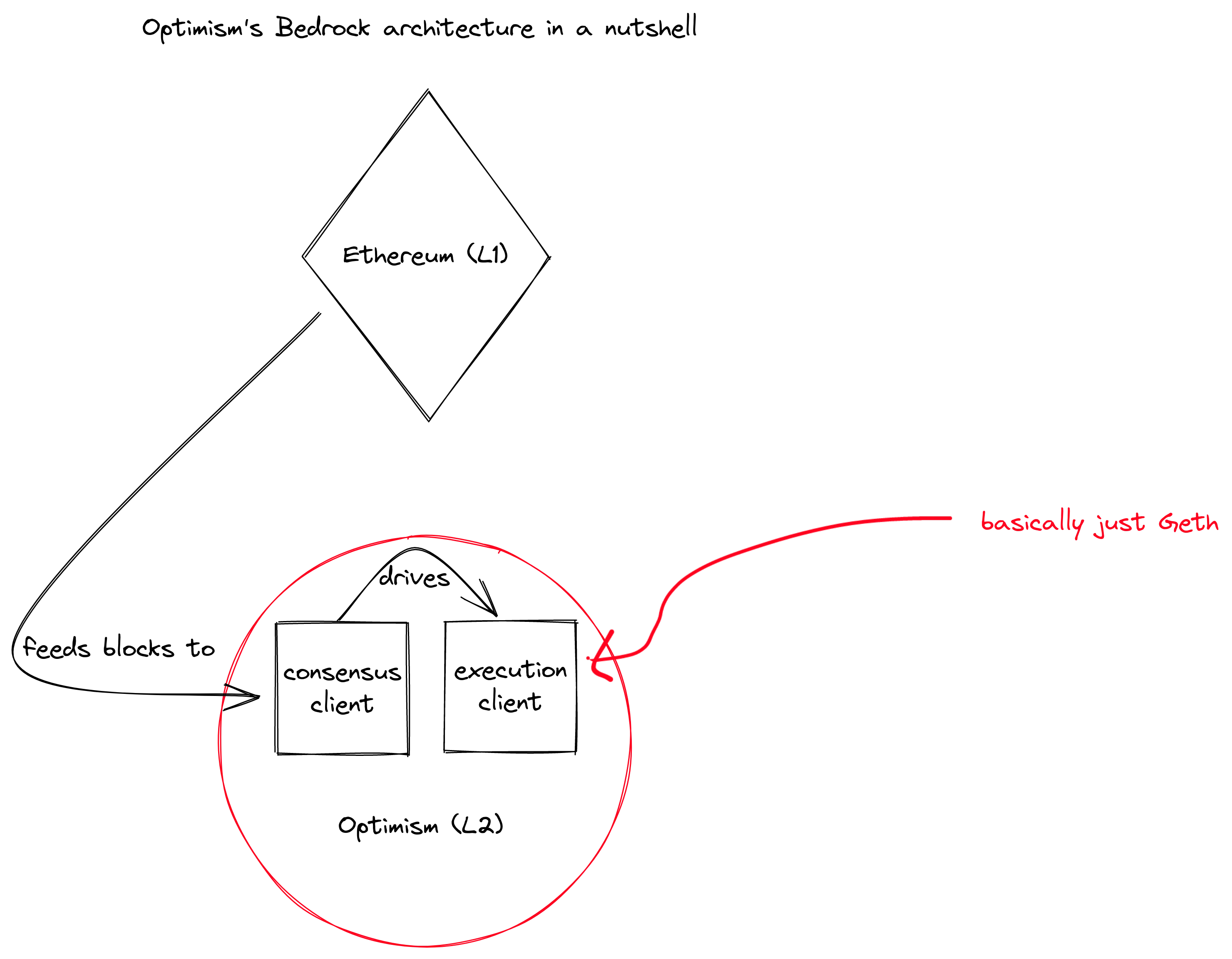

The hybrid ZK/Optimistic system I have in mind starts from the Bedrock architecture of Optimism’s Optimistic Rollup. Bedrock is designed to be as compatible with Ethereum as possible (“EVM equivalent”), which it achieves by running an execution client that is almost identical to an Ethereum client. Bedrock leverages Ethereum’s upcoming consensus/execution client separation model, significantly reducing the differences from the EVM (some changes will still be needed along the way, but we can handle them).

Like all good Rollups, Optimism extracts block/transaction data from Ethereum, orders this data deterministically in a consensus client, and feeds it to an L2 execution client for execution. This architecture solves the first half of the “ideal Rollup” puzzle and gives us an EVM-equivalent L2.
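The derive-then-execute pipeline described above can be sketched in a few lines of Python. This is a toy illustration only, not Bedrock’s actual derivation code: the `L1Tx` type, the `derive_l2_inputs` and `execute` functions, and the balance-transfer state model are all hypothetical names invented for this example. The point it shows is that, because ordering depends only on data already fixed on L1, every node that reads the same L1 data computes the same L2 state.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class L1Tx:
    """Hypothetical rollup transaction as it was posted to L1."""
    block_number: int  # L1 block that contains the data
    index: int         # position inside that L1 block
    sender: str
    recipient: str
    amount: int


def derive_l2_inputs(l1_txs: List[L1Tx]) -> List[L1Tx]:
    """'Consensus client' role: order inputs using only L1-fixed data."""
    return sorted(l1_txs, key=lambda tx: (tx.block_number, tx.index))


def execute(state: Dict[str, int], txs: List[L1Tx]) -> Dict[str, int]:
    """'Execution client' role: apply the ordered inputs to the state."""
    new_state = dict(state)
    for tx in txs:
        # Skip transfers the sender cannot afford; skipping is deterministic too.
        if new_state.get(tx.sender, 0) >= tx.amount:
            new_state[tx.sender] = new_state.get(tx.sender, 0) - tx.amount
            new_state[tx.recipient] = new_state.get(tx.recipient, 0) + tx.amount
    return new_state
```

Two nodes that receive the same L1 transactions in different arrival orders still end up with identical states, which is what makes the later proof step (optimistic or ZK) well defined.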

Of course, the problem we need to solve now is: how to inform Ethereum about what happens inside Optimism in a verifiable way. If this problem is not solved, smart contracts cannot make decisions based on the state of Optimism. This would mean that users could deposit to Optimism, but could not withdraw their assets. While one-way Rollup is feasible in certain situations, two-way Rollup is more effective in most cases.

We can inform Ethereum of the state of any Rollup by providing a commitment to that state together with a proof that the commitment was generated correctly. In other words, we are proving that the “Rollup program” was executed correctly. The only substantive difference between ZK and Optimistic Rollups is the form of this proof. In a ZK Rollup, you must provide an explicit zero-knowledge proof that the program was executed correctly. In an Optimistic Rollup, you can publish a commitment without providing an explicit proof. Other users can then challenge your claim and force you into a back-and-forth “game” that determines who is ultimately correct.

I am not going to go into detail about the challenge part of Optimistic Rollup. It is worth noting that the state of the art in this area is to compile your program (in the case of Optimism, Geth’s EVM plus some components around the edges) down to a simple machine architecture, such as MIPS. We do this because we need to build an interpreter for the program on-chain, and building a MIPS interpreter is much easier than building an EVM interpreter. The EVM is also a moving target (we have regular upgrade forks) and does not fully cover everything we want to prove in the program (there are also some non-EVM things inside).

Once you have built an on-chain interpreter for your simple machine architecture and created some off-chain tooling, you should have a fully functional Optimistic Rollup.
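To make the role of the on-chain interpreter more concrete, here is a minimal Python sketch of a bisection-style challenge game. It is an assumption-laden toy, not Optimism’s fault proof implementation: `toy_step` stands in for a single MIPS instruction, machine states are plain integers rather than state commitments, and `run_trace`, `bisect_dispute`, and `asserter_wins` are hypothetical names. The idea it illustrates is that two parties who agree on the starting state but disagree on the final state can narrow the dispute down to a single step, and only that one step has to be re-executed by the interpreter.

```python
from typing import Callable, List

State = int  # stand-in for a machine state (or a commitment to one)


def toy_step(state: State) -> State:
    """Hypothetical single-instruction transition of the simple machine."""
    return (state * 3 + 1) % 1_000_003


def run_trace(start: State, steps: int,
              step: Callable[[State], State] = toy_step) -> List[State]:
    """Off-chain tooling: record the state after every instruction."""
    trace = [start]
    for _ in range(steps):
        trace.append(step(trace[-1]))
    return trace


def bisect_dispute(asserted: List[State], challenger: List[State]) -> int:
    """Return index i where both agree on state i but disagree on state i + 1."""
    lo, hi = 0, len(asserted) - 1
    if asserted[lo] != challenger[lo] or asserted[hi] == challenger[hi]:
        raise ValueError("parties must agree at the start and disagree at the end")
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if asserted[mid] == challenger[mid]:
            lo = mid  # still in agreement: the dispute lies later
        else:
            hi = mid  # already diverged: the dispute lies earlier
    return lo


def asserter_wins(asserted: List[State], i: int,
                  step: Callable[[State], State] = toy_step) -> bool:
    """'On-chain' check: re-execute only the single disputed instruction."""
    return step(asserted[i]) == asserted[i + 1]
```

The number of bisection rounds grows with the logarithm of the trace length, so even a very long execution requires only one instruction to be run by the interpreter, which is why a small, simple instruction set is attractive here.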

Turning to ZK Rollup

Overall, I believe that Optimistic Rollups will dominate in the next few years. Some people believe that ZK Rollups will eventually surpass Optimistic Rollups, but I do not agree with this view. I think that the current relative simplicity and flexibility of Optimistic Rollups means that they can gradually transition to ZK Rollups. If we can find a pattern that achieves this transition, there is no need to go through the effort of building a less flexible, more fragile ZK ecosystem; we can simply deploy to an existing Optimistic Rollup ecosystem.

Therefore, my goal is to create an architecture and migration path that allows existing modern OP ecosystems (such as Bedrock) to seamlessly transition to ZK ecosystems. I believe this is not only feasible, but also a way to surpass the current zkEVM method.

We start with the Bedrock architecture described above. Note that I have (briefly) explained that Bedrock has a challenge game that can verify the validity of an execution of the L2 program (the EVM plus some extra components, compiled to MIPS). One major drawback of this approach is that we need to reserve a period of time during which users can detect and successfully challenge an incorrect proposed program result. This adds a considerable amount of time to the withdrawal process (7 days on the current Optimism mainnet).

However, our L2 is just a program running on a simple machine (such as MIPS). We can definitely build a ZK circuit for this simple machine. Then, we can use this circuit to explicitly prove the correct execution of the L2 program. Without modifying the current Bedrock codebase, you can start publishing validity proofs for Optimism. It’s that simple in practice.

Why is this method reliable?

Just to clarify: although I mentioned “zkMIPS” in this section, in fact, I use it as a term representing all general and simplified zero-knowledge proof virtual machines (zkVM).

zkMIPS is easier than zkEVM

Building a zkMIPS (or any other kind of zkVM) has a significant advantage over building a zkEVM: the architecture of the target machine is simple and static. The EVM changes often: gas costs are adjusted, opcodes change, and elements are added or removed. MIPS-V has not changed since 1996. By focusing on zkMIPS, you are dealing with a fixed problem space. Whenever the EVM is updated, you don’t need to change or even re-audit your circuit.

zkMIPS is more flexible than zkEVM

Another key point is that zkMIPS is more flexible than zkEVM. Through zkMIPS, you can change client code at will, perform various optimizations, or improve the user experience without corresponding circuit updates. You can even create a core component that turns any blockchain into a ZK Rollup, not just Ethereum.

Your problem becomes one of proof time

Zero-knowledge proof time scales along two axes: the number of constraints and the size of the circuit. By focusing on circuits for simple machines like MIPS (rather than more complex machines like the EVM), we can significantly reduce the size and complexity of the circuit. However, the number of constraints depends on the number of machine instructions executed. Each EVM opcode is broken down into multiple MIPS opcodes, which means that the number of constraints, and with it your overall proof time, increases significantly.
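The trade-off in the previous paragraph can be written as a back-of-the-envelope model. The Python sketch below is a deliberate simplification made up for illustration: it assumes total constraints are roughly the number of executed steps times the constraints needed per step, and every number in the usage example is a placeholder, not a measurement of any real prover.

```python
def total_constraints(steps: int, constraints_per_step: int) -> int:
    """Simplified model: total work = executed steps x per-step circuit cost."""
    return steps * constraints_per_step


def zkmips_constraints(evm_ops: int, mips_steps_per_evm_op: int,
                       constraints_per_mips_step: int) -> int:
    """Each EVM opcode expands into several MIPS instructions before proving."""
    return total_constraints(evm_ops * mips_steps_per_evm_op,
                             constraints_per_mips_step)


def zkevm_constraints(evm_ops: int, constraints_per_evm_op: int) -> int:
    """A zkEVM proves each EVM opcode directly with a larger per-step circuit."""
    return total_constraints(evm_ops, constraints_per_evm_op)


# Purely illustrative placeholder inputs (not benchmarks):
mips_total = zkmips_constraints(evm_ops=1_000, mips_steps_per_evm_op=50,
                                constraints_per_mips_step=100)
evm_total = zkevm_constraints(evm_ops=1_000, constraints_per_evm_op=2_000)
```

Under this model the zkMIPS route does more total work whenever the expansion factor times the per-MIPS-step cost exceeds the per-EVM-opcode cost, which is why the rest of the argument rests on how far the prover for a fixed, simple step can be optimized.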

However, reducing proof time is a problem that sits squarely in the Web2 domain. Since the MIPS machine architecture is unlikely to change any time soon, we can heavily optimize the circuit and prover without worrying about future changes to the EVM. I am quite confident that the pool of senior hardware engineers who can optimize a well-defined problem is ten or even a hundred times larger than the pool of engineers able to build and audit a constantly changing zkEVM target. A company like Netflix probably has a large number of hardware engineers optimizing transcoding chips, and they would likely be happy to take a pile of venture capital money to tackle an interesting ZK challenge.

For circuits like this, the initial proof time may exceed the 7-day Optimistic Rollup withdrawal period. As time goes on, this proof time will only decrease. By introducing ASICs and FPGAs, we can significantly speed up proving. With a static target, we can build a more optimized prover.

Eventually, the proof time for this circuit will drop below the current 7-day Optimism withdrawal period, and we can start considering removing Optimism’s challenge process. Running a prover for 7 days may still be too expensive, so we may want to wait a while longer, but the point stands. You can even run the two proof systems side by side, so that we can start using ZK proofs as soon as possible and fall back to optimistic proofs if the prover fails for any reason. When ready, the optimistic proofs can be removed in a way that is completely transparent to applications, and your Optimistic Rollup becomes a ZK Rollup.
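Running both proof systems side by side can be pictured as a simple finality rule. The Python sketch below is one possible reading under stated assumptions, not a description of any deployed contract: `StateClaim`, `is_final`, and the field names are hypothetical, and the choice to treat a successful challenge as overriding a validity proof is a conservative design assumption of this example.

```python
from dataclasses import dataclass
from typing import Optional

CHALLENGE_WINDOW_SECONDS = 7 * 24 * 60 * 60  # the 7-day window from the text


@dataclass
class StateClaim:
    """Hypothetical record of a proposed L2 state commitment."""
    proposed_at: int                          # timestamp of the proposal
    validity_proof_ok: Optional[bool] = None  # None means no ZK proof submitted
    successfully_challenged: bool = False     # outcome of the optimistic game


def is_final(claim: StateClaim, now: int) -> bool:
    """Finalize early with a valid ZK proof, else fall back to the window."""
    if claim.successfully_challenged:
        # Assumption: a lost challenge game always blocks finalization.
        return False
    if claim.validity_proof_ok is True:
        # Fast path: a verified validity proof makes waiting unnecessary.
        return True
    # Fallback path: no usable proof, so wait out the full challenge window.
    return now - claim.proposed_at >= CHALLENGE_WINDOW_SECONDS
```

If the prover is slow or down, nothing breaks: claims simply finalize after the usual window. Removing the optimistic path later amounts to deleting the fallback branch, which applications reading finalized state would not notice.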

You can focus on other important problems

Running a blockchain is a complex problem that involves not only writing a lot of backend code. At Optimism, much of our work is focused on improving the user and developer experience by providing useful client tools. We also spend a lot of time and effort on “soft” issues: talking to projects, understanding their pain points, and designing incentive mechanisms. The more time you spend on chain software, the less time you have to deal with these other things. While you can always try to hire more people, organizations do not scale linearly, and each new employee adds to the cost of internal communication.

Because the work of zero-knowledge circuits can be directly applied to the running chain, you can simultaneously build the core platform and develop proof software. Because clients can be modified without changing the circuit, you can decouple your client and proof teams. An Optimistic Rollup implemented in this way may be years ahead of zero-knowledge competitors in actual chain activity.

Conclusion

To be very frank, I don’t think the zkMIPS prover has any obvious shortcomings unless it cannot be significantly optimized over time. The only real impact on the application, I think, is that the gas cost of different opcodes may need to be adjusted to reflect the increased proof time of these opcodes. If this prover really can’t be optimized to a reasonable level, then I admit I failed. But if this prover can really be optimized, the zkMIPS/zkVM approach may completely replace the current zkEVM approach. This may sound like a radical statement, but not long ago, single-step optimistic fault proofs were completely replaced by multi-step proofs.
