ChatGPT's six months of popularity: hot money, giants, and regulation

After six months of baptism by AI, it may be hard to find a better term than “stress response” to describe the state of everyone in the technology industry today: tense, excited, and under pressure.

“Stress response” refers to a series of reactions that organisms produce to maintain the stability of their bodies when faced with external environmental stress or threats. It is a natural response that organisms generate to adapt to the environment and ensure survival. This response can be temporary or long-term.

On July 26th, OpenAI announced on its official Twitter account that the Android version of ChatGPT was available for download in the United States, India, Bangladesh, and Brazil, with plans to expand to more countries soon. ChatGPT is expanding its channels, gaining more users and stronger user retention, and the wave of generative AI continues to rise.

In early July, at the 2023 World Artificial Intelligence Conference (WAIC) in Shanghai, a technician from a large-model startup was hurrying around the exhibition hall, looking for a cost-effective domestic chip solution for training the company's large model.

“We have 1000 A100s, but it’s not enough at all,” she told Huxiu.

The A100 is a high-end NVIDIA GPU and the hardware foundation of ChatGPT's rise. According to some publicly available data, OpenAI used about 25,000 NVIDIA GPUs to train its GPT series of models. As a result, the first step in building a large model has become almost an industry ritual: work out how many A100 cards you can get.

Where are the GPUs? Where is cheap computing power? These were just a few of the many questions at the 2023 WAIC.

Almost everyone who has been “stressed” in the past six months is eager to find more answers about AI at this “grand event”.

Scene of the 2023 WAIC

A technician from a chip exhibitor told Huxiu that during the days of the WAIC conference, many product managers came to their “large-scale model” booth, hoping to find product definitions for their company’s large-scale model business.

On May 28th, at the Zhongguancun Forum, the Institute of Scientific and Technical Information of China released a research report on the “Map of China’s Large-scale AI Models”, which showed that as of the end of May, there were 79 large-scale models in China with parameter scales exceeding 1 billion. In the following two months, a series of AI large-scale models were released, including Alibaba Cloud’s Tongyi Wanxiang, Huawei Cloud’s Pangu 3.0, and Youdao’s “Ziyue”. According to incomplete statistics, there are now over 100 large-scale AI models in China.

The actions of domestic companies rushing to release large-scale AI models are the best manifestation of “stress response”. The anxiety brought about by this “response” is being transmitted to almost all relevant personnel in the industry, from CEOs of Internet giants to researchers in AI research institutions, from partners of venture capital funds to founders of AI companies, and even many AI-related legal practitioners, as well as regulators in data and network security.

For those outside the industry, this may be nothing more than a passing carnival, but how many people today dare say that AI has nothing to do with them?

AI is opening a new era, and, as the saying goes, everything is worth rebuilding with large models. More and more people are starting to think about the consequences once the technology spreads.

Funds are pouring in, and the flywheel has started

Within a month of ChatGPT's birth, Li Zhifei, founder of Mobvoi, visited Silicon Valley twice and talked about large models with everyone he met. Speaking with Huxiu, Li Zhifei said bluntly that this is his last “all in” bet.

In 2012, Li Zhifei founded Mobvoi, an AI company built around voice interaction and integrated software and hardware. It has lived through the ups and downs of two waves of AI in China. At the height of the last AI wave, Mobvoi's valuation reached unicorn level, but the company then went through a period of decline. It was not until ChatGPT appeared that the long-dormant AI industry was jolted awake.

In the primary market, “hot money is pouring in.”

That has been the industry consensus on large models over the past six months. Lu Qi, founder of MiraclePlus (Qiji Chuangtan), believes that AI large models are a “flywheel” and that the future will be an era in which models are everywhere. “This flywheel has been set in motion,” he said, and its biggest driving force is capital.

In early July, data from the business information platform Crunchbase showed that companies classified as AI raised $25 billion in the first half of 2023, 18% of global funding. That is down from $29 billion in the first half of 2022, but total funding across all sectors worldwide fell by 51% over the same period, so AI's share of global funding nearly doubled. Crunchbase wrote in the report, “If it weren’t for the AI boom caused by ChatGPT, the financing amount in 2023 would be even lower.”
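The “nearly doubled” conclusion follows from a short calculation on the Crunchbase figures above. The sketch below reproduces it; the only inputs are the reported numbers, and the variable names are illustrative. Because those inputs are rounded, the result is approximate.

```python
# Reported Crunchbase figures (USD billions, first half of each year).
AI_H1_2022 = 29.0
AI_H1_2023 = 25.0
AI_SHARE_H1_2023 = 0.18   # AI's share of all global funding in H1 2023
GLOBAL_DECLINE = 0.51     # drop in total global funding, H1 2023 vs H1 2022

# A share is AI funding divided by global funding, so the ratio of the two
# shares equals the ratio of AI funding divided by the ratio of global funding.
ai_ratio = AI_H1_2023 / AI_H1_2022        # AI funding fell to about 0.86x
global_ratio = 1 - GLOBAL_DECLINE         # global funding fell to 0.49x
share_ratio = ai_ratio / global_ratio     # factor by which AI's share grew

# Implied H1 2022 share, backed out from the reported H1 2023 share.
implied_share_h1_2022 = AI_SHARE_H1_2023 / share_ratio

print(f"AI share grew by a factor of {share_ratio:.2f}")
print(f"Implied AI share in H1 2022: {implied_share_h1_2022:.1%}")
```

The implied share rises from roughly 10% to 18%, a factor of about 1.8, which is what “nearly doubled” refers to.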

So far, the largest financing in the AI industry in 2023 is Microsoft’s $10 billion investment in OpenAI in January.

According to Huxiu's tally of public data, in the United States Inflection AI may become the second-most-funded AI startup, behind only OpenAI. It is followed by Anthropic ($1.5 billion), Cohere ($445 million), Adept ($415 million), Runway ($195.5 million), Character.ai ($150 million), and Stability AI (about $100 million).

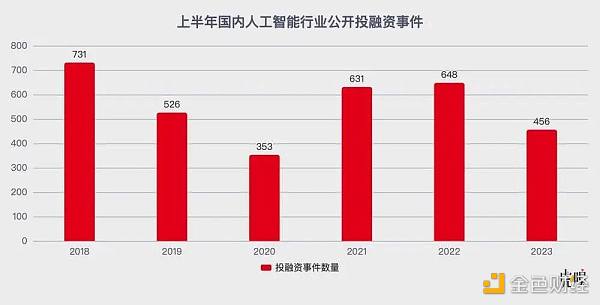

In China, there were 456 publicly disclosed investment and financing events in the domestic AI industry in the first half of 2023. For 2018 through 2022, the figures were 731, 526, 353, 631, and 648, respectively.

Public investment and financing events in the domestic artificial intelligence industry in the first half of the year

Another event that set the flywheel turning was the release of the ChatGPT API. When OpenAI first opened the ChatGPT API in March, there was near consensus inside and outside the AI industry that the industry was about to change. As more applications plug into large models, a richer ecosystem is growing on top of AI.

“The development of large models and the development of applications should be separated.” Investors always have a keen sense for such things. In the view of Chen Runze, managing director of Source Code Capital, AI follows a logic similar to the division of labor in the semiconductor industry: after the boom in large AI models, a boom in AI applications will soon follow.

Earlier this year, when Chen Runze and his colleagues visited Silicon Valley, they found that half of the projects at Y Combinator, the well-known Silicon Valley startup incubator (where OpenAI CEO Sam Altman was president for many years), had pivoted to generative AI. The enthusiasm for large models there was no less than it is in China today.

However, he also found that in the United States, both investors and entrepreneurs are more optimistic about building applications on top of large models than about building large models themselves; after all, companies such as OpenAI already lead that track. The United States also has a strong ToB application ecosystem, so more American companies are trying to build enterprise applications on large models.

Chen Runze’s observations are being borne out. Chen Ran, co-founder of the large-model service platform OpenCSG, told Huxiu that more than 90% of companies in the San Francisco Bay Area already use large-model capabilities in some form. As for China, Chen Ran expects many customers to start using them before the end of the year.

Around March this year, Chen Runze and his team began to try to find companies in China that develop applications based on large models, but he found that such companies are rare. A large amount of capital has entered the artificial intelligence industry, but if you trace the flow of these funds, you will find that most of the money is still concentrated in a few leading companies.

“Even now, out of every 10 generative AI projects, it is hard to find one or two worth investing in.” Besides Source Code Capital, Huxiu spoke with many hard-tech investors, and all of them said that although they have seen many projects, truly solid ones are rare.

This attitude on the application side is already considered normal by many industry insiders.

Yu Kai, co-founder of AISpeech, believes the seemingly lively track is mostly competition in name only, and the players fall into just two groups: “One is raising money, purely for the capital; the other is companies developing general-purpose large models, which really do need to make noise, or no one will know about them.”

Some domestic statistics are also illustrating this issue. According to data from third-party institution Xiniu, as of July 2023, there are 242 AIGC companies in China, and there have been 71 financing events in the AIGC track since January. On the other hand, there are 67 companies in the AI large model track, and there have been only 21 financing events since the release of ChatGPT.

Financing events in the AIGC track and the AI large model track since the release of ChatGPT | Data source: Xiniu Data

“There are too few good targets in the current AI market in China.” An investor told Huxiu. Good projects are too expensive, and cheap ones are not reliable. Although there have been over a hundred large AI models released in China, there are not many companies among them that have received huge financing, and they can even be counted on one hand.

Many AI investments end up being invested in people – former unicorn company founders, internet giants, and entrepreneurs with experience in large models.

| Company type | Company | Founded | Large models and related products | Funding round |
|---|---|---|---|---|
| Internet company | Baidu | 2012 | ERNIE Bot (Wenxin Yiyan) | Listed |
| Internet company | Alibaba Cloud | 2008 | Tongyi Qianwen | Listed |
| Internet company | Tencent AI Lab | 1998 | Hunyuan | Listed |
| Internet company | Huawei Cloud | 2019 | Pangu | Not listed |
| Internet company | ByteDance | 2016 | Volcano Ark | Not listed |
| Internet company | Jingdong Cloud | 2012 | Yanxi | Listed |
| Internet company | Kunlun Wanwei | 2008 | Tiangong | Listed |
| Internet company | 360 | 1992 | 360 Brain | Listed |
| AI company | SenseTime | 2014 | SenseNova (RiRiXin) | Listed |
| AI company | iFlytek | 1999 | iFlytek Spark (Xunfei Xinghuo) | Listed |
| AI company | CloudWalk (Yuncong Technology) | 2015 | Congrong | Listed |
| AI company | DataGrand (Daguan Data) | 2015 | Caozhi | Series C |
| AI company | Mobvoi | 2014 | Sequence Monkey | Series D |
| AI company | Zhipu AI | 2019 | ChatGLM | Series B |
| AI company | Lanzhou Technology | 2021 | Mengzi | Pre-A round |
| AI company | MiniMax | 2021 | Glow | Equity investment |
| AI company | Mianbi Intelligence | 2022 | VisCPM | Angel round |
| AI company | Shenyan Technology | 2022 | CPM | Equity investment |
| AI company | LingXin AI | 2021 | AI Utopia | Pre-A round |
| AI company | XianYuan Technology | 2021 | ProductGPT | Angel round |
| AI company | AISpeech | 2007 | DFM-2 | IPO terminated |
| Startup founded in 2023 | Beyond Light Years | 2023 | None | Series A |
| Startup founded in 2023 | Baichuan Intelligence | 2023 | Baichuan | Equity investment |
| Startup founded in 2023 | Zero One Everything | 2023 | None | Equity investment |

Partial Statistics of Domestic AI Large Model Related Companies

Among this year's star AI projects, Zhipu AI, LingXin AI, Shenyan Technology, and Mianbi Intelligence were all incubated out of Tsinghua University labs. Shenyan Technology and Mianbi Intelligence were both founded in 2022 and are backed by well-known scholars in the AI field.

Some AI companies founded by internet industry veterans after the large-model boom began are even younger than these Tsinghua companies: Beyond Light Years, Baichuan Intelligence, and Zero One Everything were all founded after the current craze started.

Wang Huiwen, co-founder of Meituan, founded Beyond Light Years in early 2023 and raised $50 million, one of the few financing deals in China's large-model industry at the time. Unlike Zhipu AI and Xihu Xincheng, which built on existing large-model work, Beyond Light Years started building a large model from scratch in February 2023, a task whose difficulty is easy to imagine. On June 29th, Meituan announced it would acquire all of Beyond Light Years' equity for a total consideration of approximately $233 million in cash, approximately RMB 167 million in assumed debt, and HK$1 in cash.

“At least there should be someone with a background in natural language processing, someone with practical experience in training large models, and professionals in data processing and large-scale computing clusters. If you also want to develop applications, you should also have product managers and operation talents in the corresponding fields.” Chen Runze described the standard configuration of a core team for large models in this way.

The AI Bet of Large Companies

In the past six months, news about AI from traditional Internet giants has filled the air. The investment in AI large models may seem like chasing after a hot trend, but for companies like Baidu, Alibaba, and Huawei, the bets they have placed on AI are clearly not just following the crowd.

These giants have been betting on AI for a long time, and AI is not a new topic for them. A partial tally of data from the enterprise information platform Qichacha shows that these companies began investing in AI-related businesses to varying degrees in 2018. Most of the companies they invested in work on AI applications; a few are AI chip companies, and almost none work on large models. Most of the AI-related companies these giants invested in are closely tied to their own businesses.

| Major investor | Number of invested companies | Average shareholding | Highest shareholding | Companies with 100% shareholding |
|---|---|---|---|---|
| Alibaba | 23 | 36.25% | 100% | 5 |
| Baidu Ventures | 25 | 5.50% | 15% | 0 |
| Tencent Investment | 54 | 17.54% | 100% | 2 |

Investment in AI-related companies by three major internet giants|Data source: Qichacha

In 2017, Alibaba established the DAMO Academy, which covers fields such as machine intelligence, the Internet of Things, and fintech, and brings AI capabilities to Alibaba's various business lines. In 2018, Baidu proposed its “All in AI” strategy.

What has changed is the arrival of generative AI, which looks like a turning point. For tech giants with advantages in data, computing power, and algorithms, AI is no longer only about enabling specific scenarios; it is also about serving as infrastructure. Generative AI has, after all, started a division of labor within the AI industry.

The four major cloud providers, Baidu, Alibaba, Huawei, and Tencent, have all announced their own AI strategies, each with its own focus.

Over the past six months, the giants have released their own large-model products one after another. Baidu and Alibaba were not late to the field: both began working on large models around 2019.

Baidu has been developing pre-trained models since 2019, releasing the knowledge-enhanced ERNIE series. Work on Alibaba’s Tongyi Qianwen model also began in 2019. Beyond Baidu's and Alibaba's general-purpose models, Tencent Cloud released its research progress on industry large models on June 19th, and on July 7th Huawei Cloud released its Pangu 3.0 industry large model.

These focuses also correspond to their overall businesses, cloud strategies, and long-term layouts in the AI market.

Over the past five years, the profitability of Baidu's core business has fluctuated sharply. Baidu recognized the problems facing its domestic search advertising business very early and responded by investing heavily in AI to find new opportunities. Over the years, Baidu has not only recruited industry heavyweights such as Andrew Ng and Lu Qi as executives but also shown more enthusiasm for autonomous driving than the other major companies. Given that focus on AI, Baidu was bound to invest heavily in this round of large-model competition.

Alibaba has also shown great enthusiasm for general-purpose large models. Alibaba Cloud has long carried high expectations, and Alibaba hopes to build the group's second growth curve through technology. With competition in e-commerce intensifying and market growth slowing, the new AI opportunities emerging from the cloud give Alibaba Cloud a good chance to push further in the domestic cloud market.

Compared with Baidu and Alibaba, Tencent Cloud has chosen to prioritize industry-specific large models, while Huawei Cloud has publicly stated that it will only focus on industry-specific large models.

For Tencent, the main business has been growing steadily in recent years. In the uncertain stage of the future of general large models, Tencent’s investment in AI large models is relatively cautious. Ma Huateng mentioned large models in a previous earnings conference call and said, “Tencent is not eager to show unfinished products. The key is to solidly do the underlying algorithms, computing power, and data, and even more importantly, to land the scenarios.”

On the other hand, from the perspective of the Tencent group as a whole, Tencent currently has four AI Labs and last year released the trillion-parameter Hunyuan large model. Tencent Cloud's move into industry-specific large models looks more like a hedge, a way of “not putting all its eggs in one basket.”

Huawei has always bet heavily on R&D: over the past 10 years, its total R&D spending has exceeded 900 billion yuan. However, because of the obstacles its smartphone business has run into, Huawei's overall strategy in many areas of technical R&D may also be undergoing adjustment.

The smartphone business is the largest outlet for Huawei's consumer-facing technology. If the smartphone business cannot carry general-purpose large models, Huawei has much less reason to develop them. For Huawei, betting on industry-specific large models that can be deployed and monetized quickly looks like the optimal move in this AI game. As Huawei Cloud CEO Zhang Ping’an put it, “Huawei doesn’t have time to write poetry.”

However, for tech giants, no matter how big the bet is, as long as they can get it right, they can seize the market share of infrastructure ahead of time and gain a say in the era of artificial intelligence.

Looking for nails with a hammer

For commercial companies, all decisions still come down to economic considerations.

The development of AI large models requires a significant investment, but more and more founders of companies and investors believe that this is a “necessary investment,” even if there is currently no visible return.

As a result, many artificial intelligence companies that emerged in the previous wave of AI have seen new opportunities after a long period of silence.

“Three years ago, everyone said that GPT-3 offered a possible path to artificial general intelligence.” Li Zhifei began researching GPT-3 with a team in 2020, at a turning point in Mobvoi's development, hoping to find new business opportunities. After a period of research, however, the large-model project was discontinued, partly because the model was not large enough and partly because they could not find a commercial use case.

After ChatGPT arrived at the end of 2022, however, Li Zhifei seemed to get a shot in the arm: like everyone else, he saw new opportunities in large models. In April of this year, Mobvoi released its self-developed large model, “Sequence Monkey,” and at the end of May it submitted a prospectus to the Hong Kong Stock Exchange, preparing to sprint for a listing with the new model in hand.

Another long-established AI company is following suit. In July of last year, AISpeech applied for an IPO on the Science and Technology Innovation Board (STAR Market), but its application was rejected by the Listing Review Committee in May of this year.

Yu Kai noted that even OpenAI, at the GPT-2 stage, trained for nearly a year on V100 GPUs through Microsoft, and the A100 is several times more powerful. In its early stage of building up large models, AISpeech likewise trained on more economical cards. The cost, of course, is time.

Compared to self-developed large-scale models, some application-oriented companies have their own choices.

Zhang Wang (pseudonym), president of an online education company, told Huxiu that over the past six months they have spared no effort exploring application scenarios for large models. They quickly ran into many problems in deployment, however, such as cost and investment. The company's R&D team has 50 to 60 people; since it began researching large models, it has expanded the team and recruited some large-model talent. Zhang Wang said that talent for foundational models is expensive.

Zhang Wang never considered developing a large model from scratch, and because of concerns about data security and model stability, he does not plan to build applications directly on third-party APIs. Instead, his team starts from open-source large models and trains them on its own data. This is also how many application companies work today: on top of a large model, they build a smaller industry-specific model with their own data. Zhang Wang's team started with a 7-billion-parameter model, moved up to 10 billion, and is now trying a 30-billion-parameter model. They have also found that as the amount of data grows, a new version of the model can turn out worse than the previous one, and the parameters then have to be adjusted one by one. “This is a necessary detour,” Zhang Wang said.

Zhang Wang told Huxiu that the R&D team's brief is to explore large-model scenarios grounded in the company's business.

This is a method of using a “hammer” to find a “nail”, but it is not easy.

“The biggest challenge right now is finding suitable scenarios. In many scenarios, even using AI does not noticeably improve results,” Zhang Wang said. In the classroom, for example, large AI models could power some interactions, such as reminding students to attend class, answering questions, and tagging, but when his team tried them, the accuracy was poor and the models' comprehension and output fell short. After a period of trials, the team decided to shelve AI for this scenario for now.

Another internet service provider, Xiao’e Tong, also began exploring related business as soon as large AI models took off. Xiao’e Tong's main business is providing online merchants with digital operations tools covering marketing, customer management, and monetization.

Fan Xiaoxing, co-founder and COO of Xiao’e Tong, told Huxiu that in April of this year, as more and more applications were being built on generative AI, Xiao’e Tong saw the potential of the technology. “Take Midjourney: the efficiency gains generative AI brings to image generation are obvious.” Fan Xiaoxing said the company has set up a dedicated internal AI research business line to find use cases related to its own business.

Fan Xiaoxing said that when integrating large models into the business, her main considerations are cost and efficiency. “The investment cost of large models is quite high,” she said.

The “nail” is easy to find in the internet industry; the real difficulty of deploying AI lies in sectors such as traditional industry and manufacturing.

Yu Kai told Huxiu that this AI wave is still advancing in a spiral, wave-like way, and the core difficulty of industrial deployment has not changed; it has only taken on a new appearance. In that sense, the two AI waves follow the same rules, and the best approach is to learn from history: “Don’t make the same mistakes as the last AI wave.”

Although many vendors have called for putting “industry first” when deploying large AI models, many scenarios in the physical economy are genuinely hard to match with today's large models. For example, in the AI visual inspection systems used in some industrial inspection scenarios, even though the models needed are well under 1 billion parameters, there is still too little initial training data.

Take a simple wind power inspection scenario. A wind farm may carry out as many as 70,000 inspections, yet a given type of crack may appear only once, far too little data for a machine to learn from. Ke Liang, director of intelligent wind power hardware products at Expand Intelligence, told Huxiu that the robot for inspecting wind turbine blades still cannot analyze blade cracks with 100% accuracy because too little training and analysis data is available. Reliable, fully automatic inspection and identification will still require a large accumulation of data and manual analysis.

However, where industrial data has been well accumulated, large AI models can already help manage complex libraries of 3D parts. A domestic aircraft manufacturer has deployed a parts-library assistant built on 4Paradigm's “Shi Shuo” large model. Among more than 100,000 3D-modeled parts, it can search for models using natural language or using another 3D model as the query, and can even assemble 3D models automatically. These tasks would otherwise take multiple steps across the CAD and CAE tools that are critical to manufacturing.

Today’s large models face the same deployment problem that AI faced a few years ago: they still have to look for nails with a hammer. Some optimistically believe that today’s hammer is completely different from those of the past, but when it comes to actually paying for AI, the picture is less rosy.

Bloomberg's Markets Live Pulse survey, released on July 30th, shows that of the 514 investors surveyed, about 77% plan to increase or maintain their holdings of technology stocks over the next six months, and fewer than 10% believe the technology industry faces a serious bubble. Yet even among these investors who are optimistic about tech, only half are open to AI technology.

50.2% of the respondents stated that they are not currently planning to pay for AI tools, and most investment companies do not have plans to widely apply AI to trading or investment.

The Sellers of Shovels

“During the 1848 California gold rush, many of the people who went to dig for gold died, but those who sold spoons and shovels always made money,” Lu Qi said in a speech.

Gao Feng (pseudonym) wants to be one of these “sellers of shovels”, or more accurately, someone who can “sell good shovels in China”.

As a chip researcher, Gao Feng spends most of his research time on AI chips. In the past month or two, however, he has felt a sense of urgency: he wants to start a CPU company based on the RISC-V architecture. Sitting in a teahouse, he described that future to Huxiu.

However, in both the chip industry and the wider tech community, starting a CPU company from scratch looks like a “fantasy.”

As the flywheel of large AI models began spinning rapidly, the computing power behind it could no longer keep pace with the players on this track. Surging demand for computing power made Nvidia the biggest winner. But GPUs are not the only source of computing power: CPUs, GPUs, and various new AI chips together make up the main supply of compute for large models.

“You can think of the CPU as the downtown and the GPU as a newly developed suburb,” Gao Feng said. The CPU and AI chips are connected by a channel called PCIe: data is passed to the AI chip, and the AI chip sends the data back to the CPU. As the volume of data in large models grows, this channel becomes congested and throughput stalls, so the road has to be widened, and only the CPU determines how wide the channel is and how many lanes it has.
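To make the “lanes” metaphor concrete, the sketch below estimates the one-direction bandwidth of a PCIe link from its generation and lane count. It assumes the published per-lane signalling rates (8, 16, and 32 GT/s for PCIe 3.0, 4.0, and 5.0) and the 128b/130b line encoding those generations use, and it ignores packet and protocol overhead, so real-world throughput is somewhat lower. The function name is illustrative, not from any library.

```python
# Per-lane signalling rate in GT/s for PCIe generations 3.0 to 5.0.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
# PCIe 3.0 through 5.0 all use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Approximate usable bandwidth in GB/s, one direction, ignoring protocol overhead."""
    bits_per_s_per_lane = GT_PER_S[generation] * 1e9 * ENCODING_EFFICIENCY
    bytes_per_s = bits_per_s_per_lane * lanes / 8  # 8 bits per byte
    return bytes_per_s / 1e9


if __name__ == "__main__":
    for gen in (3, 4, 5):
        for lanes in (4, 8, 16):
            print(f"PCIe {gen}.0 x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.1f} GB/s")
```

Under these assumptions an x16 link gives roughly 15.8 GB/s on PCIe 3.0, 31.5 GB/s on 4.0, and 63.0 GB/s on 5.0. Doubling the lane count or moving up one generation doubles the bandwidth, which is why the number of lanes a CPU provides matters for feeding AI chips.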

This means that even if China makes breakthroughs in AI chips for large models, the all-important CPU will still be hard to crack. Even as more and more AI training work is handed to GPUs, the CPU remains the critical “manager.”

Part of domestically produced chips exhibited in the 2023 WAIC large-scale model exhibition area

Since Intel produced the world’s first commercial microprocessor in 1971, it has dominated the general-purpose server and PC markets together with AMD. Intel has built a formidable moat of intellectual property, technical know-how, economies of scale, and software ecosystem, and this moat has never weakened.

Abandoning the x86 and ARM architectures entirely to develop a fully independent CPU on a new architecture is a venture where, as the saying goes, there are “99 deaths for one survival.” Loongson, built on the MIPS instruction set, has been on this path for more than 20 years, to say nothing of RISC-V, an open-source architecture that has yet to be fully developed and proven.

An instruction set is like a plot of land, and designing chips on it is like buying the land to build a house. The x86 architecture is closed and open only to chips in Intel’s ecosystem; the ARM architecture requires paying IP licensing fees; RISC-V is free and open source.

Industry and academia have already spotted this opportunity.

In 2010, a research team led by two professors at the University of California, Berkeley, started from scratch to develop a new instruction set: RISC-V. It is completely open-source, reflecting their belief that a CPU instruction set should not belong to any single company.

“RISC-V may be the dawn for Chinese CPUs,” Gao Feng said. In 2018, he incubated an AI chip company within a research institute because, as he put it, he didn’t want to miss the opportunity of AI development. This time he again wants to seize the moment, and his entry point is RISC-V. In an era of large models and domestic substitution, this need has become even more urgent. After all, if Chinese companies one day can no longer use the A100, what will they do?

“For RISC-V CPUs to replace ARM and x86, they need stronger performance, and the people who build commercial operating systems on Linux need to get involved in the code development,” Gao Feng said.

Gao Feng is not the first to recognize this opportunity. A chip industry investor told Huxiu that he had discussed with the founder of a chip startup the opportunity of building GPUs on the RISC-V architecture. Some Chinese companies already make GPUs based on RISC-V, but the ecosystem remains their biggest challenge.

“Linux has shown that this path can succeed,” Gao Feng said. The open-source Linux operating system gave rise to open-source companies such as Red Hat, and many cloud services are built on Linux. “We need enough developers,” he said, proposing that as the way forward. The path is difficult, but if it succeeds, the future will be bright.

The flywheel is spinning too fast

Gao Feng is not the only one feeling the urgency of the “stress response” triggered by large models.

A co-founder of a domestic AI large-model company told Huxiu that earlier this year the company briefly launched a conversational large model. However, as ChatGPT grew in popularity, the relevant authorities stepped up their scrutiny of large-model security and issued the company a number of rectification requirements.

“Until there are clear regulatory policies, we won’t rush to open our products to ordinary users; we are focusing mainly on B2B,” said Zhang Chao, CEO of Zuoshou Doctor. He believes that opening generative AI products to consumer (C-end) users before the “Management Measures” are introduced is risky. “At this stage, we are continuously iterating and optimizing while closely following policies and regulations to ensure the technology is secure.”

“Regulatory measures for generative AI are still unclear, so the products and services of large-model companies are generally low-key.” In June, a digital technology supplier released an application built on a general-purpose large model developed by a cloud vendor. At the launch event, the company’s technical director told Huxiu that the cloud vendor required them to keep this strictly confidential: disclosing which large model they used would be treated as a breach of contract. As for why such cases must be kept confidential, the director believes a large part of the reason is to avoid regulatory risk.

With vigilance toward AI rising worldwide, no market can tolerate a regulatory “vacuum.”

On July 13, the Cyberspace Administration of China and six other departments officially released the “Interim Measures for the Management of Generative Artificial Intelligence Services” (hereinafter the “Management Measures”), which take effect on August 15, 2023.

“With the introduction of the ‘Management Measures,’ policy will shift from being problem-oriented to being goal-oriented, which is what we want,” said Wang Yuwei, a partner at Guantao Law Firm. He believes the new rules emphasize “channeling” rather than “blocking.”

Browsing U.S. risk-management repositories is part of Wang Yuwei’s daily routine. “We provide risk-control and compliance solutions for commercial applications of large models such as GPT in specific industries, and we are building a compliance governance framework,” he said.

U.S. AI giants are lining up to make pledges to Washington. On July 21, Google, OpenAI, Microsoft, Meta, Amazon, the AI startup Inflection, and Anthropic, seven of the most influential AI companies in the United States, signed voluntary commitments at the White House. They pledged to let independent security experts test their systems before public release and to share information about the safety of their systems with the government and academia. They also committed to developing “watermark” mechanisms that alert the public when images, videos, or text have been generated by AI.

Seven U.S. AI giants sign AI commitments at the White House

Earlier, at a U.S. congressional hearing, OpenAI co-founder and CEO Sam Altman said that safety standards need to be established for AI models, including evaluations of their potential dangers. For example, models would have to pass specific safety tests, such as whether they can “self-replicate” and “self-exfiltrate into the wild.”

Perhaps even Sam Altman did not expect the wheel of AI to turn so fast that it risks spinning out of control.

“At first, we didn’t realize how urgent this was,” Wang Yuwei said, until more and more company founders came to him for advice. He sensed that this wave of AI is fundamentally different from those that came before.

At the beginning of this year, Wang Yuwei was approached by a text-to-image company that was among the first to build on large models. It wanted to bring its business into China and therefore sought to understand data compliance in this area. Soon afterward, Wang Yuwei saw more and more inquiries of this kind, and the more striking change was that it was no longer companies’ legal departments that came to consult, but the founders themselves. “With the emergence of generative AI, the existing regulatory logic no longer applies,” Wang Yuwei said.

Wang Yuwei, who has worked in big-data law for many years, found that generative AI differs fundamentally from the previous wave of AI. The earlier wave centered on algorithmic recommendation and facial recognition, each targeting a specific scenario with small models, and the legal issues involved were mainly intellectual property and privacy protection. In the generative AI ecosystem, by contrast, different roles, such as companies that provide foundation models, companies that build applications on top of them, and cloud service providers that store the data, are each subject to different regulations.

There is already a consensus about the risks large models bring, and the industry understands that commercial applications will inevitably amplify them. To keep their businesses running, companies must pay close attention to regulation.

The challenge, Wang Yuwei said, is “how to find a path that regulates well without hindering the industry’s development.”

Conclusion

For the industry as a whole, this wave is deepening discussion of the technology while also prompting more profound reflection.

As AI gradually dominates the technology industry, how can we ensure the fairness, equity, and transparency of technology? When leading companies tightly control technology and capital flows, how can we ensure that small and medium-sized enterprises and startups are not marginalized? The development and application of large models contain tremendous potential, but will blindly following the trend cause us to neglect other innovative technologies?

“In the short term, AI large models are being severely overestimated. But in the long run, AI large models are being severely underestimated.”

The past six months have brought an AI frenzy. For Chinese startups and tech giants alike, keeping a clear head and making long-term plans and investments amid the overheated market will be the real test of their strength and foresight.
