If artificial intelligence has self-awareness, how can scientists discover it?

Author: Mariana Lenharo, Translation: Yuan Universe Heart

The idea of artificial intelligence gaining consciousness has long been a staple of science fiction. Think of the 1968 film “2001: A Space Odyssey”, in which the supercomputer HAL 9000 turns into a villain. With the rapid development of artificial intelligence, this possibility is becoming less and less far-fetched, and leaders in the field have even begun to acknowledge it.

Last year, Ilya Sutskever, the Chief Scientist of OpenAI, the company behind the chatbot ChatGPT, tweeted that some of the most advanced artificial intelligence networks might have “a slight level of consciousness”.

Many researchers argue that artificial intelligence systems have not yet reached a level of consciousness. But the evolution of artificial intelligence has led them to ponder: how do we know if artificial intelligence has consciousness?

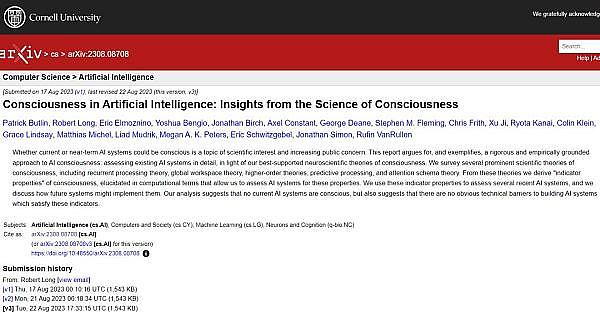

To answer this question, a team of 19 neuroscientists, philosophers, and computer scientists has proposed a set of criteria; a system that meets them would have a high likelihood of being conscious. The team published its preliminary guide earlier this week on the arXiv preprint repository. It has not yet undergone peer review.

Robert Long, a philosopher at the Center for AI Safety in San Francisco, California, and one of the co-authors, said they undertook this work because “there seems to be a lack of detailed, empirically grounded, thoughtful discussion on the consciousness of artificial intelligence.”

The research team states that the inability to identify whether an artificial intelligence system is conscious could have significant ethical implications. Megan Peters, a neuroscientist and co-author of the study, said that if something is labeled as “conscious,” it would “greatly change the way we, as humans, interact with it.”

Long adds that, to his knowledge, companies building advanced artificial intelligence systems have not put enough effort into evaluating their models for consciousness or planning for what to do if it arises. He said, “Although I have heard the heads of some leading labs say that artificial intelligence consciousness or sentience is worth considering, I don’t think it’s enough.”

Nature contacted Microsoft and Google, two of the major technology companies driving artificial intelligence development. A Microsoft spokesperson stated that the company’s AI development focuses on responsibly helping humans become more productive, rather than on replicating human intelligence.

The spokesperson said that, since the release of GPT-4 (the latest publicly released version of ChatGPT), it has been clear that “we are exploring how to harness the full potential of artificial intelligence for the benefit of society, which requires new approaches to evaluate the capabilities of these artificial intelligence models.” Google did not respond.

01. What is Consciousness?

One challenge in studying artificial intelligence consciousness is defining what consciousness is. Peters states that, for the purposes of their research, the authors focus on “phenomenal consciousness,” which refers to subjective experience: the sensations of being a human, an animal, or an AI system (if any of them is shown to have consciousness).

There are many theories based on neuroscience that describe the biological basis of consciousness. However, there is currently no consensus on which one is “correct.” Therefore, the authors adopt a range of theories to create their framework. Their idea is that if the functions of an AI system align with multiple aspects of these theories, it is more likely to have consciousness.

They believe that this approach to evaluating consciousness is better than simply conducting behavioral tests, such as asking ChatGPT if it is conscious or challenging it and observing its response. This is because AI systems have already made incredible progress in mimicking humans.

Anil Seth, a neuroscientist and director of the Sackler Centre for Consciousness Science at the University of Sussex, believes that the team’s approach of adopting rigorously tested theories is a good choice. However, he states, “We still need more precise, thoroughly tested theories of consciousness.”

02. A Theory-Intensive Approach

To establish their criteria, the authors assume that consciousness is related to how systems process information, regardless of whether they are composed of neurons, computer chips, or other materials. This approach is known as computational functionalism. They also assume that neuroscience-based theories of consciousness, which are derived from studies of human and animal brains using brain scans and other techniques, can be applied to artificial intelligence.

Based on these assumptions, the team selected six theories and extracted a series of consciousness indicators from them.

One of them is the Global Workspace Theory, which claims that humans and other animals use many specialized systems (also known as modules) to perform cognitive tasks such as vision and hearing. These modules work independently but in parallel, and integrate into a single system to share information.

Long states that “by observing the architecture of the system and the way information flows within it,” one can assess whether a particular AI system exhibits the indicators derived from this theory.
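As a rough illustration of this checklist idea, the Python sketch below tallies how many indicators a system satisfies under each theory. It is only a sketch under stated assumptions: the article does not say the authors compute any score, the indicator wording is paraphrased from the description of Global Workspace Theory above, and `Indicator`, `tally_indicators`, and “Placeholder Theory B” are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    theory: str       # theory the indicator is derived from
    description: str  # architectural property to look for


# Hypothetical checklist. Only Global Workspace Theory is named in the
# article; the second theory is a placeholder, not one of the paper's six.
INDICATORS = [
    Indicator("Global Workspace Theory",
              "specialized modules that operate in parallel"),
    Indicator("Global Workspace Theory",
              "a single shared system through which modules exchange information"),
    Indicator("Placeholder Theory B",
              "placeholder indicator (hypothetical)"),
]


def tally_indicators(satisfied):
    """Return {theory: (indicators met, indicators listed)}.

    `satisfied` is the set of indicator descriptions that a reviewer judged
    present after inspecting the system's architecture and information flow.
    """
    result = {}
    for ind in INDICATORS:
        met, total = result.get(ind.theory, (0, 0))
        result[ind.theory] = (met + int(ind.description in satisfied), total + 1)
    return result


if __name__ == "__main__":
    observed = {"specialized modules that operate in parallel"}
    for theory, (met, total) in tally_indicators(observed).items():
        print(f"{theory}: {met}/{total} indicators met")
```

The tally is deliberately kept per theory rather than collapsed into one number, since the article notes there is no consensus on which theory is correct.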

Seth is impressed with the team’s proposal. He says, “It’s very thoughtful, not grandiose, and clearly states its assumptions. Although I disagree with some of the assumptions, it doesn’t matter because it’s possible that I’m wrong.”

The authors state that the paper is far from the final word on how to evaluate AI systems for consciousness, and they hope that other researchers can help refine their methodology. However, these criteria can already be applied to existing AI systems.

For example, the report evaluated large language models such as ChatGPT and found that these systems may possess some of the consciousness indicators associated with Global Workspace Theory. However, this work does not imply that any existing artificial intelligence system is a strong candidate for consciousness, at least not currently.
