Comprehensive Explanation of the Shoal Framework: How to Reduce Bullshark Latency on Aptos?

Original source: Aptos Labs

Translation: Aptos Global

https://arxiv.org/pdf/2306.03058.pdf

Overview

1) Aptos Labs has solved two critical open problems in DAG BFT, greatly reducing latency and, for the first time, eliminating the need for timeouts in a deterministic practical protocol. Overall, Shoal improves Bullshark latency by 40% in the fault-free case and by 80% in the presence of faults.

2) Shoal is a framework that enhances any Narwhal-based consensus protocol (e.g., DAG-Rider, Tusk, Bullshark) with pipelining and leader reputation. Pipelining reduces DAG ordering latency by introducing an anchor in every round, while leader reputation further improves latency by ensuring that anchors are associated with the fastest validators. Additionally, leader reputation enables Shoal to leverage asynchronous DAG construction to eliminate timeouts in all scenarios. This allows Shoal to provide a property we call universal responsiveness, which subsumes the optimistic responsiveness that is usually required.

3) Our technique is very simple: it runs multiple instances of the underlying protocol one after another. Thus, when instantiated with Bullshark, we get a group of “sharks” running a relay race.

Motivation

In the pursuit of high-performing blockchain networks, people have long focused on reducing communication complexity. However, this approach has not led to significant improvements in throughput. For example, Hotstuff, implemented in early versions of Diem, achieved only 3500 TPS, far below our goal of 100k+ TPS.

However, recent breakthroughs have come from the recognition that data propagation is the primary bottleneck of leader-based protocols and can benefit from parallelization. The Narwhal system separates data propagation from core consensus logic and proposes an architecture where all validators propagate data simultaneously, while the consensus component only orders a small amount of metadata. The Narwhal paper reports throughput of 160,000 TPS.

In a previous article, we introduced Quorum Store, our Narwhal implementation that separates data propagation from consensus, and described how we use it to scale our current consensus protocol, Jolteon. Jolteon is a leader-based protocol that combines Tendermint’s linear fast path with PBFT-style view changes, reducing Hotstuff latency by 33%. However, it is clear that leader-based consensus protocols cannot fully leverage Narwhal’s throughput potential. Although data propagation is separated from consensus, the leader in Hotstuff/Jolteon remains a bottleneck as throughput increases.

Therefore, we decided to deploy Bullshark, a consensus protocol with zero communication overhead, on top of the Narwhal DAG. Unfortunately, the DAG structure that gives Bullshark its high throughput comes with a 50% latency cost compared to Jolteon.

In this article, we introduce how Shoal achieves a substantial reduction in Bullshark latency.

DAG-BFT Background

Let’s start by understanding the relevant background for this article. For a detailed description of Narwhal and Bullshark, please refer to the DAG meets BFT post.

https://decentralizedthoughts.github.io/2022-06-28-DAG-meets-BFT/

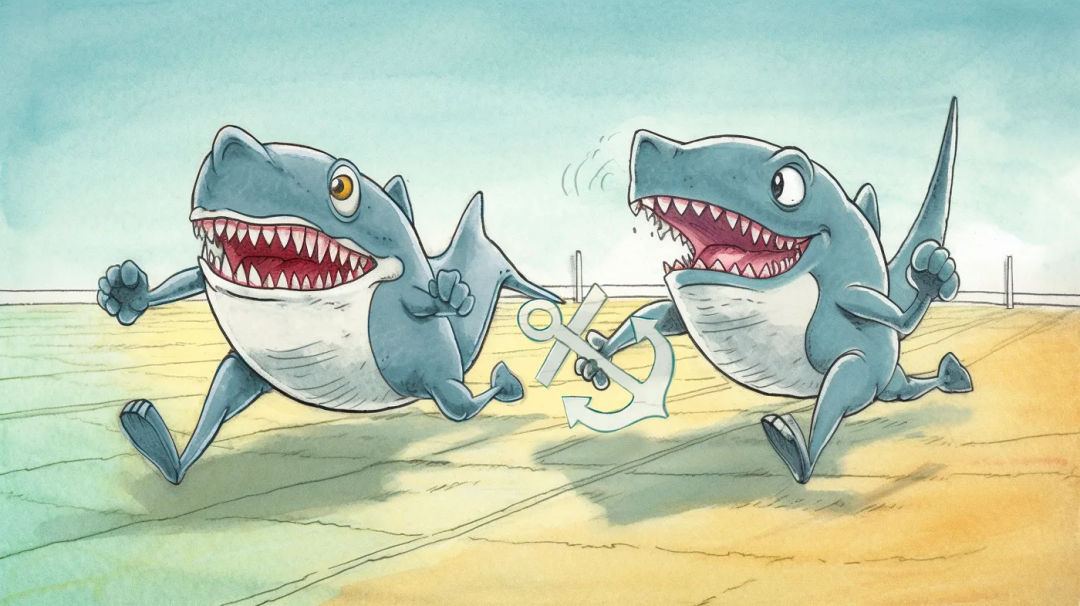

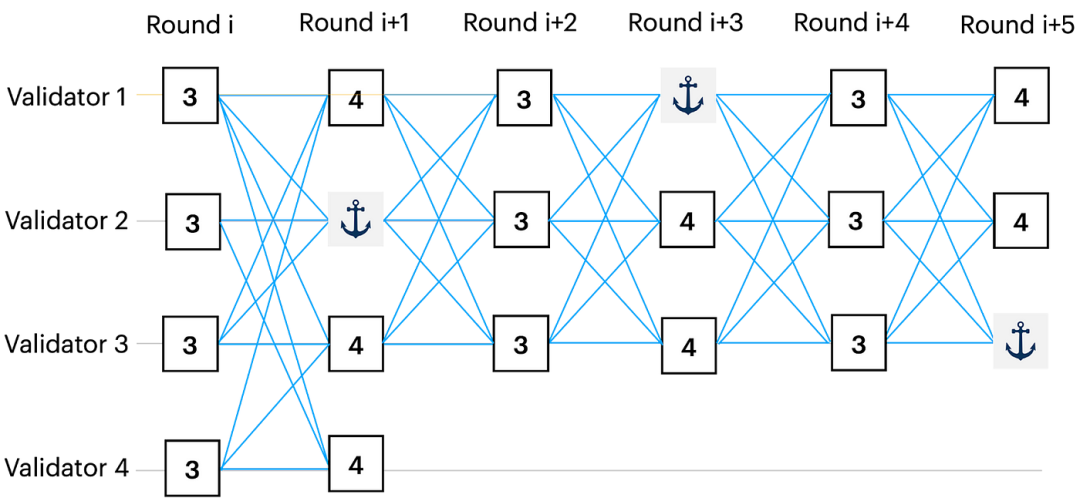

Each vertex in the Narwhal DAG is associated with a round number. To enter round r, a validator must first acquire n-f vertices belonging to round r-1. Each validator can broadcast one vertex per round, and each vertex references at least n-f vertices from the previous round. Due to network asynchrony, different validators may observe different local views of the DAG at any given time. The following is an illustration of a possible local view:

Figure 1: The causal history of vertices identified by validator 2 in round 2 is highlighted in green

A key property of the DAG is non-equivocation: if two validators have the same vertex v in their local views of the DAG, then they have exactly the same causal history of v.
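The round rule and the notion of causal history described above can be sketched in a few lines of Python. This is only an illustrative model, not Narwhal's actual data structures: the `Vertex` class, the `(round, author)` key, and the helper names `can_enter_round` and `causal_history` are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vertex:
    round: int                        # round number of this vertex
    author: int                       # id of the validator that broadcast it
    parents: frozenset = frozenset()  # (round, author) keys of previous-round vertices

    @property
    def key(self):
        return (self.round, self.author)


def can_enter_round(dag, r, n, f):
    """True once the local view holds at least n-f vertices of round r-1."""
    return sum(1 for (rnd, _author) in dag if rnd == r - 1) >= n - f


def causal_history(dag, key):
    """Keys of all vertices reachable from `key` via parent edges, including `key`."""
    seen, stack = set(), [key]
    while stack:
        k = stack.pop()
        if k in seen or k not in dag:
            continue
        seen.add(k)
        stack.extend(dag[k].parents)
    return seen


# Toy local view with n = 4 validators and f = 1 (hypothetical values).
dag = {}
for author in range(4):
    v = Vertex(round=1, author=author)
    dag[v.key] = v
v = Vertex(round=2, author=2, parents=frozenset({(1, 0), (1, 1), (1, 2)}))
dag[v.key] = v

print(can_enter_round(dag, 2, n=4, f=1))    # True
print(sorted(causal_history(dag, (2, 2))))  # [(1, 0), (1, 1), (1, 2), (2, 2)]
```

Because a vertex fixes its parent set when it is created, any two validators that hold the same vertex compute the same set from `causal_history`, which is the property the ordering logic relies on.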

Total Order

A total order of all vertices in the DAG can be achieved without any additional communication overhead. To do so, validators in DAG-Rider, Tusk, and Bullshark interpret the structure of the DAG as a consensus protocol in which vertices represent proposals and edges represent votes.

Although the quorum intersection logic on the DAG structure differs between them, all existing Narwhal-based consensus protocols have the following structure:

1) Predetermined anchors, where every few rounds (for example, two rounds in Bullshark), there is a pre-determined leader whose vertex is the anchor;

2) Ordering anchors, where validators independently but deterministically decide which anchors to order and which to skip;

3) Order causal histories, where validators process their ordered anchor lists one by one, and for each anchor, they sort all previously unordered vertices in its causal history according to some deterministic rules.
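Step (3) above can be sketched as follows. This is a minimal illustration, assuming the DAG is given as a dictionary from `(round, author)` keys to parent keys and that the "deterministic rule" is simply sorting by `(round, author)`; the real protocols may use a different rule, and the function names are hypothetical.

```python
def causal_history(parents, key):
    """Keys of all vertices reachable from `key` via parent edges, including `key`."""
    seen, stack = set(), [key]
    while stack:
        k = stack.pop()
        if k in seen or k not in parents:
            continue
        seen.add(k)
        stack.extend(parents[k])
    return seen


def order_vertices(parents, ordered_anchors):
    """Process ordered anchors one by one and append their not-yet-ordered history."""
    delivered, total_order = set(), []
    for anchor in ordered_anchors:
        # Assumed deterministic rule: sort new vertices by (round, author).
        new = sorted(causal_history(parents, anchor) - delivered)
        total_order.extend(new)
        delivered.update(new)
    return total_order


# Toy DAG: keys are (round, author); values are the referenced parent keys.
parents = {
    (1, 0): set(), (1, 1): set(), (1, 2): set(), (1, 3): set(),
    (2, 0): {(1, 0), (1, 1), (1, 2)},
    (2, 1): {(1, 1), (1, 2), (1, 3)},
    (2, 2): {(1, 0), (1, 1), (1, 2)},
    (3, 1): {(2, 0), (2, 1), (2, 2)},
}
# Suppose the anchors of rounds 1 and 3 were both ordered.
order = order_vertices(parents, [(1, 0), (3, 1)])
print(order[0], order[-1])  # (1, 0) (3, 1)
```

Since every validator that holds an anchor holds the same causal history for it, running this procedure on the same ordered anchor list yields the same total order everywhere.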

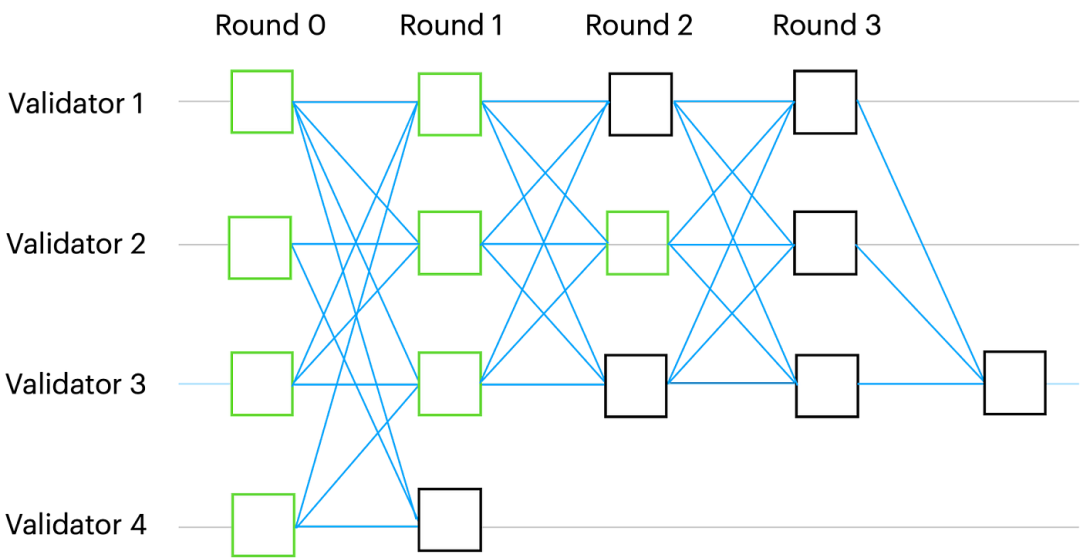

Figure 2: A schematic illustration of a possible local view of the DAG in the Bullshark protocol. In this example, the red and yellow anchors are ordered, while the green one (not in the DAG) is skipped. Thus, to order the DAG, validators deterministically order the causal history of the red anchor first, followed by that of the yellow anchor.

The key to satisfying safety is to ensure that in step (2) above, all honest validators create lists of ordered anchors that share the same prefix. In Shoal, we make the following observation about all of the above protocols:

All validators agree on the first ordered anchor.

Bullshark Latency

The latency of Bullshark depends on the number of rounds between ordered anchors in the DAG. While the partially synchronous version of Bullshark, which is the most practical one, has better latency than the asynchronous version, it is far from optimal.

Problem 1: Average block latency. In Bullshark, every even round has an anchor, and the vertices in every odd round are interpreted as votes. In the common case, it takes two rounds of the DAG to order an anchor. However, the vertices in the causal history of an anchor need additional rounds, because they must wait for that anchor to be ordered. In the common case, vertices in odd rounds require three rounds, while non-anchor vertices in even rounds require four rounds (see Figure 3).

Figure 3: In the common case, the anchor in round i+1 takes two rounds to be ordered. Vertices in round i are ordered at the same time, so their latency is three rounds. However, non-anchor vertices in round i+1 must wait for the next anchor (in round i+3) to be ordered, so their ordering latency is four rounds.

Problem 2: Fault case latency. The above latency analysis applies to the fault-free case. If, on the other hand, the leader of a round fails to broadcast its anchor fast enough, the anchor cannot be ordered (and is thus skipped), and all unordered vertices in the previous rounds must wait for the next anchor to be ordered. This significantly reduces performance in geo-replicated networks, especially since Bullshark uses timeouts to wait for leaders.

Shoal Framework

Shoal addresses both of these latency issues by enhancing Bullshark (or any other Narwhal-based BFT protocol) with pipelining, allowing for an anchor in each round and reducing the latency of all non-anchor vertices in the DAG to three rounds. Shoal also introduces a zero-overhead leader reputation mechanism in the DAG, which biases selection towards fast leaders.
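To make the round counts concrete, here is a small back-of-the-envelope calculation of the average ordering latency in DAG rounds for the common case. It is a sketch under stated assumptions: all n validators contribute one vertex per round, no anchor is skipped, and an anchor in Shoal takes two rounds to order as in Bullshark. It counts rounds only and says nothing about wall-clock latency.

```python
from fractions import Fraction


def bullshark_avg_rounds(n):
    """Average over one vote round and one anchor round with n vertices each.

    Vote-round vertices: 3 rounds; the anchor: 2 rounds;
    non-anchor vertices in the anchor round: 4 rounds.
    """
    return Fraction(3 * n + 2 + 4 * (n - 1), 2 * n)


def shoal_avg_rounds(n):
    """With an anchor in every round: the anchor takes 2 rounds, all others 3."""
    return Fraction(2 + 3 * (n - 1), n)


n = 50  # hypothetical validator count
print(float(bullshark_avg_rounds(n)))  # 3.48
print(float(shoal_avg_rounds(n)))      # 2.98
```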

Challenges

In the context of DAG protocols, pipelining and leader reputation have been considered hard problems for the following reasons:

1) Previous pipelining attempts tried to modify the core Bullshark logic, which seemed inherently impossible.

2) Leader reputation, introduced in DiemBFT and formalized in Carousel, is the idea of dynamically selecting future leaders (anchors in Bullshark) based on validators’ past performance. In those protocols, disagreement on leader identity does not violate safety. In Bullshark, however, it can lead to completely different orderings. This is the heart of the problem: selecting round anchors dynamically and deterministically is required to solve consensus, yet validators need to agree on the ordered history in order to select future anchors.

As evidence of the difficulty of the problem, we note that Bullshark implementations, including those currently in production, do not support these features.

Protocol

Despite these challenges, as the saying goes, the solution turned out to be hidden in simplicity.

In Shoal, we rely on the ability to perform local computation on the DAG, and we implement the ability to store and re-interpret information from earlier rounds. Building on the core insight that all validators agree on the first ordered anchor, Shoal composes multiple Bullshark instances one after the other to pipeline them, such that (1) the first ordered anchor is the switching point between instances, and (2) the causal history of the anchor is used to compute leader reputation.

Pipelining

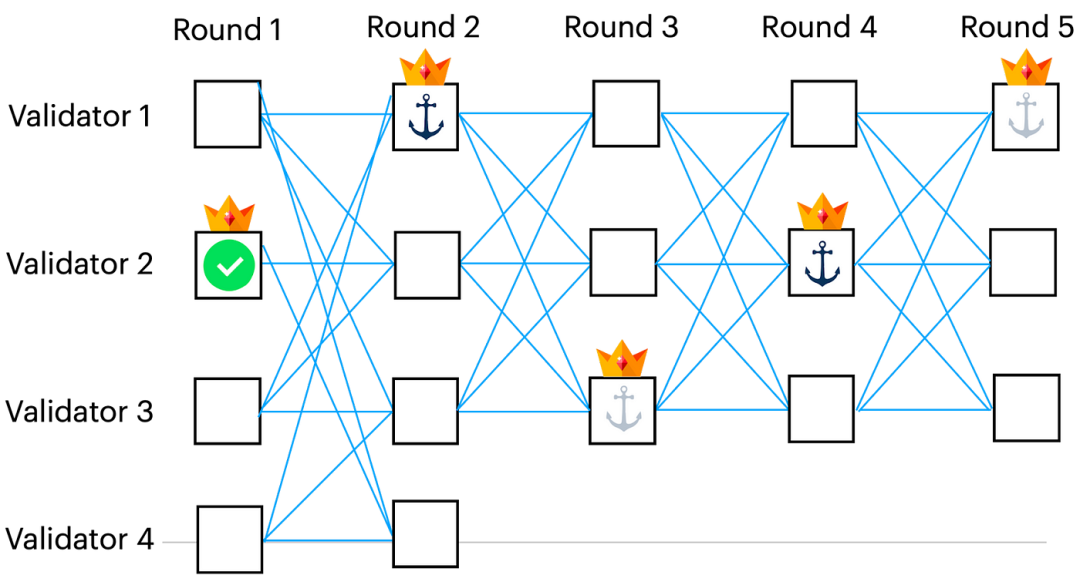

Similar to Bullshark, validators a priori agree on potential anchors, i.e., there is a known mapping F: R -> V that maps rounds to leaders. Shoal runs Bullshark instances one after the other, so for each instance, the anchor is pre-determined by the mapping F. Each instance orders one anchor, which triggers the switch to the next instance.

Initially, Shoal launches the first instance of Bullshark in the first round of the DAG and runs it until the first ordered anchor, say in round r, is determined. All validators agree on this anchor. Thus, all validators can agree on reinterpreting the DAG from round r+1 with certainty. Shoal simply launches a new Bullshark instance in round r+1.

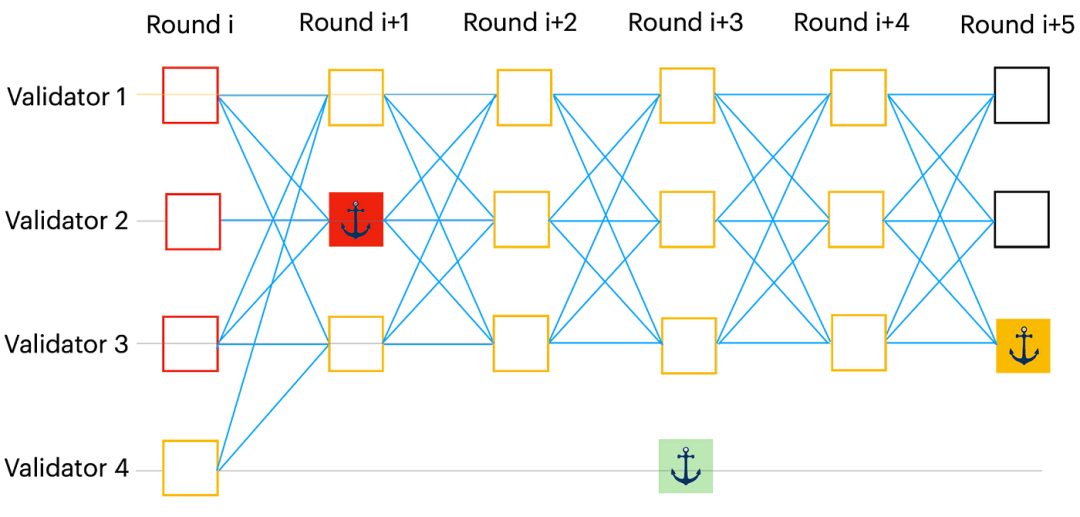

In the best case, this allows Shoal to order an anchor in every round. The anchor of the first round is ordered by the first instance. Then, Shoal starts a new instance in the second round, which has its own anchor that is ordered by that instance; another new instance then orders an anchor in the third round, and the process continues. See the illustration in the figure below:

Figure 4: Vertices corresponding to the leaders determined by F are marked with a crown. The first instance of Bullshark interprets the DAG with anchors in rounds 1, 3, and 5, and determines the anchor in round 1 (marked with a green check mark) to be the first one ordered in the first instance. (Note that in general, this anchor can be skipped, and some other anchor will be ordered first.) Then, the rest of the first instance is discarded, and a new instance of Bullshark starts from round 2, with anchors marked in rounds 2 and 4.
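The instance-switching logic can be sketched as a simple loop. This is a toy model, not the actual implementation: Bullshark's commit rule is replaced by a stand-in that treats an anchor as orderable if its round appears in a given set `committable`, and the round-robin mapping passed as `leader_of` is a hypothetical choice for F.

```python
def toy_first_ordered_anchor(committable, start_round, max_round):
    """Stand-in for one Bullshark instance started at `start_round`.

    The instance's anchors sit at start_round, start_round + 2, ...;
    return the round of the first one that can be ordered, or None.
    """
    for r in range(start_round, max_round + 1, 2):
        if r in committable:
            return r
    return None


def shoal_ordered_anchors(committable, max_round, leader_of):
    """Run instances back to back; each ordered anchor triggers the next instance."""
    ordered, start = [], 1
    while start <= max_round:
        r = toy_first_ordered_anchor(committable, start, max_round)
        if r is None:
            break
        ordered.append((r, leader_of(r)))
        start = r + 1  # reinterpret the DAG from round r + 1 with a new instance
    return ordered


def leader_of(r):
    return r % 4  # hypothetical round-robin F over 4 validators


best = shoal_ordered_anchors({1, 2, 3, 4, 5}, 5, leader_of)
skipped = shoal_ordered_anchors({1, 3, 4, 5}, 5, leader_of)
print([r for r, _ in best])     # [1, 2, 3, 4, 5]
print([r for r, _ in skipped])  # [1, 4, 5]
```

In the second run, the anchor of round 2 cannot be ordered, so the instance started at round 2 only makes progress at its next anchor in round 4. A skipped anchor stalls the pipeline until the current instance orders something.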

Leader Reputation

Skipping anchors during Bullshark ordering increases latency. In this case, pipelining cannot help, because a new instance cannot start before the previous instance orders its anchor. Shoal uses a reputation mechanism to make it unlikely that leaders whose anchors were skipped are chosen again in the future: it assigns each validator a score based on its recent activity. Validators that respond and participate in the protocol receive high scores; otherwise, a validator receives a low score, since it may be crashed, slow, or malicious.

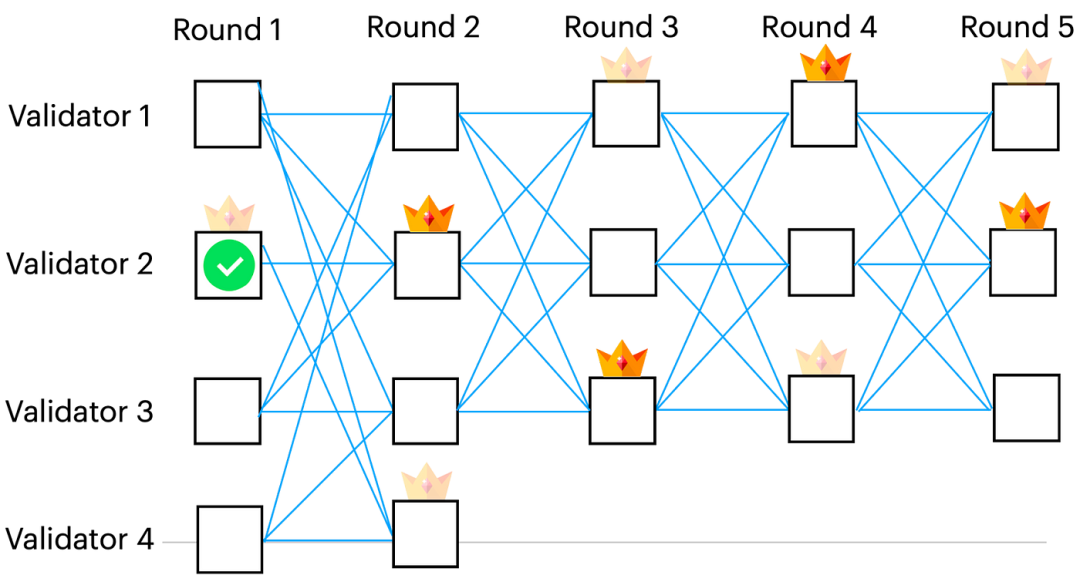

The idea is to deterministically recalculate the predefined mapping F from round to leader when the score is updated, biased towards leaders with higher scores. To achieve consensus among validators on the new mapping, they should achieve consensus on the score, thus achieving consensus on the history used to derive the score.

In Shoal, pipelining and leader reputation combine naturally because they both use the same core technique: re-interpreting the DAG after agreeing on the first ordered anchor.

In fact, the only difference is that after ordering the anchor in round r, validators compute a new mapping F’ starting from round r+1, based on the causal history of the ordered anchor in round r. Then, validators use the updated anchor selection function F’ to execute a new instance of Bullshark starting from round r+1. See the following figure:

Figure 5. Vertices corresponding to leaders determined by F are marked with transparent crowns. The first instance of Bullshark ordered an anchor point in round 1, marked with a green check mark, and then calculated a new mapping F' based on the causal history of the anchor. The leaders determined by F' are marked with colored crowns.
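The recomputation of the mapping can be sketched as below. The article does not specify Shoal's scoring rule, so both the score (number of vertices a validator contributed to the ordered anchor's causal history) and the selection rule (rotate over the `keep` best-scoring validators) are assumptions made purely for illustration.

```python
from collections import Counter


def scores_from_history(history_authors, validators):
    """Toy score: how many vertices each validator has in the anchor's causal history."""
    counts = Counter(history_authors)
    return {v: counts.get(v, 0) for v in validators}


def recompute_mapping(scores, start_round, num_rounds, keep):
    """Deterministically build F' for rounds start_round, start_round + 1, ...

    Validators are ranked by (-score, id) and only the top `keep` are eligible,
    which biases leader selection towards higher scores.
    """
    ranked = sorted(scores, key=lambda v: (-scores[v], v))[:keep]
    return {
        start_round + i: ranked[i % len(ranked)]
        for i in range(num_rounds)
    }


validators = [0, 1, 2, 3]
# Authors of the vertices in the ordered anchor's causal history (toy data):
# validator 3 contributed nothing, for example because it is slow or crashed.
history_authors = [0, 1, 2, 0, 1, 2]
scores = scores_from_history(history_authors, validators)
f_prime = recompute_mapping(scores, start_round=2, num_rounds=4, keep=3)
print(scores)   # {0: 2, 1: 2, 2: 2, 3: 0}
print(f_prime)  # {2: 0, 3: 1, 4: 2, 5: 0}
```

The inputs are taken only from the causal history of an anchor that all validators have agreed on, so every validator derives the same scores and therefore the same F’ without extra communication.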

No More Timeouts

Timeouts play a crucial role in all leader-based deterministic partially synchronous BFT implementations. However, the complexity they introduce increases the number of internal states that need to be managed and observed, which increases the complexity of the debugging process and requires more observability techniques.

Timeouts also significantly increase latency because they need to be properly configured and often require dynamic adjustment since they are highly dependent on the environment (network). The protocol pays the full timeout delay penalty for a faulty leader before transitioning to the next leader. Therefore, the timeout settings cannot be too conservative, but if the timeout is too short, the protocol may skip good leaders. For example, we observed that leaders in Jolteon/Hotstuff were overwhelmed under high load and timed out before they made progress.

Unfortunately, leader-based protocols such as Hotstuff and Jolteon inherently require timeouts to ensure that the protocol makes progress every time a leader fails. Without timeouts, even a crashed leader may halt the protocol forever. Since faulty leaders and slow leaders cannot be distinguished during asynchronous periods, timeouts may cause validators to view-change through all leaders without making any consensus progress.

In Bullshark, timeouts are used in DAG construction to ensure that, during synchrony, honest leaders add their anchors to the DAG fast enough for them to be ordered.

We observe that DAG construction provides a “clock” that estimates network speed. As long as n-f honest validators continue adding vertices to the DAG, rounds continue to advance even without timeouts. While Bullshark may not be able to order at network speed (due to problematic leaders), the DAG still grows at network speed, even if some leaders are faulty or the network is asynchronous. Eventually, when a non-faulty leader broadcasts its anchor fast enough, the entire causal history of the anchor is ordered.

In our evaluation, we compared Bullshark with and without timeouts under the following scenarios:

1) Fast leader, meaning it is at least faster than other validators. In this case, both methods offer the same latency, as anchors are ordered and timeouts are not used.

2) Faulty leader, in which case Bullshark without timeouts offers better latency, as validators immediately skip its anchors, while validators with timeouts wait for the timeouts to expire before proceeding.

3) Slow leader, which is the only case where Bullshark with timeouts performs better. This is because, without timeouts, the anchor may be skipped when the leader cannot broadcast it fast enough, while with timeouts, validators wait for the anchor.

In Shoal, avoiding timeouts and leader reputation are closely related. Repeatedly waiting for slow leaders increases latency, and the leader reputation mechanism excludes slow validators from being selected as leaders. In this way, the system leverages fast validators to run at network speed in all realistic scenarios.

Note that the FLP impossibility result shows that no deterministic consensus protocol can avoid timeouts. Shoal cannot circumvent this result, as there exists a theoretical adversarial schedule of events that can prevent all anchors from being ordered. Instead, Shoal falls back to timeouts after a configurable number of consecutively skipped anchors. In practice, this scenario is highly unlikely.
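The fallback rule amounts to a small counter. The sketch below is only an illustration of that rule; the class name, method names, and the threshold value are hypothetical and not taken from the Shoal implementation.

```python
class TimeoutFallback:
    """Tracks consecutively skipped anchors and decides when to re-enable timeouts."""

    def __init__(self, max_consecutive_skips):
        self.max_consecutive_skips = max_consecutive_skips  # configurable threshold
        self.consecutive_skips = 0

    def on_anchor_ordered(self):
        # Progress was made, so the protocol can run without timeouts again.
        self.consecutive_skips = 0

    def on_anchor_skipped(self):
        self.consecutive_skips += 1

    @property
    def use_timeouts(self):
        return self.consecutive_skips >= self.max_consecutive_skips


fallback = TimeoutFallback(max_consecutive_skips=3)
fallback.on_anchor_skipped()
fallback.on_anchor_skipped()
print(fallback.use_timeouts)  # False
fallback.on_anchor_skipped()
print(fallback.use_timeouts)  # True
fallback.on_anchor_ordered()
print(fallback.use_timeouts)  # False
```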

Universal Responsiveness

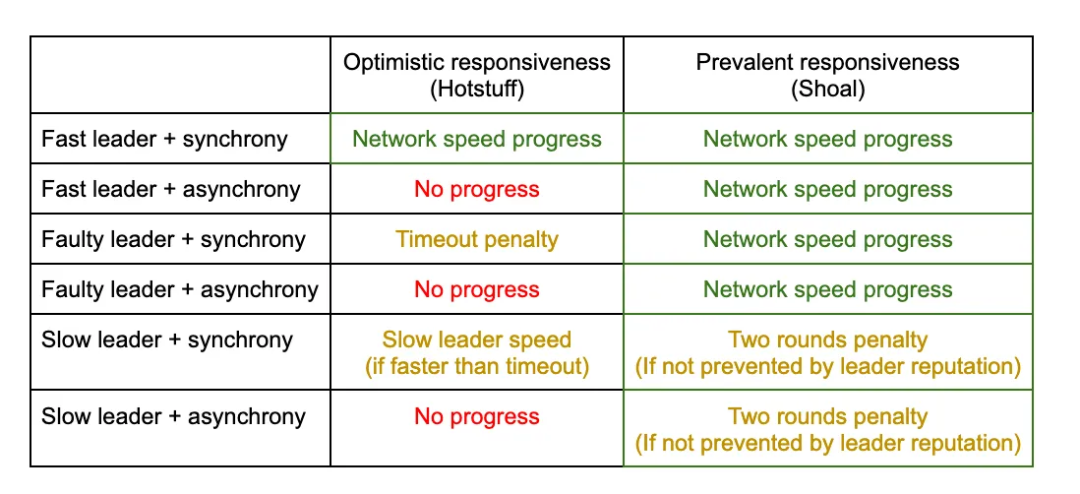

The Hotstuff paper popularized the concept of optimistic responsiveness. Although it is not formally defined, it intuitively means that the protocol can run at network speed under good conditions, which include a fast leader and a synchronous network.

Shoal offers a better property called universal responsiveness. Specifically, compared to Hotstuff, Shoal continues to run at network speed even if the leader fails in a configurable number of consecutive rounds or during asynchronous periods experienced by the network. See the more detailed comparison in the table below.

Note that universal responsiveness provides strictly better progress guarantees during asynchronous periods and when leaders fail. During synchrony with a slow leader, the two properties cannot be compared, because the outcome depends on how slow the leader is. However, given leader reputation, slow leaders should be rare in Shoal.

Evaluation

We implemented Bullshark and Shoal on top of Quorum Store, our Narwhal implementation. A detailed comparison between Shoal, Bullshark, and Jolteon can be found in the evaluation section of the Shoal paper; here we provide some highlights.

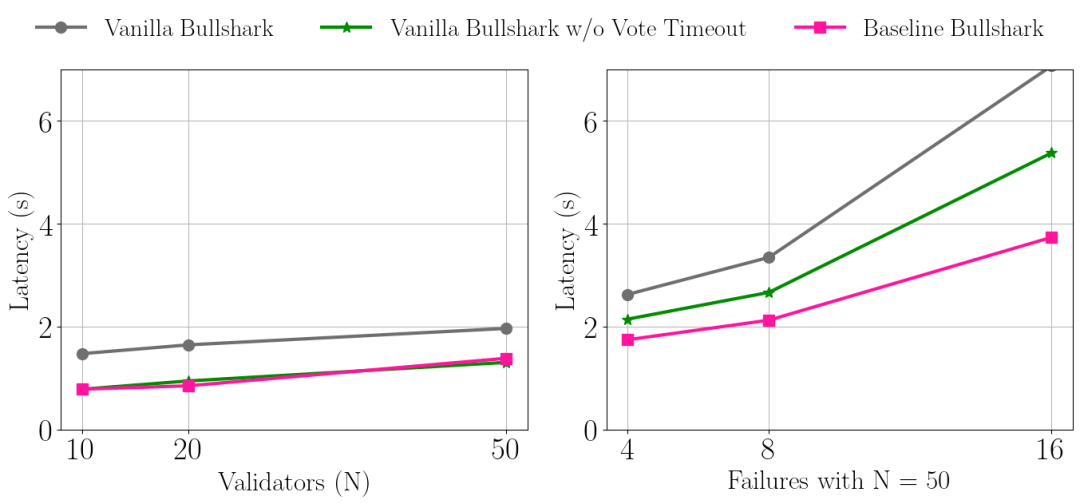

First, to demonstrate the power of asynchronous DAG construction, we compare Bullshark with and without timeouts. The full Bullshark paper assumes an asynchronous network but provides a fast-path mode, and therefore requires timeouts in all rounds. We call this version Vanilla Bullshark. We observe that the stand-alone version that assumes a partially synchronous network does not need timeouts in voting rounds. We call this version Vanilla Bullshark w/o Vote timeout. Baseline Bullshark is the version without any timeouts.

The following figure compares the effect of timeouts on Bullshark latency with and without failures. Clearly, Baseline Bullshark (no timeout) performs best when failures occur. Without failures, Baseline Bullshark is comparable to Vanilla Bullshark w/o Vote timeout. This is because, as mentioned earlier, timeouts may have an advantage in the case of a good but slow leader without a leader reputation mechanism.

Figure 6: Effect of timeouts on Bullshark latency without failures (left) and with failures (right), where there are 50 validators in the failure case.

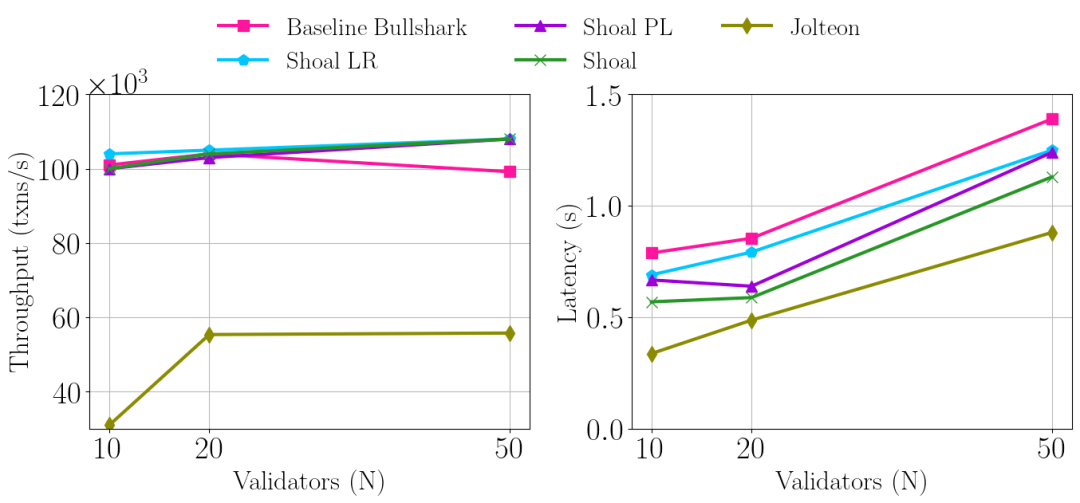

Next, we instantiate Shoal with Baseline Bullshark (no timeouts) and demonstrate the latency improvements of pipelining and leader reputation, with and without failures. For completeness, we also compare it with Jolteon in the no-failure case.

Figure 7 below shows no-failure cases, where pipeline and leader reputation can both individually reduce latency, but combining them can achieve optimal latency.

As for Jolteon, it cannot scale beyond 20 validators, and even when it runs on top of Quorum Store, which eliminates the data propagation bottleneck, it achieves only roughly half the throughput of Bullshark/Shoal.

The results show that Shoal greatly improves Bullshark latency. As for Jolteon, it is important to note that we only measured consensus latency. Since Jolteon cannot run natively on top of a DAG, it requires additional latency to decouple data propagation, which we did not measure. Therefore, under high load, Shoal should match Jolteon’s end-to-end latency.

Figure 7: Throughput and latency under no-failure conditions, where Shoal PL and Shoal LR support pipeline and leader reputation only, respectively.

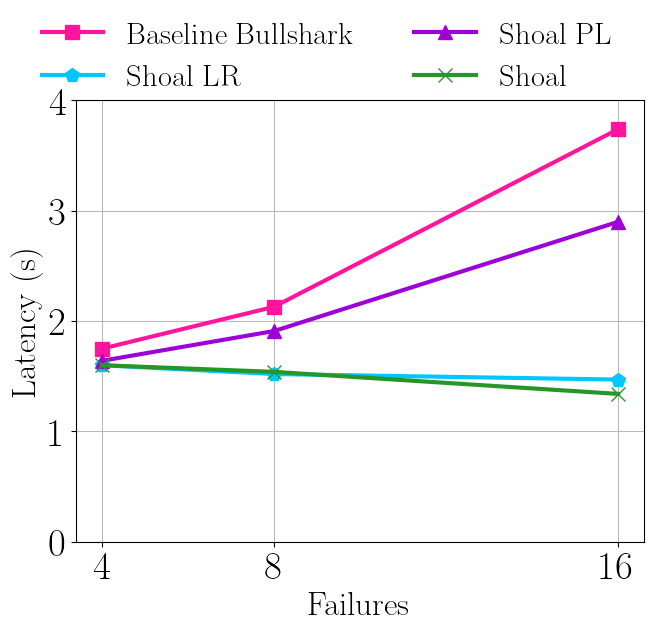

Figure 8 below shows failure cases with 50 validator nodes, where the leader reputation mechanism significantly improves latency by reducing the likelihood of failed validators being selected as leaders. Note that there were 16 failures out of 50, and Shoal’s latency was 65% lower than Baseline Bullshark.
