Deep dive into decentralized proof, proof market, and ZK infrastructure.

Article author: Figment Capital

Article translation: Block unicorn

Introduction:

Zero-knowledge (ZK) technology is rapidly improving. As it advances, more ZK applications will emerge, driving up demand for zero-knowledge proof (ZKP) generation.

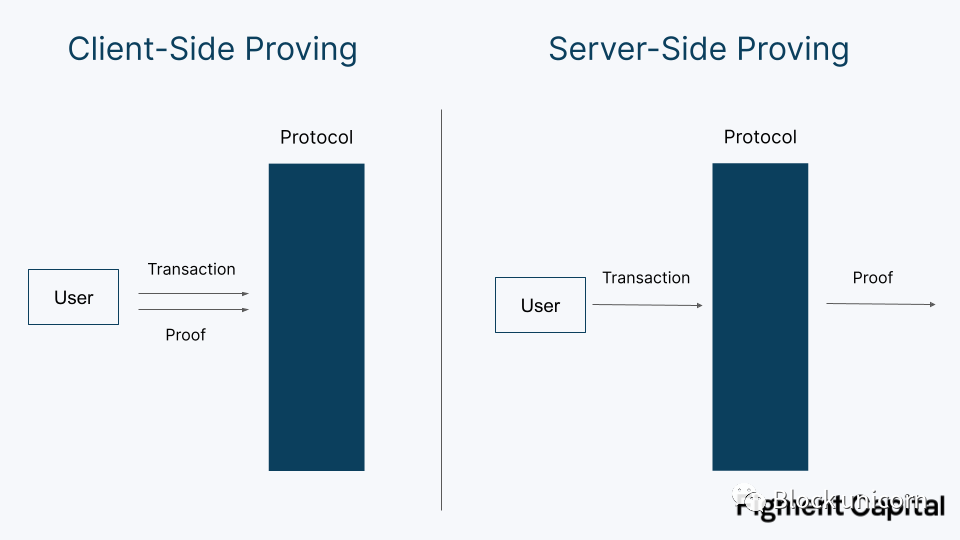

Currently, most ZK applications are privacy protocols. Proofs for privacy applications such as ZCash and TornadoCash are generated locally by users, because generating the ZKP requires knowledge of secret inputs. These computations are relatively small and can run on consumer-grade hardware. We call ZK proofs generated by users client-side proofs.

While some proofs are relatively lightweight to generate, others require far more computation. For example, validity rollups (i.e., zk-rollups) may need to prove thousands of transactions in a ZK virtual machine (zkVM), which demands more computational resources and therefore takes longer to prove. Generating proofs for these large computations requires powerful machines. Fortunately, because these proofs rely only on the succinctness of ZKPs rather than on the zero-knowledge property (there is no secret input), proof generation can be safely outsourced to external parties. We call outsourced proof generation (handing the proving computation to cloud providers or other participants) server-side proofs.

Block unicorn’s note: The difference between zero-knowledge and zero-knowledge proofs. Zero-knowledge is a privacy property of an interaction: the prover convinces the verifier that a statement is true without revealing any other information, thus protecting privacy.

Zero-knowledge proof is a cryptographic tool used to prove the correctness of a certain assertion without revealing any additional information about the assertion. It is a technology based on mathematical algorithms and protocols used to prove the truth of a certain assertion to others without revealing sensitive information. Zero-knowledge proofs allow the proving party to provide proof to the verifying party, while the verifying party can verify the correctness of the proof but cannot obtain the specific information behind the proof.

In short, zero-knowledge is a general concept that refers to maintaining the confidentiality of information during interaction or proof, while zero-knowledge proof is a specific cryptographic technique used to achieve zero-knowledge interactive proof.

Block unicorn notes that the terms “prover” and “validator” have different meanings in this context.

Prover: refers to the entity that performs specific proof generation tasks, responsible for generating zero-knowledge proofs to verify and prove specific computations or transactions. Provers can be computing nodes running on decentralized networks or specialized hardware devices.

Validator: refers to the nodes participating in the blockchain consensus mechanism, responsible for verifying and validating the validity of transactions and blocks, and participating in the consensus process. Validators usually need to stake a certain amount of tokens as security guarantees and receive rewards based on the proportion of their staked amount. Validators may not directly perform specific proof generation tasks, but they ensure the security and integrity of the network by participating in the consensus process.

Server-Side Proving

Server-side proving is applied in many blockchain applications, including:

1. Scalability: Validity Rollup technologies such as Starknet, zkSync, and Scroll extend the capabilities of Ethereum by moving calculations off-chain.

2. Cross-chain interoperability: Proofs can be used to enable trust-minimized communication between different blockchains, allowing secure data and asset transfers. Teams include Polymer, Polyhedra, Herodotus, and Succinct.

3. Trustless middleware: Middleware projects like RiscZero and HyperOracle leverage zero-knowledge proofs to provide access to trustless off-chain computation and data.

4. Succinct L1 (ZKP-based layer 1 public chain): Succinct blockchains like Mina and Repyh use recursive SNARKs so that even users with limited computing power can independently verify state.

Much of the prerequisite cryptography, tooling, and hardware has now been developed, and applications that use server-side proving are finally entering the market. In the coming years, server-side proving will grow exponentially, requiring new infrastructure and operators capable of generating these computationally intensive proofs efficiently.

Although centralized in the initial stages, most applications that use server-side proving have a long-term goal of decentralizing the role of the prover. As with other components of the infrastructure stack, such as validators and sequencers, effectively decentralizing the prover role will require careful protocol and incentive design.

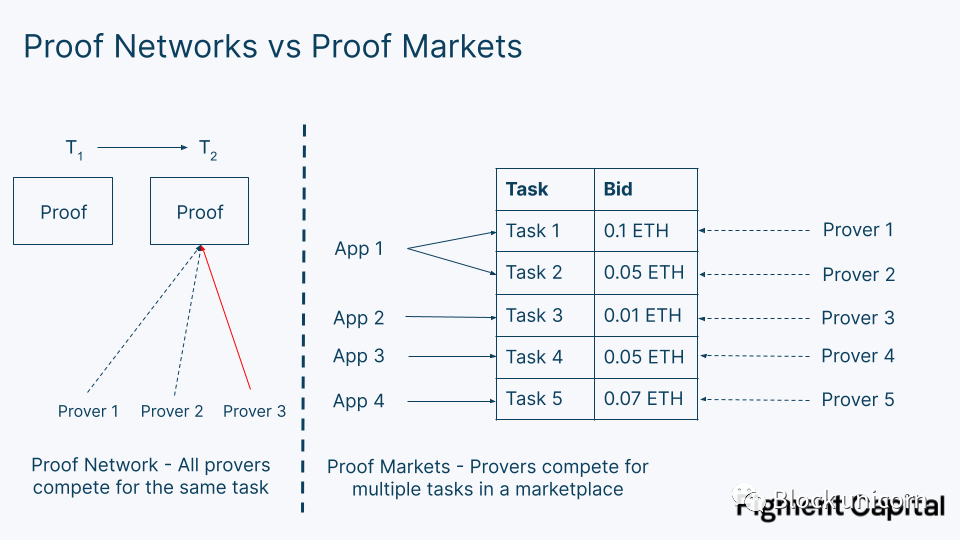

In this article, we explore the design of proof networks and proof markets. We first distinguish between the two. A proof network is a collection of provers that serves a single application, such as a validity rollup. A proof market is an open market where multiple applications can submit requests for verifiable computations. Next, we outline the current decentralized proof network models and then share some preliminary thoughts on proof market design, an area that is still underdeveloped. Finally, we discuss the challenges of operating zero-knowledge infrastructure and conclude that staking providers and specialized zero-knowledge teams are better suited than PoW miners to meet the emerging needs of proof markets.

Proof Networks and Proof Markets

Zero-knowledge (ZK) applications require provers to generate their proofs. Although currently centralized, most ZK applications will move towards decentralized proof generation. Provers do not need to be trusted to produce correct outputs, because proofs can be easily verified. However, applications pursue decentralized proving for a few reasons:

1. Liveness: Multiple provers keep the protocol reliable and prevent downtime when some provers are temporarily unavailable.

2. Censorship resistance: Having more provers increases censorship resistance, because a small prover set may refuse to prove certain types of transactions.

3. Competition: A larger prover set increases market pressure on operators to produce faster, cheaper proofs.

This presents applications with a design decision: should they bootstrap their own proof network or outsource the responsibility to a proof market? Outsourcing proof generation to proof markets now under development, such as =nil;, RiscZero, and Marlin, provides plug-and-play decentralized proving and lets application developers focus on other components of their stack. In fact, these markets are a natural extension of the modular thesis. Similar to shared sequencers, proof markets are effectively shared prover networks. By sharing provers between applications, they can also maximize hardware utilization: provers can be repurposed when one application does not immediately need proofs.

However, proof markets also have some drawbacks. Internalizing the prover role can increase the utility of the native token by allowing the protocol to use its own token for staking and prover incentives. It can also give applications greater sovereignty by avoiding an external point of failure.

One important difference between proof networks and proof markets is that in a proof network, the prover set typically needs to satisfy only one proof request at a time. For example, in a validity rollup, the network receives a series of transactions, computes a validity proof showing that they were executed correctly, and sends the proof to L1 (the layer 1 network). That single validity proof is generated by one prover selected from the decentralized set. A proof market, by contrast, must serve requests from many applications.

Decentralized Proof Networks

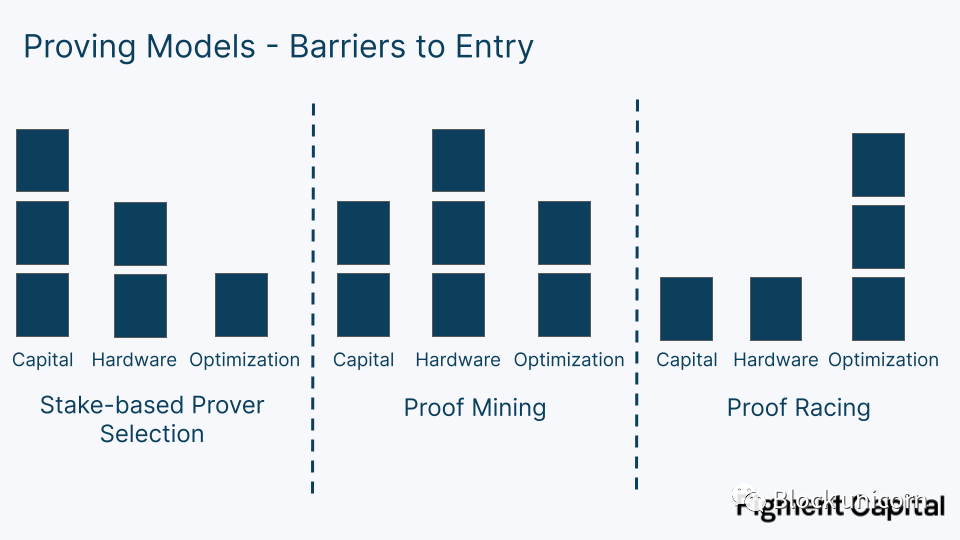

As ZK protocols mature, many teams will gradually decentralize their infrastructure to improve network liveness and censorship resistance. Introducing multiple provers adds complexity to the network, especially since the protocol must now decide which prover to assign to a particular computation. There are currently three main approaches:

Stake-based prover selection: Provers stake assets to participate in the network. In each proof period, one prover is selected at random, weighted by the value of its staked tokens, to compute the output. The selected prover is compensated for generating the proof. Specific slashing conditions and leader selection may vary between protocols. This model is similar to a PoS mechanism.

Proof mining: A prover's task is to repeatedly generate ZKPs until it produces a proof with a sufficiently rare hash value. Doing so grants the right to prove in the next period and to receive the period reward, so provers that can generate more ZKPs are more likely to win periods. This approach is very similar to PoW mining in that it requires a lot of energy and hardware. The key difference is that in traditional PoW, the hash calculation is only a means to an end: generating SHA-256 hashes in Bitcoin has no value other than increasing network security. In proof mining, by contrast, the network incentivizes miners to speed up ZKP generation, which ultimately benefits the network. Proof mining was pioneered by Aleo.

Proof races (proof contests): In each period, provers compete to generate the proof as quickly as possible. The first to generate the proof receives the period reward. This approach is susceptible to winner-takes-all dynamics. If a single operator can generate proofs faster than everyone else, it should win every period. This can be mitigated by distributing the reward among the first N operators to generate a valid proof, or by introducing some randomness to reduce centralization. Even then, the fastest operator can still run multiple machines to earn additional income.
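The three selection models above can be compared in a minimal Python sketch. Everything here is an illustrative assumption and not any protocol's actual rules: the prover names and stake values are made up, `fake_proof` is a SHA-256 placeholder standing in for real ZKP generation, and the race reward is split equally among the first N valid proofs.

```python
import hashlib
import random


def select_by_stake(stakes, rng):
    """Stake-based selection: win probability is proportional to stake."""
    names = list(stakes)
    return rng.choices(names, weights=[stakes[n] for n in names], k=1)[0]


def fake_proof(statement: bytes, nonce: int) -> bytes:
    """Placeholder for ZKP generation: deterministic bytes, NOT a real proof."""
    return hashlib.sha256(statement + nonce.to_bytes(8, "big")).digest()


def mine_proof(statement: bytes, difficulty_bits: int, max_attempts: int = 1_000_000):
    """Proof mining: generate proofs until one hashes below the target."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_attempts):
        proof = fake_proof(statement, nonce)
        if int.from_bytes(hashlib.sha256(proof).digest(), "big") < target:
            return nonce, proof
    return None  # no sufficiently rare hash found within max_attempts


def race_payouts(submissions, reward: float, n_winners: int):
    """Proof race: split the reward equally among the first N valid proofs.

    submissions is a list of (prover, finish_seconds, is_valid) tuples.
    """
    valid = sorted((s for s in submissions if s[2]), key=lambda s: s[1])
    winners = [s[0] for s in valid[:n_winners]]
    return {w: reward / len(winners) for w in winners}


# Hypothetical stakes: alice should win roughly 60% of periods.
rng = random.Random(7)
stakes = {"alice": 60, "bob": 30, "carol": 10}
wins = {name: 0 for name in stakes}
for _ in range(10_000):
    wins[select_by_stake(stakes, rng)] += 1

mined = mine_proof(b"period-1", difficulty_bits=8)

# "c" finishes first but its proof is invalid, so "b" and "a" share the reward.
payouts = race_payouts(
    [("a", 1.2, True), ("b", 0.9, True), ("c", 0.5, False), ("d", 2.0, True)],
    reward=90.0,
    n_winners=2,
)
```

Note that in `race_payouts` an operator running several fast machines would appear as several entries and could take more than one of the N slots, which is the residual centralization risk described above.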

Another technique is distributed proving. Here, instead of a single prover winning the right to prove in a given period, the proof generation task is assigned to multiple participants who work together to produce a single output. One example is the Joint Proof Network, which splits proofs into many smaller statements that can be proved individually and then recursively combined in a tree structure into a single statement. Another example is zkBridge, which proposes a new ZKP protocol called deVirgo that can easily distribute proving across multiple machines and has already been deployed by Polyhedra. Distributed proving lends itself naturally to decentralization and can significantly increase the speed of proof generation. Participants can form a computing cluster and take part in proof mining or proof races together, with rewards distributed according to each participant's contribution to the cluster. Distributed proving is compatible with any prover selection model.
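The tree-structured aggregation described above can be sketched as follows. This is only a structural illustration under stated assumptions: `prove_leaf` and `merge` are hypothetical helpers that use SHA-256 as a stand-in for proving a statement and for recursively proving two child proofs, so no real ZKP is produced.

```python
import hashlib


def prove_leaf(chunk: bytes) -> bytes:
    """Stand-in for proving one small statement (hypothetical helper)."""
    return hashlib.sha256(b"leaf:" + chunk).digest()


def merge(left: bytes, right: bytes) -> bytes:
    """Stand-in for a recursive proof over two child proofs."""
    return hashlib.sha256(b"node:" + left + right).digest()


def aggregate(chunks):
    """Combine leaf proofs pairwise until a single root remains.

    Returns (root, rounds). Leaves can be proved in parallel by different
    participants, and each round of merges can also run in parallel.
    """
    if not chunks:
        raise ValueError("need at least one chunk")
    layer = [prove_leaf(c) for c in chunks]
    rounds = 0
    while len(layer) > 1:
        next_layer = [merge(layer[i], layer[i + 1]) for i in range(0, len(layer) - 1, 2)]
        if len(layer) % 2 == 1:
            next_layer.append(layer[-1])  # odd proof out is carried up unchanged
        layer = next_layer
        rounds += 1
    return layer[0], rounds


root, rounds = aggregate([f"tx-{i}".encode() for i in range(8)])
```

With eight leaves the tree needs three merge rounds, so the sequential depth grows with the logarithm of the number of statements. If any participant fails to deliver its piece, the root cannot be built.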

The choice between stake-based proving, proof mining, and proof races balances three factors: capital requirements, hardware requirements, and prover optimization.

The stake-based prover model requires provers to lock up capital but places less emphasis on accelerating proof generation, because prover selection is not based on proof speed (although faster provers may be more likely to attract delegations). Proof mining is more balanced, requiring some capital to accumulate machines and pay energy costs in order to generate more proofs. It also encourages ZKP acceleration, just as Bitcoin mining encourages accelerated SHA-256 hashing. Proof races require the least capital and infrastructure: an operator can run a single highly optimized machine to compete in each period. Although they are the most lightweight method, we believe proof races face the highest centralization risk because of their winner-takes-all dynamics. Proof races (like mining) also lead to redundant computation, but they provide better liveness guarantees because there is no need to worry about a selected prover missing its period.

Another benefit of the stake-based model is that provers face less pressure to compete on performance, which leaves room for cooperation among operators. Cooperation typically includes knowledge sharing, such as spreading new techniques that accelerate proof generation or guiding new operators on how to start proving. In contrast, proof races resemble MEV (maximal extractable value) searching, where entities are more secretive and adversarial in order to keep a competitive edge.

Of these three factors, we believe the demand for speed will be the primary variable that determines whether a network can decentralize its prover set. Capital and hardware will be plentiful, but the more provers compete on speed, the less decentralized the network's proving will be. On the other hand, the more speed is incentivized, the better the network will perform, all else being equal. While the specific impact may vary, proof networks face the same trade-off between performance and decentralization as layer 1 blockchains.

Which proof model will win?

We expect most proof networks to adopt a stake-based model, which offers the best balance between incentivizing performance and maintaining decentralization.

Distributed proving is unlikely to suit most validity rollups. A model in which each prover proves a small portion of the transactions and the results are then recursively aggregated would run into network bandwidth limitations. The sequential nature of rollup transactions also makes ordering difficult: proofs of earlier transactions must be included before later transactions can be proven. If one prover fails to deliver its proof, the final proof cannot be constructed.

Outside of Aleo and Ironfish, ZK mining will not be popular in ZK applications. It is energy-intensive and unnecessary for most applications. Proof races will also be unpopular because they lead to centralization. The more a protocol prioritizes performance over decentralization, the more attractive race-based models become. However, readily available ZK hardware and software acceleration already provides significant speed improvements. We expect that, for most applications, adopting a proof race model to speed up proof generation would bring only marginal improvements to the network and is not worth sacrificing decentralization for.

Designing Proof Markets

As more applications adopt zero-knowledge (ZK) technology, many are realizing they would rather outsource their ZK infrastructure to proof markets than handle it internally. Unlike proof networks that serve only a single application, proof markets can serve multiple applications and meet their respective proof requirements. These markets aim to be high-performance, decentralized, and flexible.

- High performance: Market demand will call for a wide variety of proofs. For example, some proofs will require more computation than others. Proofs that take longer to generate will need to be sped up through dedicated hardware and other optimizations, and the market will also need to offer fast proof generation to applications and users willing to pay for it.

- Decentralization: Like proof networks, proof markets and the applications they serve want the market to be decentralized. Decentralized proving improves liveness, censorship resistance, and market efficiency.

- Flexibility: All else equal, a proof market wants to be as flexible as possible to accommodate the needs of different applications. For example, a zkBridge that connects to Ethereum may prefer a final proof like Groth16 for cheap on-chain verification. In contrast, zkML (where ML stands for machine learning) models may prefer a Nova-based proof scheme optimized for recursive proofs. Flexibility also shows in the integration process: the market can offer a zkVM (zero-knowledge virtual machine) for proving programs written in high-level languages like Rust, giving developers an easier path to integration.

Designing a proof market that is efficient, decentralized, and flexible enough to support a variety of zero-knowledge proof (ZKP) applications is a difficult and underexplored research area. Addressing this problem requires careful incentive and technical design. Below, we share some early considerations and trade-offs in designing a proof market:

- Incentivization and punishment mechanisms
- Matching mechanism
- Custom circuits vs. zero-knowledge virtual machines (zkVMs)
- Single vs. aggregated proofs
- Hardware heterogeneity
- Operator diversity
- Discounts, derivatives, and order types
- Privacy
- Progressive and persistent decentralization

Incentivization and punishment mechanisms

Provers must be subject to incentive and punishment mechanisms to maintain the integrity and performance of the market. The simplest way to introduce these is through staking and slashing. Proof bids, and possibly token inflation rewards, can incentivize operators.

A minimum stake can be required to join the network in order to prevent Sybil attacks. Provers who submit false proofs may be punished by forfeiting their staked tokens. Provers who take too long to generate a proof, or who fail to generate one at all, may also be punished. This penalty may be proportional to the bid placed on the proof: the higher the bid (and thus the greater the economic significance), the greater the penalty for a delayed proof.
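A bid-proportional penalty of this kind might look like the sketch below. The rule and its parameters are assumptions made for illustration, not any market's actual slashing schedule: an invalid proof forfeits the whole stake, and a late proof costs `late_rate_bps` basis points of the bid per second of delay, capped at the stake. Amounts are integers in the smallest token unit.

```python
def penalty(bid: int, stake: int, seconds_late: int = 0,
            invalid: bool = False, late_rate_bps: int = 100) -> int:
    """Tokens slashed from a prover's stake for one proof request.

    Assumed rule (hypothetical parameters):
      - invalid proof: the entire stake is forfeited
      - late proof: late_rate_bps basis points of the bid per second late,
        capped at the stake
    """
    if invalid:
        return stake
    late_fee = bid * late_rate_bps * max(seconds_late, 0) // 10_000
    return min(stake, late_fee)


small_bid = penalty(bid=100, stake=1_000, seconds_late=30)
large_bid = penalty(bid=400, stake=1_000, seconds_late=30)
```

For the same 30-second delay, the request with the larger bid incurs the larger penalty, which reflects the idea that higher-value requests carry more economic significance.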

Where slashing would be excessive, a reputation system can be used instead. =nil; currently uses a reputation-based system to hold provers accountable, making it unlikely that dishonest or poorly performing provers will be selected by the matching engine.

Matching Mechanism

The matching mechanism addresses the problem of connecting supply and demand in the market. Designing a matching engine, that is, defining the rules for how provers and proof requests are matched, will be one of the most difficult and important tasks for a proof market. Matching can be done through auctions or order books.

Auctions: In an auction, provers bid on proof requests to determine which prover wins the right to generate the proof. The challenge with auctions is that if the winning bidder fails to return the proof, the auction must be restarted (you cannot immediately enlist the second-highest bidder to generate the proof).

Order Books: With an order book, applications submit bids to buy proofs to an open database, and provers submit asking prices to sell proofs. A bid and an ask can be matched if two conditions are met: 1) the application's bid price is higher than the prover's asking price, and 2) the prover's delivery time is shorter than the time requested in the bid. In other words, the application submits a computation to the order book and defines the maximum reward it is willing to pay and the longest time it is willing to wait for the proof. If a prover submits an asking price and delivery time below these limits, it is eligible for matching. Order books are better suited to low-latency use cases because bids can be filled immediately.
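The order-book matching rule can be sketched in a few lines of Python. The `Bid` and `Ask` types, the example values, and the tie-breaking rule (cheapest eligible ask first, then fastest) are assumptions for illustration. The sketch also treats an ask that exactly equals the limit as eligible.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    app: str
    max_price: float    # most the application will pay for the proof
    max_seconds: float  # longest the application will wait


@dataclass(frozen=True)
class Ask:
    prover: str
    price: float    # price the prover asks for
    seconds: float  # delivery time the prover commits to


def match(bid: Bid, asks: list[Ask]) -> Optional[Ask]:
    """Return the cheapest ask that meets the bid's price and time limits."""
    eligible = [
        a for a in asks
        if a.price <= bid.max_price and a.seconds <= bid.max_seconds
    ]
    return min(eligible, key=lambda a: (a.price, a.seconds), default=None)


asks = [Ask("p1", 5.0, 120.0), Ask("p2", 3.0, 600.0), Ask("p3", 4.0, 90.0)]
bid = Bid("rollup-x", max_price=4.5, max_seconds=300.0)
best = match(bid, asks)
```

Here `p1` is too expensive and `p2` is too slow, so the bid is matched with `p3`. A real engine would also have to handle partial fills, expiry, and reputation filters, which this sketch leaves out.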

The proof market is multi-dimensional: applications must request computations within certain price and time ranges. Applications may also have dynamic preferences about proof delay, with the price they are willing to pay for a proof decreasing over time. Although order books are efficient, they fall short of capturing the full complexity of user preferences.

Other matching models can be borrowed from other decentralized markets, such as Filecoin's decentralized storage market, which uses off-chain negotiation, and Akash's decentralized cloud computing market, which uses reverse auctions. In Akash's market, developers (known as “tenants”) submit computing tasks to the network, and cloud providers bid on the workload. Tenants can then choose which bid to accept. Reverse auctions work well for Akash because workload latency is not important and tenants can manually choose the bid they want. In contrast, a proof market needs to run quickly and automatically, which makes reverse auctions a suboptimal matching system for proof generation.

The protocol may restrict the types of bids that some provers can accept. For example, a prover with a low reputation score may be prohibited from matching with high-value bids.

The protocol must also guard against attack vectors opened up by permissionless provers. In some cases, provers can perform proof-delay attacks: by delaying or failing to return a proof, a prover can expose the protocol or its users to certain economic attacks. If the profit from the attack is large, token slashing or reputation penalties may not deter a malicious prover. When a proof is delayed, rotating the right to generate it to a new prover can minimize downtime.
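One way to picture rotation is a timeout loop that hands the request to the next prover whenever the current one misses its deadline. The function name, the fixed per-prover timeout, and the example delivery times below are hypothetical and serve only as a sketch.

```python
def assign_with_rotation(provers, timeout: float, delivery_times: dict):
    """Try provers in order, rotating to the next one after each timeout.

    delivery_times maps a prover to the seconds it needs, or None if it
    never returns a proof. Returns (prover, total_seconds_elapsed), with
    prover set to None if every candidate missed the deadline.
    """
    elapsed = 0.0
    for prover in provers:
        needed = delivery_times.get(prover)
        if needed is not None and needed <= timeout:
            return prover, elapsed + needed
        elapsed += timeout  # deadline missed, so the right to prove rotates
    return None, elapsed


winner, total = assign_with_rotation(
    ["p1", "p2", "p3"],
    timeout=10.0,
    delivery_times={"p1": None, "p2": 25.0, "p3": 4.0},
)
```

In this example `p1` never responds and `p2` is too slow, so the proof arrives from `p3` after two timeouts plus its own proving time. A shorter timeout reduces the downtime a delaying prover can cause, at the cost of rotating away from honest but slow provers.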

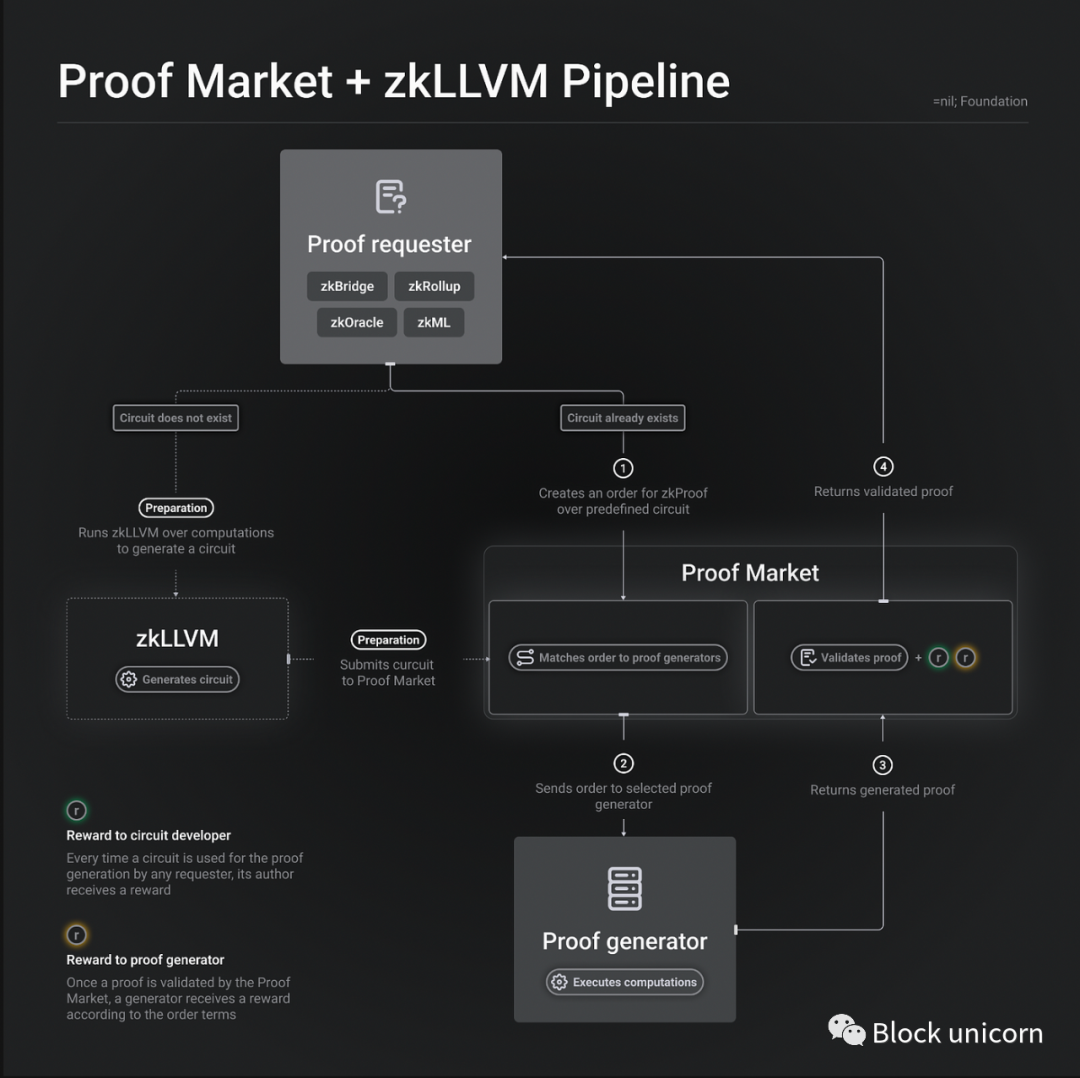

Custom Circuits vs. Zero-Knowledge Virtual Machines (zkVM)

Proof markets can provide custom circuits for each application, or they can provide a general-purpose zero-knowledge virtual machine. Custom circuits have higher integration and financial costs but can deliver better performance. The proof market, applications, or third-party developers can all build custom circuits, and in return for providing this service they can receive a portion of the network revenue, as is the case with =nil;.

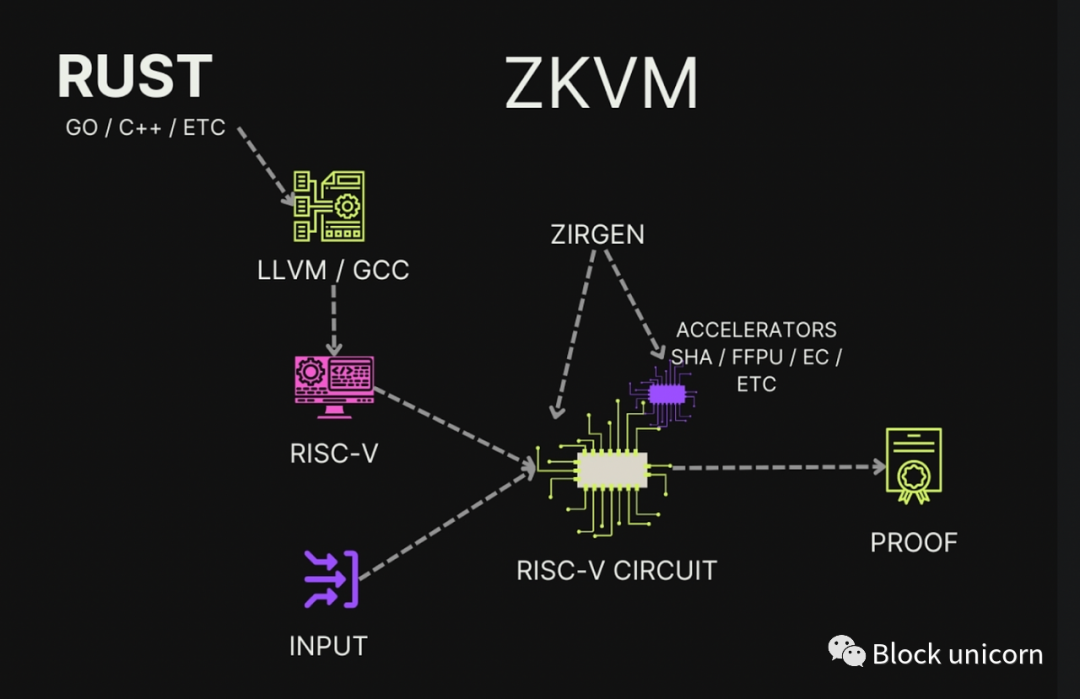

Although slower, STARK-based zero-knowledge virtual machines (zkVMs) such as RiscZero's RISC-V zkVM allow application developers to write verifiable programs in high-level languages like Rust or C++. zkVMs can support accelerators for common ZK-unfriendly operations (such as hashing and elliptic curve addition) to improve performance. A proof market with custom circuits may need a separate order book for each circuit, leading to fragmentation and specialization among provers, whereas a zkVM-based market can use a single order book to schedule and prioritize computation on the zkVM.

Single Proof vs. Aggregated Proof

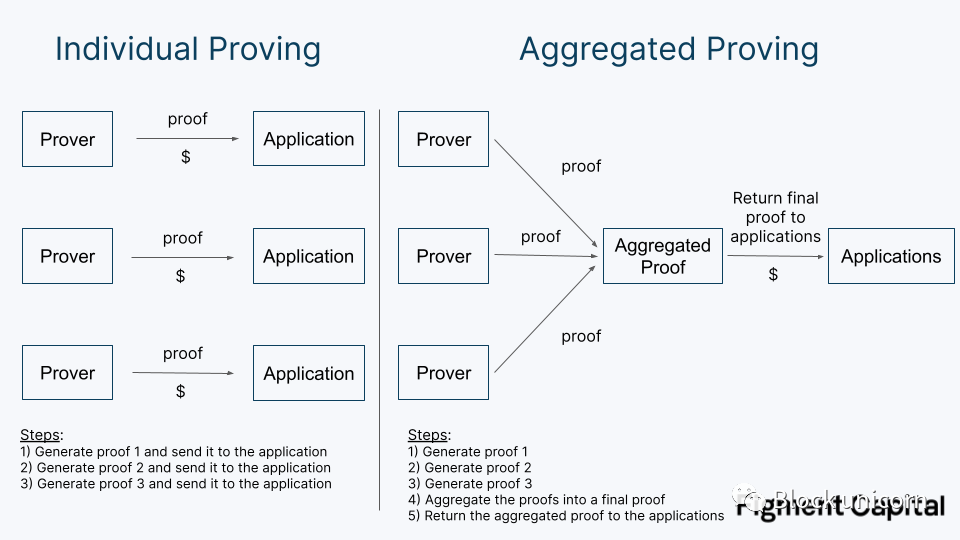

Once a proof is generated, it must be fed back to the application. For on-chain applications, this requires costly on-chain verification. Proof markets can either feed single proofs back to developers, or they can use aggregated proofs to convert multiple proofs into one before returning it, sharing the gas cost among them.

Aggregated proofs introduce additional latency for two reasons. Aggregating the proofs requires extra computation, and all of the proofs in a batch must be finished before aggregation can begin, which can delay the process.

Proof markets must decide how to balance latency and cost. Proofs can be returned quickly at a higher cost, or aggregated at a lower cost. We expect proof markets to require aggregated proofs, but aggregation times should shorten as the markets scale.
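The latency and cost trade-off can be put into rough numbers. The sketch below assumes, as a simplification, that verifying one aggregated proof on-chain costs the same gas as verifying one single proof, that proofs arrive at a steady rate, and that the time to compute the aggregation itself is ignored. The gas figure is a made-up placeholder, not a measured value.

```python
def gas_per_proof(verify_gas: int, batch_size: int) -> float:
    """Share of one on-chain verification borne by each proof in a batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return verify_gas / batch_size


def first_proof_wait(batch_size: int, proofs_per_second: float) -> float:
    """Seconds the first proof waits for the rest of its batch to arrive."""
    return (batch_size - 1) / proofs_per_second


VERIFY_GAS = 300_000  # hypothetical cost of one on-chain verification

single = gas_per_proof(VERIFY_GAS, batch_size=1)
batched = gas_per_proof(VERIFY_GAS, batch_size=10)
slow_market = first_proof_wait(batch_size=11, proofs_per_second=0.5)
busy_market = first_proof_wait(batch_size=11, proofs_per_second=5.0)
```

Under these assumptions, batching ten proofs cuts the per-proof gas share by a factor of ten, and a tenfold increase in proof throughput cuts the batching delay by the same factor. That matches the expectation that aggregation time shrinks as the market grows.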

Hardware Heterogeneity

Proving large computations is slow. So what happens when applications want compute-intensive proofs generated quickly? Provers can use more powerful hardware, such as FPGAs and ASICs, to accelerate proof generation. While this helps performance greatly, specialized hardware may shrink the set of possible operators and hinder decentralization. Proof markets need to decide what hardware their operators will run.

Block unicorn note: FPGAs (Field-Programmable Gate Arrays) are a type of specialized computing hardware that can be reprogrammed to perform specific digital computing tasks. This makes them particularly useful in applications that require certain types of calculations (such as encryption or image processing).

ASICs (Application-Specific Integrated Circuits) are another type of specialized hardware. These are designed to perform a specific task and are very efficient at doing so. For example, Bitcoin mining ASICs are specifically designed to perform the hash calculations required for Bitcoin mining. ASICs are typically very efficient, but their tradeoff is that they are not as flexible as FPGAs because they can only be used to do the task they were designed to do.

There is also the question of prover homogeneity: proof markets must decide whether all provers will use the same hardware or whether different setups will be supported. If all provers use easily obtainable hardware and compete on a level playing field, it may be easier for the market to stay decentralized. Given the novelty of zero-knowledge hardware and the market's need for performance, we expect proof markets to remain hardware-agnostic and let operators run any infrastructure they choose. However, more work is needed on how hardware diversity among provers affects prover centralization.

Operator Diversity

Developers must define the requirements for operators to enter and remain active market participants, which will affect operator diversity, including their size and geographic distribution. Some protocol-level considerations include:

- Do provers need to be whitelisted, or is the market permissionless?
- Will there be a cap on the number of provers that can participate?
- Do provers need to stake tokens to enter the network?
- Are there any minimum hardware or performance requirements?
- Will there be restrictions on the market share that an operator can hold? If so, how will this restriction be enforced?

Markets that specifically seek institutional-grade operators may have different entry requirements than those seeking retail participation. A proof market should define what a healthy operator mix looks like and work backward from it.

Discounts, Derivatives, and Order Types

Proof market prices may fluctuate during periods of high or low demand. Price volatility creates uncertainty, and applications need to predict future proof prices in order to pass these costs on to end users. A protocol does not want to charge a user a 0.01 USD transaction fee only to find that proving the transaction costs 0.10 USD. Layer 2s face a similar problem in passing future calldata costs on to users (calldata is transaction data posted to Ethereum, and its gas cost depends on its size). Some have proposed that layer 2s use block space futures to solve this problem: they could pre-purchase block space at a fixed price and in turn offer users more stable pricing.

The same demand exists in proof markets. Protocols like validity rollups may generate proofs at a fixed frequency. If a rollup needs to generate a proof once per hour for a year, can it submit this bid all at once instead of needing to submit a new bid on short notice, which can be vulnerable to price spikes? Ideally, they could pre-order proof capacity. If so, should proof futures be offered within the protocol, or should other protocols or centralized providers be allowed to create services on top of it?

What about discounts for large or predictable orders? If a protocol brings a significant amount of demand to the market, should it receive a discount, or must it pay the public market price?

Privacy

It is possible for the market to offer private computation, even though outsourcing proof generation privately is difficult. An application needs a secure channel to send private inputs to untrusted provers. Once the inputs are received, provers need a secure computation sandbox to generate proofs without leaking them; secure enclaves are a promising direction. In fact, Marlin has already experimented with private computation on Azure using Nvidia's A100 GPU through secure enclaves.

Progressive and Persistent Decentralization

Proof markets need to find the best way to decentralize progressively. How should the first batch of third-party provers enter the market? What are the specific steps towards decentralization?

A related issue is maintaining decentralization once it is achieved. One challenge proof markets face is malicious bidding by provers. A well-funded prover might choose to operate at a loss by bidding below the market price in order to squeeze out other operators, then expand and raise prices. Another form of malicious bidding is to operate a large number of nodes, all bidding at the market price, so that random selection assigns a disproportionate share of proof requests to this operator.
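The second attack is easy to quantify. The sketch below assumes that the matching engine picks uniformly at random among all nodes bidding at the market price; the node counts are made up for illustration.

```python
from fractions import Fraction


def expected_share(operator_nodes: int, other_nodes: int) -> Fraction:
    """Expected fraction of proof requests won by one operator.

    Assumes uniform random selection among all nodes that bid at the
    market price.
    """
    return Fraction(operator_nodes, operator_nodes + other_nodes)


honest = expected_share(operator_nodes=1, other_nodes=9)   # one node among ten
sybil = expected_share(operator_nodes=30, other_nodes=9)   # thirty nodes against nine
```

By running thirty nodes against nine independent ones, the operator's expected share rises from 1/10 to 10/13 of all requests. This is why a stake requirement or a cap on market share per operator may be needed alongside random selection.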

Conclusion

In addition to the considerations above, other decisions include how bids are submitted and whether proof generation can be split across multiple provers. Overall, proof markets have a huge design space that must be studied carefully in order to build efficient and decentralized markets. We look forward to working with leading teams in this area to identify the most promising approaches.

Operating Zero-Knowledge Infrastructure

So far, we have examined the design considerations for building decentralized proof networks and proof markets. In this section, we evaluate which operators are best suited to participate in proof networks and share some thoughts on the supply side of zero-knowledge proof generation.

Miners and Validators

There are two main types of infrastructure providers in today's blockchain ecosystem: miners and validators. Miners run nodes on proof-of-work networks like Bitcoin and compete to produce rare hashes. The more powerful their computers, and the more computers they have, the more likely they are to produce a rare hash and earn the block reward. Early Bitcoin miners mined on their home computers using CPUs, but as the network developed and block rewards became more valuable, mining operations professionalized. Nodes were aggregated to achieve economies of scale, and hardware setups became specialized over time. Today, miners almost exclusively use application-specific integrated circuits (ASICs) built for Bitcoin, running in data centers located near cheap energy sources.

The rise of proof-of-stake (PoS) necessitates a new type of node operator: the validator. Validators play a role similar to that of miners. They propose blocks, execute state transitions, and participate in consensus. However, they don’t seek to create as many hashes (hash power) as possible to increase the chances of creating a block, as Bitcoin miners do. Instead, validators are randomly selected to propose blocks based on the value of the assets they have staked. This change eliminates the need for energy-intensive equipment and specialized hardware in PoS, allowing more widely distributed node operators to run validators, which can even be run in the cloud.

The more subtle change introduced by PoS is that it turns blockchain infrastructure into a service business. In proof-of-work (PoW), miners operate in the background, largely invisible to users and investors (can you name a few Bitcoin miners?). They have only one customer, the network itself. In PoS, validators (such as Lido, Rocket) provide network security by staking the tokens they hold, but they also have another customer: the staker. Token holders seek out operators they can trust to run infrastructure on their behalf securely and reliably, earning staking rewards. Because the income of validators corresponds to the assets they can attract, they operate like a service company. Validators brand themselves, employ sales teams, and build relationships with individuals and institutions that can stake their tokens with them. This makes staking a very different business from mining. This important difference between the two businesses is part of why the largest PoW and PoS infrastructure providers are entirely different companies.

ZK Infrastructure Companies

Over the last year, many companies have emerged that specialize in hardware acceleration for zero-knowledge proofs (ZKPs). Some of these companies produce hardware to sell to operators; others run their own hardware and become new infrastructure providers. The best-known ZK hardware companies currently include Cysic, Ulvetanna, and Ingonyama. Cysic plans to build ASICs (application-specific integrated circuits) that can accelerate common ZKP operations while maintaining flexibility for future software innovations. Ulvetanna is building an FPGA (field-programmable gate array) cluster to serve applications that require particularly strong proving capabilities. Ingonyama is researching algorithmic improvements and building a CUDA library for ZK acceleration, with plans to ultimately design an ASIC.

Block unicorn’s note: CUDA library: CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) developed by NVIDIA for its graphics processing units (GPUs). A CUDA library is a set of precompiled programs based on CUDA that can execute parallel operations on NVIDIA GPUs to improve processing speed. Examples include libraries for linear algebra, Fourier transforms, and random number generation.

ASIC: ASIC stands for Application-Specific Integrated Circuit. It is a type of integrated circuit designed to meet specific application requirements. Unlike general-purpose processors such as central processing units (CPUs) that can perform various operations, ASICs have their specific tasks determined at the time of design. Therefore, ASICs usually achieve higher performance or higher energy efficiency in their specialized tasks.

Who will operate the ZK infrastructure? We believe that which companies perform well in operating ZK infrastructure will depend mainly on the prover incentive model and performance requirements. The market will be divided between staking companies and new ZK-native teams. For applications that require the highest-performance provers or extremely low latency, ZK-native teams that can win proof races will dominate. We expect such extreme requirements to be the exception rather than the norm. The rest of the market will be dominated by staking businesses.

Why are miners not suitable for operating ZK infrastructure? After all, ZK proving, especially for large circuits, has many similarities with mining. It requires a lot of energy and computing resources and may require specialized hardware. However, we do not believe that miners will be early leaders in the proving field.

First, proof-of-work (PoW) hardware cannot be effectively repurposed for proving. Bitcoin ASICs, by definition, cannot be reused for other tasks. GPUs commonly used for mining Ethereum before the Merge (such as Nvidia’s CMP HX) were designed specifically for mining, so they perform poorly on ZK workloads. Specifically, their data bandwidth is weak, so the parallelism that GPUs provide does not bring real benefits. Miners wishing to enter the proving business will have to accumulate ZK-capable hardware from scratch.

In addition, mining companies lack brand recognition and are at a disadvantage in stake-based proving. The biggest advantage of miners is their access to cheap energy, which allows them to charge lower fees or participate in proof markets more profitably, but this is unlikely to outweigh the challenges they face.

Finally, miners are accustomed to static requirements. Bitcoin and Ethereum mining have not frequently or significantly changed their hash functions, nor have they required operators to make other substantial modifications to their mining setups (the Merge aside). In contrast, ZK proving requires operators to stay alert to changes in proof technology, which may affect hardware setups and optimizations.

Stake-based proving models are a natural fit for validator firms. Individual and institutional investors in zero-knowledge applications will delegate their tokens to infrastructure providers to receive rewards. Staking businesses have existing teams, experience, and relationships that can attract a large amount of token delegation. Even for protocols that do not support delegated proof-of-stake (PoS), many validator firms offer white-label validator services to run infrastructure on behalf of others, which is a common practice on Ethereum.

Validators do not have access to cheap electricity the way miners do, which makes them ill-suited for the most energy-intensive tasks. The hardware setup required to run a stake-based prover effectively may be more complicated than for a regular validator, but it is likely to fit within validators’ current cloud or dedicated server infrastructure. However, like miners, these firms lack in-house ZK expertise and will find it challenging to remain competitive in proof races. Outside of stake-based proving, operating ZK infrastructure follows a different business model from operating validators and lacks the strong positive feedback effects of a staking business. We expect native ZK infrastructure providers to dominate non-stake-based, high-performance proving tasks.

Summary

Today, most provers are run by the teams building the applications that require them. As more ZK networks launch and decentralize, new operators will enter the market to meet proving needs. The identities of these operators will depend on the prover selection models and the proving requirements imposed by specific protocols.

Staking infrastructure companies and native ZK infrastructure operators are most likely to dominate this new market.

Decentralized proving is an exciting new field in blockchain infrastructure. If you are building applications or infrastructure in the ZK field, we would love to hear your thoughts and suggestions.
