Exploring ZK/Optimistic Hybrid Rollup

Author: kelvinfichter; Translator: MarsBit, MK

I’ve recently come to believe that the future of Ethereum rollups is a hybrid of the two main approaches (ZK and Optimistic). In this article, I’ll try to explain the basic architecture I have in mind and why I think it is the best way forward.

I don’t intend to spend much time on the basics of ZK or Optimistic Rollups. This article assumes you already have a good understanding of how they work. You don’t need to be an expert, but you should at least know what they are and how they work at a high level. If I tried to explain Rollups here, this article would be very, very long. Anyway, enjoy!

Starting with an Optimistic Rollup

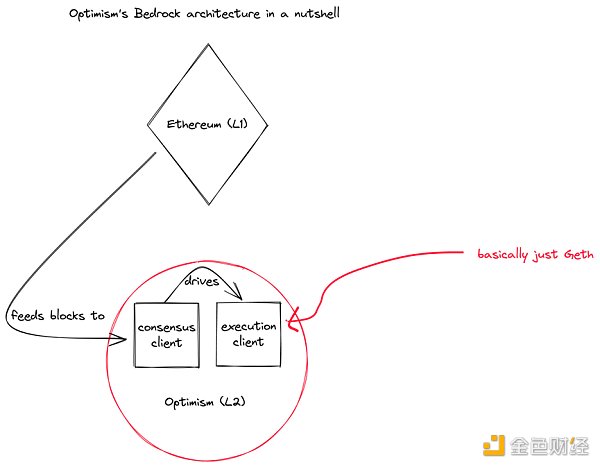

The hybrid ZK/Optimistic Rollup starts life as an Optimistic Rollup, very similar to Optimism’s Bedrock architecture. Bedrock aims to be as compatible as possible with Ethereum (“EVM Equivalent”) and achieves this by running an execution client that is almost identical to a normal Ethereum client. Bedrock uses Ethereum’s upcoming consensus/execution client separation model, which drastically reduces the diff against the EVM (some changes will always be necessary, but they are manageable). As of this writing, the Bedrock diff against Geth is a single +388 -30 commit.

Like any good Rollup, Optimism obtains block/transaction data from Ethereum, sorts this data in a deterministic way within the consensus client, and then inputs this data into the L2 execution client for execution. This architecture solves the first half of the “ideal Rollup” puzzle and provides us with an EVM Equivalent L2.
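To make the consensus/execution split concrete, here is a toy Python sketch of the idea that L2 state is a pure function of L1 data. It assumes a hypothetical, heavily simplified format: `L1Block`, `derive_l2_txs`, `execute` and the `"account:+amount"` transaction strings are illustrative names only, and the real Bedrock derivation pipeline is far more involved.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class L1Block:
    number: int
    # Hypothetical batch format: (log_index, raw_tx) pairs posted to L1.
    batch_txs: Tuple[Tuple[int, str], ...]


def derive_l2_txs(l1_blocks: List[L1Block]) -> List[str]:
    """Consensus-client role (toy): order L1 data deterministically."""
    ordered: List[str] = []
    # Blocks may arrive locally in any order; sort by L1 block number.
    for block in sorted(l1_blocks, key=lambda b: b.number):
        # Within a block, order by log index.
        for _, tx in sorted(block.batch_txs):
            ordered.append(tx)
    return ordered


def execute(txs: List[str]) -> Dict[str, int]:
    """Execution-client role (toy): apply 'account:+amount' balance changes."""
    state: Dict[str, int] = {}
    for tx in txs:
        account, delta = tx.split(":")
        state[account] = state.get(account, 0) + int(delta)
    return state
```

Because ordering happens only in the derivation step, any two nodes that read the same L1 blocks end up with the same L2 state, regardless of the order in which those blocks reached them.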

Of course, we now need to solve the problem of how to tell Ethereum what is happening inside Optimism in a verifiable way. Without this feature, contracts cannot make decisions based on Optimism’s state. This would mean that users could deposit into Optimism, but could never withdraw their assets. While in some cases one-way Rollups may actually be useful, bidirectional Rollups are more useful in general.

We can tell Ethereum the state of any Rollup by providing a commitment to that state along with a proof that the commitment was generated correctly. In other words, we are proving that the “Rollup program” was executed correctly. The only real difference between ZK and Optimistic Rollups is the form of this proof. In a ZK Rollup, you provide an explicit ZK proof that the program was executed correctly. In an Optimistic Rollup, you make a commitment without an explicit proof. Other users can challenge your commitment and force you into a back-and-forth game that eventually determines who is correct.
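The contrast between the two proof forms can be sketched as two acceptance rules over the same commitment. This is a simplified Python illustration under stated assumptions: the commitment is a SHA-256 hash of a canonical JSON encoding (production systems commit to a Merkle root instead), `verify` is a placeholder for a real ZK verifier, and `Proposal`, `accept_optimistic` and `accept_zk` are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Callable

# The 7-day withdrawal/challenge period discussed in this article, in seconds.
CHALLENGE_WINDOW = 7 * 24 * 60 * 60


def commit(state: dict) -> str:
    """Toy state commitment: hash of a canonical (key-sorted) encoding."""
    encoded = json.dumps(state, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class Proposal:
    commitment: str
    proposed_at: int  # timestamp in seconds
    successfully_challenged: bool = False


def accept_optimistic(p: Proposal, now: int) -> bool:
    """No explicit proof: accepted once the window passes without a successful challenge."""
    return not p.successfully_challenged and now - p.proposed_at >= CHALLENGE_WINDOW


def accept_zk(p: Proposal, proof: bytes, verify: Callable[[str, bytes], bool]) -> bool:
    """Explicit proof: accepted as soon as the (placeholder) verifier accepts it."""
    return verify(p.commitment, proof)
```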

I won’t go into much detail about the Optimistic Rollup challenge game. It’s worth noting, though, that the state of the art is to compile your program (in Optimism’s case, Geth’s EVM plus some extra bits around the edges) to a simple machine architecture such as MIPS. We do this because we need to build an on-chain interpreter for our program, and building a MIPS interpreter is much easier than building an EVM interpreter. The EVM is also a moving target (we have regular upgrade forks), and it doesn’t fully cover the program we want to prove (there are some non-EVM parts in it too).
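To give a feel for why a MIPS interpreter is simple, here is a minimal Python sketch of a single-step interpreter for three real MIPS32 instructions (ADDU, ADDIU, BEQ). It is illustrative only: it ignores branch delay slots, memory, and every other instruction, and the `step` function is a hypothetical name rather than part of Optimism’s on-chain code.

```python
MASK32 = 0xFFFFFFFF  # registers and the PC are 32-bit


def sign_extend16(imm: int) -> int:
    """Interpret a 16-bit immediate as a signed integer."""
    return imm - 0x10000 if imm & 0x8000 else imm


def step(pc: int, regs: list[int], instr: int) -> tuple[int, list[int]]:
    """Execute one 32-bit MIPS instruction and return (next_pc, new_regs).

    Supports only ADDU, ADDIU and BEQ, and ignores branch delay slots.
    """
    opcode = instr >> 26
    rs = (instr >> 21) & 0x1F
    rt = (instr >> 16) & 0x1F
    imm = instr & 0xFFFF
    regs = list(regs)  # never mutate the caller's pre-state
    next_pc = (pc + 4) & MASK32

    if opcode == 0x00:  # R-type: the operation is selected by the funct field
        rd = (instr >> 11) & 0x1F
        funct = instr & 0x3F
        if funct == 0x21:  # ADDU rd, rs, rt
            regs[rd] = (regs[rs] + regs[rt]) & MASK32
        else:
            raise ValueError(f"unsupported funct {funct:#x}")
    elif opcode == 0x09:  # ADDIU rt, rs, imm
        regs[rt] = (regs[rs] + sign_extend16(imm)) & MASK32
    elif opcode == 0x04:  # BEQ rs, rt, offset (offset counted in words)
        if regs[rs] == regs[rt]:
            next_pc = (next_pc + (sign_extend16(imm) << 2)) & MASK32
    else:
        raise ValueError(f"unsupported opcode {opcode:#x}")

    regs[0] = 0  # $zero is hard-wired to 0
    return next_pc, regs
```

For example, `step(0, [0] * 32, 0x24080005)` executes `addiu $t0, $zero, 5` and returns PC 4 with register 8 set to 5. A complete interpreter needs more opcodes plus memory and syscall handling, but each instruction stays a few lines of bit manipulation, whereas the EVM has a far larger, gas-metered instruction set that changes with each fork.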

Once you have built an on-chain interpreter for your simple machine architecture and created some off-chain tools, you should have a fully functional Optimistic Rollup.
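The on-chain interpreter only ever has to run a single step because the challenge game first narrows the dispute down. The Python sketch below shows that narrowing as a bisection over two execution traces. It assumes, for simplicity, that both full traces are available as lists of integer states; in a real game the parties reveal one midpoint commitment per round. `find_divergence`, `resolve` and `step_fn` are hypothetical names.

```python
from typing import Callable, List


def find_divergence(proposer: List[int], challenger: List[int]) -> int:
    """Binary-search for i such that both traces agree on state i but not on state i + 1.

    Invariant: the traces agree at index lo and disagree at index hi.
    """
    if proposer[0] != challenger[0] or proposer[-1] == challenger[-1]:
        raise ValueError("parties must agree on the start state and disagree on the end state")
    lo, hi = 0, len(proposer) - 1
    while hi - lo > 1:  # each iteration corresponds to one round of the game
        mid = (lo + hi) // 2
        if proposer[mid] == challenger[mid]:
            lo = mid
        else:
            hi = mid
    return lo


def resolve(step_fn: Callable[[int, int], int], proposer: List[int], challenger: List[int]) -> str:
    """Re-execute only the disputed step 'on chain' and report whose claim matches."""
    i = find_divergence(proposer, challenger)
    expected = step_fn(proposer[i], i)  # proposer[i] == challenger[i] at this point
    if proposer[i + 1] == expected:
        return "proposer"
    if challenger[i + 1] == expected:
        return "challenger"
    return "neither"
```

The number of rounds grows only logarithmically with trace length, which is what makes multi-step fault proofs practical even for very long executions.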

Converting to a ZK Rollup

Overall, I think Optimistic Rollups will dominate for at least the next few years. Some people think ZK Rollups will eventually overtake Optimistic Rollups, but I think this may be wrong. I think the relative simplicity and flexibility of today’s Optimistic Rollups means they can evolve into ZK Rollups over time. If we can find a model that makes this possible, there is really no strong incentive to deploy to a less flexible and more fragile ZK system when you can simply deploy to an existing Optimistic system and call it a day.

Therefore, my goal is to create an architecture and migration path that lets existing modern Optimistic systems (such as Bedrock) transition seamlessly into ZK systems. Here is an approach that I think is not only achievable but can be achieved in a way that surpasses current zkEVM approaches.

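We start with the Bedrock-style architecture I described above. Note that I (briefly) explained how Bedrock has a challenge game that can assert the correct execution of the L2 program (the EVM plus some extra stuff) compiled to MIPS. To turn this into a ZK Rollup, we prove that same MIPS program with a zkMIPS circuit instead of relying only on the challenge game. The main obstacle is proof time.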

However, reducing proof time is a well-understood problem firmly rooted in the Web2 world. Since the MIPS machine architecture won’t change anytime soon, we can heavily optimize our circuits and provers without worrying about later EVM changes. I am very confident that the pool of hardware engineers who can optimize a well-defined program is at least 10 times (if not 100 times) larger than the pool of specialists who can build and audit a constantly changing zkEVM target. Companies like Netflix probably have plenty of hardware engineers working on optimizing transcoding chips, and many of them would be happy to take a pile of venture capital money to tackle an interesting ZK challenge.

The initial proof time for this circuit may exceed the 7-day Optimistic Rollup withdrawal period. Over time, though, proof time will only go down. Introducing ASICs and FPGAs can speed up proving dramatically, and with a static target we can build an ever more optimized prover.

Eventually, the proof time for this circuit will drop below the current 7-day Optimistic Rollup withdrawal period, and we can start thinking about removing the Optimistic challenge process. Running a prover for 7 days may still be too expensive, so we might want to wait a bit longer, but the point stands. You can even run both proof systems at the same time, so we can start using ZK proofs immediately, and if the prover fails for some reason, we can fall back to the Optimistic proof. When ready, switching entirely to ZK proofs is easy and fully transparent to applications. No other system offers this kind of flexibility and smooth migration path.
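Running both proof systems side by side can be expressed as one finality rule that applications query without caring which path succeeded. The Python sketch below is a hypothetical illustration: `Output`, `is_finalized` and the boolean flags (standing in for a verified ZK proof and a won fault-proof challenge) are assumptions, not part of any existing contract.

```python
from dataclasses import dataclass

# The 7-day Optimistic challenge window, in seconds.
CHALLENGE_WINDOW = 7 * 24 * 60 * 60


@dataclass
class Output:
    root: str
    proposed_at: int                       # timestamp in seconds
    zk_proof_valid: bool = False           # set once a ZK proof for this root verifies
    challenged_successfully: bool = False  # set if the fault-proof game rejects the root


def is_finalized(out: Output, now: int) -> bool:
    """Hybrid finality: ZK path if a proof verified, otherwise the Optimistic fallback."""
    if out.zk_proof_valid:
        return True  # final as soon as the proof verifies, no waiting period
    if out.challenged_successfully:
        return False
    # Fallback: plain Optimistic finality once the challenge window has elapsed.
    return now - out.proposed_at >= CHALLENGE_WINDOW
```

Applications (for example, a withdrawal contract) only call `is_finalized`, so dropping the Optimistic fallback later changes nothing on their side.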

Other important considerations

Running a blockchain is a difficult task that involves far more than writing a lot of backend code. Much of our work at Optimism focuses on improving the user and developer experience through useful client-side tooling. We have also spent a lot of time and energy on the “soft” side: talking to projects, understanding their pain points, and designing incentives. The more time you spend on chain software, the less time you have to think about these other things. You can always try to hire more people, but organizations don’t scale linearly, and each new hire adds to the burden of internal communication.

Since ZK circuit work can be added to an existing running chain, you can build the core platform and proof software in parallel. Moreover, since clients can be modified without changing the circuit, you can separate your client and proof teams. An Optimistic Rollup that takes this approach may be years ahead of ZK competitors in actual chain activity.
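One way to picture why the client can change without touching the circuit: a zkMIPS circuit proves “some MIPS program ran correctly”, and which program counts as the L2 client can be pinned separately. The Python sketch below assumes a hypothetical L1 verifier that stores the hash of the accepted client binary; `Claim`, `Verifier`, `upgrade_client` and the `proof_ok` flag (a stand-in for a real zkMIPS proof check) are illustrative names, not an existing API.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    program_hash: str  # hash of the MIPS binary, i.e. the compiled L2 client
    pre_root: str
    post_root: str


class Verifier:
    """Hypothetical L1 verifier: the zkMIPS circuit and its verification logic never change."""

    def __init__(self, program_hash: str):
        self.program_hash = program_hash

    def upgrade_client(self, new_binary: bytes) -> None:
        # A client upgrade only changes which program the proofs must be about.
        self.program_hash = hashlib.sha256(new_binary).hexdigest()

    def accepts(self, claim: Claim, proof_ok: bool) -> bool:
        # proof_ok stands in for verifying a zkMIPS proof of the claimed transition.
        return proof_ok and claim.program_hash == self.program_hash
```

Under this assumption the client team ships a new binary and updates one hash, while the proof team keeps optimizing the same fixed circuit.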

Some Conclusions

To be perfectly frank, I can’t see any significant downsides to this approach, assuming the zkMIPS prover can be optimized significantly over time. The only practical impact on applications I can see is that gas costs for different opcodes may need to be adjusted to reflect the proof time those opcodes add. If it really isn’t possible to optimize this prover to a reasonable level, I’ll admit defeat. If it is possible, then the zkMIPS/zkVM approach seems vastly superior to the current zkEVM approach, to the point where it may make the latter completely obsolete. That may sound like a radical statement, but not long ago, single-step optimistic fault proofs were made entirely obsolete by multi-step proofs.
