Top venture capitalists: The biggest risk of AI is not pursuing it with maximum effort

Author: Wu Xin

Recently, Marc Andreessen published a manifesto on his firm’s website, “Why AI Will Save the World”, boldly questioning the legitimacy of calls to regulate AI and systematically criticizing the arguments on which those calls rest.

Drawing on Andreessen’s past interviews and articles, and on the views of economists and politicians he admires, this article analyzes and interprets the essay’s core content in five parts. It aims to help readers understand why he believes optimism is always the safest choice, and to see the risks inherent in pessimism and skepticism. To many, these views may seem crazy and hard to entertain. But as Marc Andreessen has said, through debate you can build a rough model of how others think and consider problems from their perspective, which makes your own thinking more objective and neutral.

Pessimism is popular in almost every society. After ChatGPT swept the world, OpenAI CEO Sam Altman appeared at a hearing in the US Congress and called for the regulation of AI.

He subsequently signed a statement on AI risk alongside Stuart Russell, Geoffrey Hinton, Yoshua Bengio, and others.

Musk, too, had put his name to an open letter signed by thousands of people, calling on AI laboratories to immediately pause training of the most powerful systems. At moments like this, Marc Andreessen, who helms the top venture capital firm a16z, reliably stands up as the “dissident” cheerleader.

“There is a paradox at the core of American culture: in theory we like change, but when change actually arrives, it meets a great deal of pushback. I am very optimistic, especially when it comes to new ideas.” Marc Andreessen thinks he may be the most optimistic person he knows. “At least in the past 20 years, if you bet on the optimists, you were generally right.”

He has good reason to think so. In 1994, Marc Andreessen arrived in Silicon Valley, co-founded Netscape, and took it public in record time.

Andreessen later appeared on the cover of Time magazine, sitting barefoot on a throne, and became a model of Silicon Valley’s technology-wealth mythology, attracting countless followers. To some extent, he is the optimist described by British quantum physicist David Deutsch in “The Beginning of Infinity”: someone who hopes to achieve progress by creating knowledge, and who accepts the unforeseeable consequences that such progress brings. Pessimists are different. They take pride when their children conform to proper patterns of behavior and lament every novelty, real or imagined. Pessimism tries to avoid anything that is not confirmed to be safe.

Very few civilizations have managed to survive by being more cautious about innovation. As David Deutsch wrote in “The Beginning of Infinity”, most civilizations that were destroyed had in fact enthusiastically applied the precautionary principle (avoiding anything not confirmed to be safe in order to avert disaster). A century in which everything stays stable and unchanged seems never to have actually happened. “Skeptics are always wrong,” Marc Andreessen has also said.

01. AI Regulation: Who Benefits? Who Is Harmed?

Marc Andreessen calls himself an “AI accelerationist.” As someone who hopes to speed up AI-driven social processes, overcome resistance, and bring about sweeping social change, he is naturally skeptical of calls for regulation. “There is a view that government regulation is well-intentioned, benign, and properly implemented. This is a myth.” Andreessen has long believed that one of the ills of the American system is overregulation: the government keeps enacting laws and rules, producing statutes such as “no alcohol sales on Sundays” and “men are not allowed to eat pickles on Tuesdays.”

Regulatory economist Bruce Yandle proposed a concept in 1983 to explain the problem with government regulation: the “Bootleggers and Baptists” theory. Yandle argues that Prohibition passed not only because of pressure from Baptists (whose religious convictions led them to believe alcohol harms society) but also because of quiet backing from bootleggers. Bootleggers wanted tighter regulation because it removed competition from legal merchants: since consumers could not buy alcohol legally under Prohibition, they naturally turned to bootleggers.

The theory holds that the Baptists supply the moral cover for regulation (so the government need not find a noble rationale of its own) while the bootleggers lobby politicians behind the scenes out of self-interest, and that this alliance makes it easier for politicians to side with both groups. It also holds that the alliance yields suboptimal legislation: both groups are satisfied with the result, but society as a whole might be better off with no legislation or with different legislation. Andreessen borrows this theory to explain why well-intentioned regulation so often ends badly.

“This type of reform movement often ends with the bootleggers getting what they want (regulatory capture, insulation from competition, the formation of a cartel) while the well-intentioned Baptists are left wondering where their drive for social progress went wrong,” he wrote in his recent essay, Why AI Will Save the World.

In the field of artificial intelligence, the “Baptists” are those who truly believe AI will destroy humanity; some of these true believers are even innovators of the technology. They actively advocate all manner of strange and extreme restrictions on AI. The “bootleggers” are AI companies, along with people paid by universities, think tanks, activist groups, and media organizations to attack AI and stoke panic (on the surface they look like “Baptists”). Examples include “AI safety experts,” “AI ethicists,” and “AI risk researchers” who are hired to issue doomsday prophecies.

“In practice, even when the Baptists are sincere, they are manipulated by the bootleggers and used as cover for the bootleggers’ own interests,” Marc Andreessen wrote in the essay. “This is what is happening in the current AI regulatory process.” “If regulatory barriers are erected around AI risk, these bootleggers will get what they want: a government-backed cartel of AI suppliers, shielded from new start-ups and open-source competition.”

02. The unemployment argument and the “fixed pie fallacy”

Wary of regulation as he is, Marc Andreessen naturally rejects many of the arguments put forward in its favor. But he does not deny that some topics are worth discussing: whether technology is devouring all jobs, whether it drives income inequality, and whether it will upend human society.

The belief that market activity is a zero-sum game is a common economic fallacy. It assumes there is a fixed pie, so that one side can gain only at the other’s expense. The claim that automation causes unemployment is one such “fixed pie fallacy.” As Andreessen put it in the essay, the fallacy assumes that “at any given time there is a fixed amount of labor to be done, and either machines do it or humans do it. If machines do it, humans will be out of work.”

But this is not the case. Suppose the owner of a clothing factory buys a large number of machines. The machines themselves take labor to produce, creating jobs that did not exist before. Once the machines have paid for themselves, their cost advantage earns the owner excess profits. That money can be spent in many ways: expanding the factory, investing in the supply chain, or buying houses and luxury goods. However it is spent, it creates employment in other industries.

Of course, the factory’s cost advantage will not last forever. To compete, rivals will start buying machines too (giving the workers who build the machines even more work). As more coats are produced and prices fall, the factory is no longer as profitable as it was. As more people can afford the cheaper coats, consumption grows, and the clothing industry as a whole hires more people, possibly more than before the machines arrived. It is also possible that once coats are cheap enough, consumers will spend the money they save on other things, raising employment in other industries.
“When technology is applied to production, productivity rises: inputs fall and output grows. The result is lower prices for goods and services, which leaves us with extra spending power to buy other things. This increases demand, spurs new products and new industries, and creates new jobs for the people machines have replaced,” Marc Andreessen wrote. “When a market economy is working normally and technology is introduced freely, this is a perpetual upward cycle. A larger economy emerges, with greater material prosperity and more industries, products, and jobs.”

What would it mean if all existing human labor were replaced by machines? “Productivity growth would be unprecedented, the prices of existing goods and services would fall to nearly free, consumers’ purchasing power would soar, and new demand would explode. Entrepreneurs would create dazzling new industries, products, and services, and hire as many AI and human workers as they could to meet all that new demand. Suppose AI then replaces human labor once again: the cycle would repeat, driving economic growth, lifting employment to new heights, and leading to a material utopia that Adam Smith could never have imagined.”

Human needs are endless, and technological evolution is the process of continually defining and satisfying them.

Each new wave of productivity takes a different form, says economist Carlota Perez, who studies technological change and financial bubbles, but that does not necessarily mean there will be less work overall; rather, the definition of work changes. Conversely, if we insist on logical consistency, we should not only regard every new technological advance as a disaster but also be equally horrified by every past one. If you think machines are the enemy, then you should roll things back, right? Follow that logic and we end up where everything began: subsistence farming. Wouldn’t it be better to make your own clothes as well?

03. Who is causing inequality?

Besides the claim that machines cause unemployment, the other argument people make for regulating AI is that technology breeds social injustice. “Suppose AI really does take all the jobs, good and bad alike. The result would be severe wealth inequality, with the owners of AI reaping all the economic rewards while ordinary people get nothing” is how Marc Andreessen summarizes the worry.

His answer is simple. Would Musk be richer if he sold cars only to the rich? Would he be richer if he made cars only for himself? Of course not. He maximizes his profit by selling cars to the largest possible market, the whole world. The makers of electricity, radio, computers, the internet, mobile phones, and search engines all actively drove prices down until everyone could afford them. Likewise, we can now use the most advanced generative AI, such as New Bing and Google Bard, for free or at low cost. That is not because these companies are stupid or generous, but because they are greedy: they want to expand the market and make more money. So rather than concentrating wealth, technology ends up empowering the people who use it, and they capture most of the value.

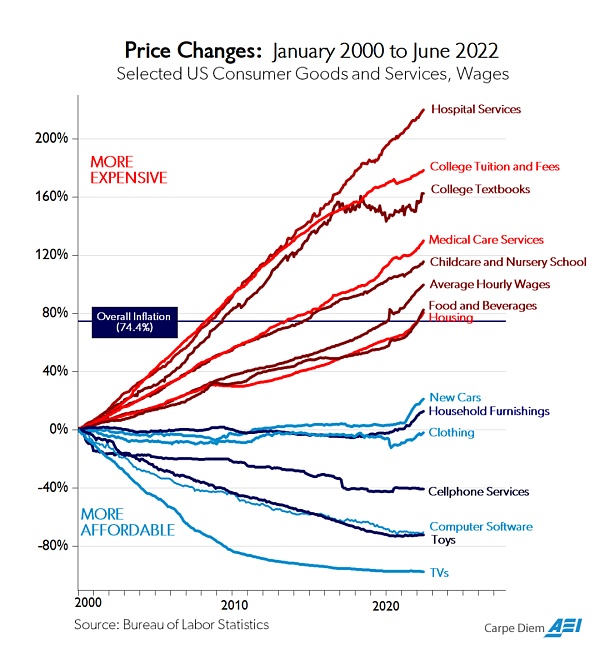

Inequality is indeed a major social problem, but it is driven not by technology but by our unwillingness to use technology such as AI to reduce it. The economic sectors where adopting AI meets the greatest resistance are housing, education, and healthcare, Marc Andreessen wrote in March of this year in a blog post, Why AI Won’t Cause Unemployment.

The chart shows price changes across several major economic sectors, adjusted for inflation. The blue curves represent industries that allow technological innovation to improve quality and lower prices, such as consumer electronics, automobiles, and home furnishings. The red curves represent industries that do not allow technological innovation in (and so do not see prices fall). You can see that the prices of education, healthcare, and housing are going to the moon.

“The red industries are subject to strict government regulation and self-regulation. These industries are monopolies, oligopolies, and cartels, with every obstacle to change you can imagine: formal government regulation and regulatory capture, price fixing, Soviet-style pricing, professional licensing, and so on. Technological innovation in these sectors is now all but banned.”

We are heading into a divided world in which a flat-screen TV that covers an entire wall costs only $100 and a four-year college degree costs $1 million. So what happens over time? Prices of regulated, non-technological products rise; prices of less regulated, technology-driven products fall. The former swell as a share of the economy while the latter shrink. In the extreme case, 99% of the economy ends up in the regulated, non-technological sectors, and that is the direction we are moving in.

04. Speech regulation and the slippery slope

The fear that “the technology we create will eventually rise up and destroy us” is deeply rooted in our culture. Whether this fear has any rational basis, and how far it can be told apart from a cult, remain open questions. Marc Andreessen attributes it to a category error: treating things of one kind as if they belonged to another. “AI is a machine, just like your toaster. It won’t come to life,” he wrote.

However, “if killer robots don’t get us, hate speech and misinformation will.” The “ghosts” that drove calls to regulate social media are still roaming in the AI era. Every country, including the United States, designates certain content on social platforms as illegal, such as child pornography and incitement to real-world violence. Any technology platform that hosts or generates content will therefore be subject to some restrictions. Supporters of regulation argue that generative AI should produce speech and ideas that benefit society and be barred from producing speech and ideas that harm it.

Andreessen warns that “the slippery slope is inevitable.” A slippery slope means that once something bad begins, it is likely to get worse, and if it is not stopped it will keep escalating, with unimaginable consequences. “Once a framework is in place for restricting even extremely bad content, such as hate speech, government agencies, activist pressure groups, and non-governmental entities will immediately push for ever greater censorship and suppression of whatever speech they consider a threat to society and/or to their personal preferences, even in nakedly criminal ways,” he wrote. This has been happening on social media for 10 years and is still heating up.

In March of last year, the New York Times editorial board published an article titled America Has a Free Speech Problem. According to a poll conducted by Times Opinion and Siena College, 46% of respondents said they felt less free to talk about politics than they did ten years ago. Another 30% said they felt about the same. Only 21% said they felt freer, even though social media has dramatically expanded the range of voices in the public square over the past decade. “When social norms around acceptable speech are constantly shifting, and when there is no clear definition of harm, these restrictions on speech can become arbitrary rules with disproportionate consequences,” the board wrote. It added that conservatives have used the idea of harmful speech to serve their own purposes.

For Andreessen, waking up every morning to find dozens of people on Twitter explaining in detail why he is an idiot is actually quite helpful: by arguing with people, you can build a rough model of how others think and consider problems from their perspective, and your thinking becomes more objective and neutral. He points out that the people who insist generative AI must be aligned with human values are only a tiny fraction of the world’s population: “this is a feature of the coastal elites in the United States, including many who work and write in the technology industry.”

“If you oppose the constant tightening of speech codes to impose a niche morality on social media and AI, then you should also realize that the fight over what AI is allowed to say and produce will matter more than the fight over social media censorship. Artificial intelligence is likely to become the control layer for everything in the world. How it is allowed to operate may matter more than anything else. You should realize that a small, isolated group of social engineers is trying to decide how AI operates according to their own moral judgments, under the age-old pretext of protecting you.”

05. The most real and terrible risk

If these fears and concerns are not the real risks, then what is the biggest risk of AI? In his view, there is one ultimate and genuine risk, perhaps the greatest and most terrible of all: that the United States fails to win global dominance in artificial intelligence. Therefore, “we should drive artificial intelligence into our economy and society as quickly and as hard as we can, to maximize its benefits for economic productivity and human potential.”

At the end of the essay, he proposes several simple plans.

1. Large AI companies should be allowed to develop artificial intelligence as quickly and aggressively as they can, but they should not be allowed to achieve regulatory capture, nor to establish a government-protected cartel insulated from market competition by making false claims about AI risk. This will maximize the technological and social payoff from the stunning capabilities of these companies, which are gems of modern capitalism.

2. AI start-ups should be allowed to develop artificial intelligence as quickly and aggressively as they can. They should be allowed to compete. Even if start-ups do not succeed, their presence in the market will keep spurring large companies to do their best. Either way, our economy and society win.

3. Open-source AI should be allowed to spread freely and to compete with both large AI companies and start-ups. Under no circumstances should there be regulatory barriers to open source. Even if open source does not beat the companies, its wide availability is a boon to students around the world who want to learn how to build and use artificial intelligence as part of the technology of the future, and it will ensure that everyone can use AI, no matter who they are or how much money they have.

4. Governments should work with the private sector to use artificial intelligence to maximize society’s defensive capabilities in every area of potential risk. Artificial intelligence can be a powerful tool for solving problems, and we should embrace it.
