AI Revolution in Chain Games (Part 3): Electronic Games, the Hidden Driver of Technological Development

When many readers see this title, they may grin and say, “Guage, you can’t elevate electronic games to such a high level just because you like playing them.” I’m not kidding. Let’s talk about why games have driven the world’s technological development for the past 50 years.

01 Jobs and Pac-Man

Guaguas who work in the electronics industry will know that the integrated circuit industry took off in the 1970s, when MOS Technology introduced the 6502 processor, designed by a team of former Motorola engineers. It offered excellent value for money: although it was only an 8-bit processor, it could already perform complex operations, and the price was very affordable. In other words, if you built an electronic product around the 6502, you could sell it to the working class.

Whether a new technology can be turned into a product that the general public can afford and wants to buy is an important test of whether a new industry can sweep the world. It comes down to three elements:

- The technology must be new;

- The technology can already be turned into a product;

- The product is cheap enough.

Look back at the epoch-making technology products of recent decades: home computers, the Internet, mobile phones, the mobile Internet, and so on. Don’t they all fit these three elements?

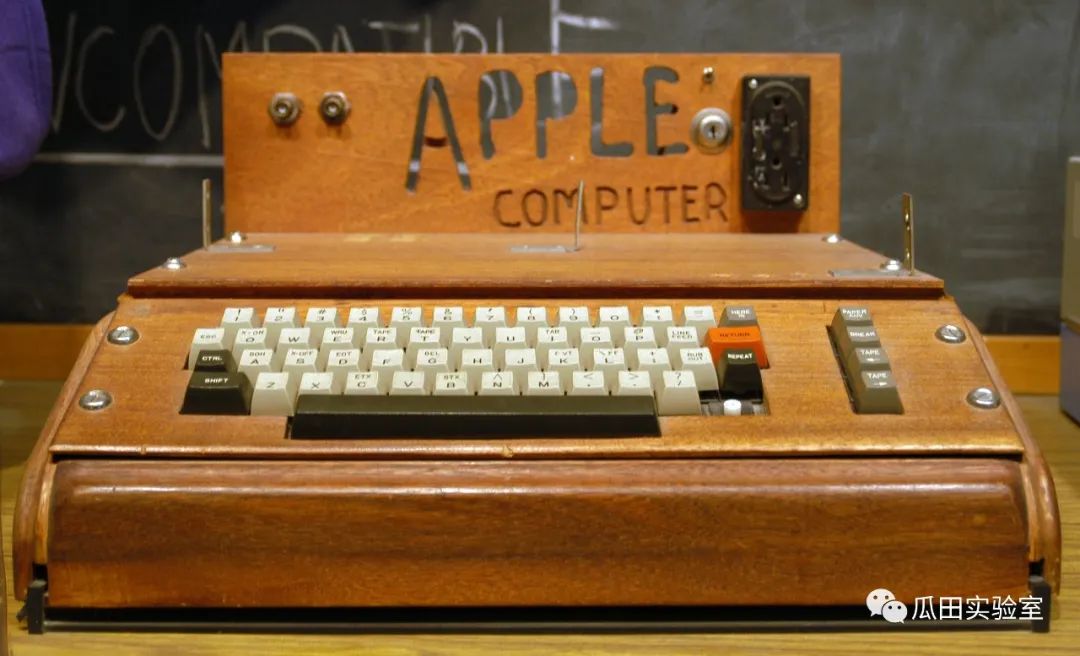

In the year this cost-effective 8-bit processor was born, one company and one person each keenly spotted the huge business opportunity hidden in it. Each chose a direction, and both flourished. That person found a good buddy and assembled the ugly electronic device below:

By the way, this is the prototype of the Apple I computer. That person was Steve Jobs, one of the founders of Apple, and his good buddy was Steve Wozniak. One of the world’s first home computers was born like this, an ugly duckling that could sell for $500 each (according to conservative data from the US Bureau of Labor Statistics, the purchasing power of the dollar in 1975 was about six times that of today, so it was equivalent to $3,000 today, which is a sky-high price). Even more incredible, 48 years after the first Apple computer was born, the company’s market value reached $2.7 trillion. If Apple’s market value were ranked against national GDPs, it would place fifth in the world, higher than the United Kingdom and lower than Germany, Japan, China, and the United States. Jobs established a new technology kingdom.

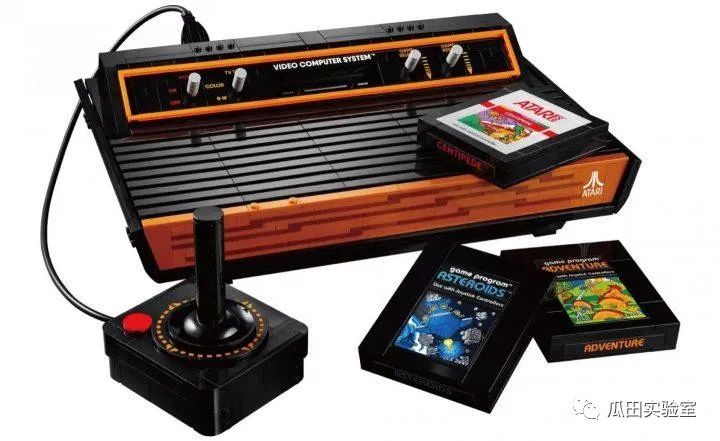

Wait, what does this have to do with games? Don’t worry. The first direction, just mentioned, was the home personal computer. Now for the second direction: a company called Atari built a chip from the 6502 family (the 6507) into a gaming device and, after two years of research and development, launched the Atari 2600 in 1977, the console that brought cartridge-based gaming into the home.

This game console had an annual revenue of $2 billion in 1980 (equivalent to $12 billion today). Today’s players have no feel for Atari’s glory, but the “one platform, multiple games” model it created is still in use: the console company develops the hardware, and the games on the cartridges can be handed over to game studios. The final challenge in the movie “Ready Player One”, played on the ice, takes place on an Atari 2600 (the game there is “Adventure”), and “Pac-Man” was likewise a huge seller on the console.

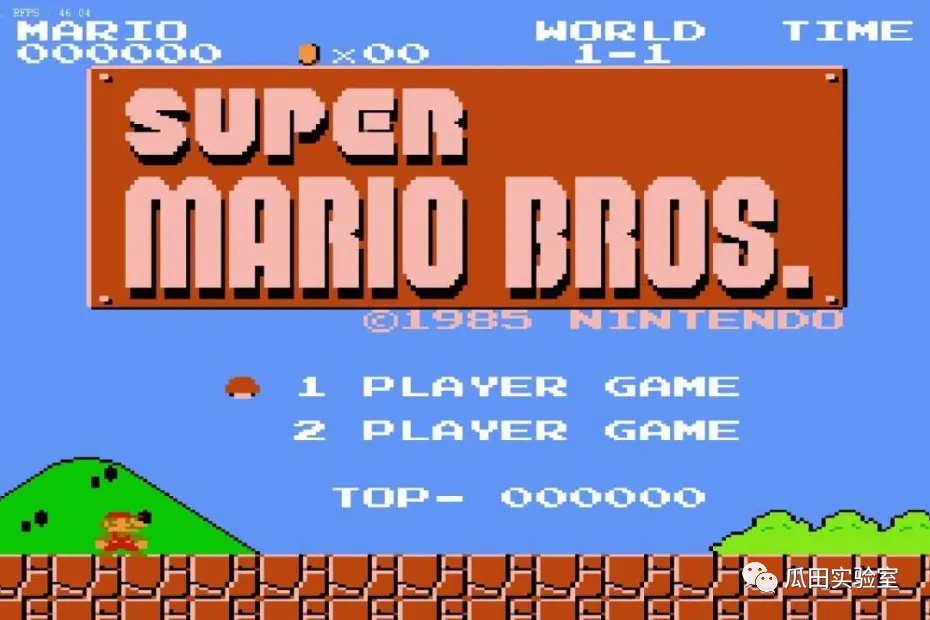

Atari’s sudden wealth showed many technology companies of the time a new direction in which technological capability could quickly turn into money, so the well-known Sega and Nintendo entered the market. In 1983, Nintendo launched its red and white machine (the FC game console, or Famicom), which went on to sweep the global game market like a tornado and gave us our most indelible childhood memory, “Super Mario Bros.” The red and white machine was not discontinued until 2003 and sold more than 60 million units in total. The Subor (Xiaobawang, “Little Tyrant”) learning machine familiar to many of us in China is an imitation of the red and white machine.

Here’s another piece of gossip: the first direction mentioned earlier was also something Jobs picked up at Atari. Jobs, who was a boss nearly all his life, had very little experience as an employee, and the best-known stint was at Atari. It is said that this is where he learned how capable the 6502 chip was, which led him to start Apple.

In the late 1980s, Sega and Nintendo were in fierce competition, and the processing power of 8-bit processors was no longer enough, so they moved to 16-bit processors! Under the pressure of end products and market demand, chip research and development made rapid progress. Whoever developed the next chip first would make money; after all, the path from technology to profit had already been opened up.

So it’s no exaggeration to say that in the 1980s the electronic game industry drove the development of the chip and computer industries, right? Later, Nintendo’s handheld Game Boy (which sold more than 100 million units) pushed forward hardware adaptation technology, and Sony’s PlayStation promoted CD and CD-ROM technology. These are all important contributions of the gaming industry to new technology. The gaming industry provides the largest civilian consumption scenario, and market demand is the lifeblood of new technology.

02 The Amazing NVIDIA

With the rise of the AI boom, NVIDIA has been mentioned frequently in the media. Readers who have built their own PCs, and influencers who got rich from mining, will be familiar with NVIDIA: it is the world’s largest graphics card manufacturer. High-end AAA games require graphics cards, and mining also requires graphics cards (what is actually needed is the GPU on the card, which has superior computing power for certain kinds of work). But what does this have to do with AI? Let’s take a look.

The electronic game industry has been developing for almost fifty years, and players’ demands on game graphics and video keep rising, so the computing power of the GPU on graphics cards has been iterating and evolving constantly. Later, some adjacent industries, such as video processing and crypto mining, discovered the GPU’s high computing power and gave the graphics card new uses. In the 2000s, AI entered the deep learning stage (see the first article in this series for details, where Hinton coined the term “deep learning” for a way of building neural networks), and scientists found that the way graphics cards compute, running many small calculations in parallel, was particularly well suited to training neural networks. The more information and data fed in, the better the result. It is said that OpenAI’s breakthrough came from an internal test that used tens of thousands of NVIDIA A100 GPUs, and then, with a tap of God’s golden finger, a new world began to reveal a mysterious corner of itself.
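To make the point about graphics cards and neural networks a little more concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions, not how NVIDIA’s GPUs or any AI framework actually work internally: the function names (`neuron_output`, `dense_layer_serial`, `dense_layer_parallel`) are hypothetical, and a thread pool stands in for the thousands of cores on a GPU. The idea it shows is that each neuron in a layer is an independent weighted sum, so all of them can be computed at the same time.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights, inputs, bias):
    """One neuron: weighted sum of the inputs plus a bias, then ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def dense_layer_serial(weight_rows, inputs, biases):
    """CPU-style: compute the neurons one after another."""
    return [neuron_output(w, inputs, b) for w, b in zip(weight_rows, biases)]


def dense_layer_parallel(weight_rows, inputs, biases, workers=4):
    """GPU-style idea: no neuron depends on another, so hand each one
    to a separate worker. Threads here only illustrate the independence;
    they are an assumption of this sketch and give no real speedup."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        jobs = zip(weight_rows, biases)
        return list(pool.map(lambda wb: neuron_output(wb[0], inputs, wb[1]), jobs))


if __name__ == "__main__":
    weight_rows = [[1.0, 2.0], [-1.0, 0.5], [0.0, 0.0]]  # three neurons, two inputs each
    inputs = [3.0, 4.0]
    biases = [0.5, -1.0, 2.0]
    print(dense_layer_serial(weight_rows, inputs, biases))
    print(dense_layer_parallel(weight_rows, inputs, biases))
```

Both functions return the same list; the only difference is the order in which the work could be done. A real neural network has millions of such neurons per layer, which is why hardware built to shade millions of pixels in parallel turned out to be a natural fit.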

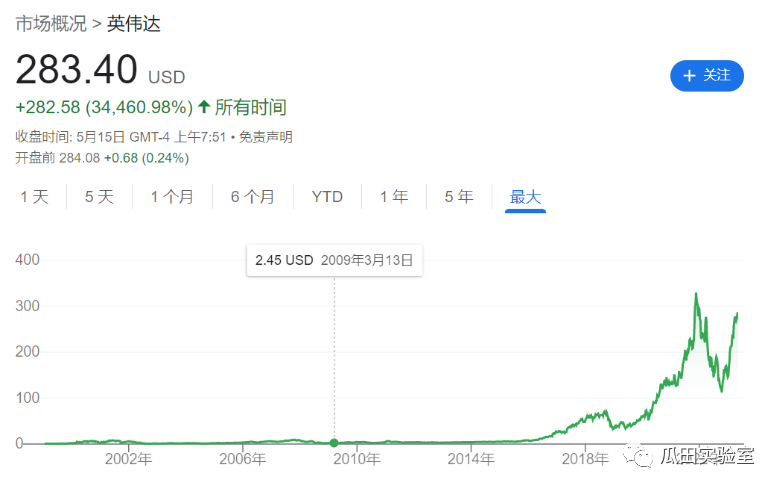

So graphics cards have become a necessary resource for AI companies on their road to growth. Just look at NVIDIA’s stock price over the past twenty years: it really took off around 2016. As we learned in the previous article, OpenAI was founded in 2015, and NVIDIA rode the AI wave to an impressive surge. With a current market value of around $680 billion, it far surpasses other chip companies such as Intel and AMD, making it one of the top tech giants, behind only Apple, Microsoft, and Google.

The mainstream GPUs currently providing computing power for large AI models are two NVIDIA models: the A100 and the H100. Some analysts even treat the number of A100 and H100 GPUs an AI company owns as an important indicator, a kind of “NVIDIA factor”. A high NVIDIA factor is like taking Viagra: it can help you get rich quickly… That is my own reading of the name “NVIDIA”, hahaha, just rambling.

Even Musk recently announced that Twitter has acquired about 10,000 NVIDIA GPUs. While everyone envies NVIDIA’s good fortune, we should not forget that as early as 2016, NVIDIA founder Jensen Huang (Huang Renxun) donated a DGX-1 AI supercomputer to OpenAI to demonstrate the unique advantages of NVIDIA’s GPUs in AI computing. That machine’s computing power could compress a year’s worth of OpenAI training time into a month. So NVIDIA has clearly been positioning itself in AI for a long time. A company with a market value of $680 billion did not get there by picking up money that fell from the sky. The strategic decisions and business negotiations behind the scenes must have been fascinating, and cannot be explained away as simple luck.

So how did NVIDIA come about? Why can it span several industries, including electronic games, industrial video, crypto mining, and AI computing? Let’s continue to gossip:

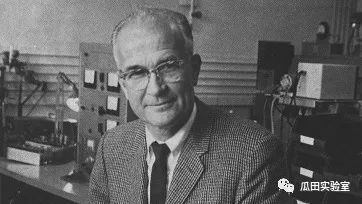

In fact, just like the Internet, integrated circuits were also born out of military demand. In the 1950s, the US Department of Defense wanted to add control units to missiles and other weapons so they could hit targets more accurately, so it funded a number of laboratories to conduct research. Among them was a group of young talents led by the famous MIT-educated scientist William Shockley (known as the father of the transistor and winner of the Nobel Prize in Physics), whose semiconductor work laid the groundwork for the chip.

Shockley had already made his name, and after achieving some results, his biggest hobby was going out to do AMAs and bask in flowers and applause. To the outside world he would say “the project team is doing things”, while actually just spending the money of his investor, the US Department of Defense. But the young talents under him really wanted to build things and realize their dream of getting rich. They kept asking to step up research and development, cut costs, and scale up production, so as to break into the huge civilian market and dig and dig and make a lot of money. Shockley kept suppressing them and would not let them do it.

Later, eight young people in Shockley’s laboratory resigned and established a new company called Fairchild. They became known as the “Traitorous Eight” (the Fairchild Eight). The most famous of them was Gordon Moore, who formulated Moore’s Law.

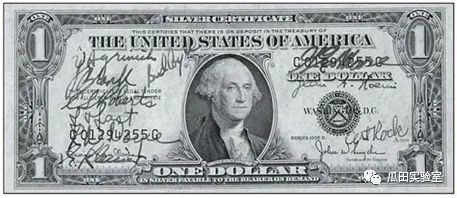

The pact among the eight was also particularly dreamy. They signed their names on a dollar bill, and the message was clear: Old Shockley, stop promising us pie in the sky! What we want is green cash!

In fact, in the history of technological development, this kind of group breakaway often brings revolutionary disruption: from the Fairchild Traitorous Eight, who led the chip revolution, to the PayPal Mafia of the early 2000s (whose members founded companies such as Tesla, LinkedIn, and YouTube), who drove the Internet revolution, and on to the group that founded OpenAI in 2015, mentioned in the previous article.

Back to Fairchild, this company is epoch-making:

- First, it defined the semiconductor industry technically: the entire research approach turned to silicon as the material, which allows integrated circuits to be produced in batches.

- Second, it defined the business model of “venture capital + incubated company”. The Fairchild Eight first found Arthur Rock, the father of the venture capital industry, to act as their financial advisor (FA), and he brought in the main backer, Sherman Fairchild. The company took its name from this “sugar daddy” backer, and that kind of flattery works. Sherman Fairchild was very prestigious and was the largest individual shareholder of IBM, and Fairchild’s first order after its founding came from IBM. From then on, this venture capital model caught on, and it is still popular today: investors do not just give money, they also get involved in company management and operations and use their own resources to help entrepreneurs succeed. In short, a deeper bond is needed (which is also what W Labs is doing, haha).

- Third, it gave rise to Silicon Valley. Fairchild gradually became the Whampoa Military Academy of the semiconductor industry. After several years of working with their backer, the Fairchild Eight could not agree with him on later terms, so they struck out on their own again, taking another batch of colleagues with them. They set up new companies around the Fairchild offices, and this area became today’s Silicon Valley. Among these new companies were Intel and AMD. People who left Fairchild went on to found more than 100 semiconductor companies, making Fairchild a legendary incubator.

Going back to NVIDIA: the person who founded AMD came out of Fairchild, while NVIDIA’s boss Jensen Huang was an engineer at AMD before starting his own business, NVIDIA. These companies and people are connected in many ways. It is said that Jensen Huang and AMD’s current chief, Lisa Su (Su Zifeng), are even distant relatives.

The idea behind NVIDIA’s founding was: you, Intel and AMD, go compete on CPUs; I’ll specialize in graphics. As computer games become more and more sophisticated, the computing power of a traditional CPU is increasingly insufficient. So I’ll split off the graphics processing part into a new chip, define it as a GPU, and put it on a graphics card. This was still a blue ocean at the time.

In the early days, the gaming industry contributed 100% of NVIDIA’s revenue. After making money, Jensen Huang stepped up R&D and extended GPUs to other areas, including the industrial sector. In recent years NVIDIA has also invested in AI, always keeping its products competitive. This approach is very similar to Huawei’s: R&D comes first, and the market follows closely. Graphics processing keeps finding new uses. Beyond AI, even drones on the battlefield use GPUs for image recognition and processing.

So, taking NVIDIA as an example: as the most resource-hungry kind of software, games have in turn driven the progress of hardware performance. At the same time, the gaming industry is the best link between the business side (B-end) and the consumer side (C-end), because users are willing to pay for the pleasure of playing games.

So once again: games have been the behind-the-scenes driver of technological progress over the past half century.

To be continued. This series is collectively created by the W Labs “AI Chain Game Research Team”. Thanks to the team members Guage, Jiaren, Baobao, Brian, Xiaofei, and Huage for their hard work and dedication! This article was written by Guage.
