a16z In-Depth Analysis: What New Gameplay Will AI Create?

Source: a16z

Compiled by: Nick

Early discussions about the revolutionary impact of generative AI in games primarily focused on how AI tools could improve the efficiency of game creators, making game production faster and on a larger scale than before. In the long run, we believe that AI can not only change the way games are created, but also the nature of the games themselves.

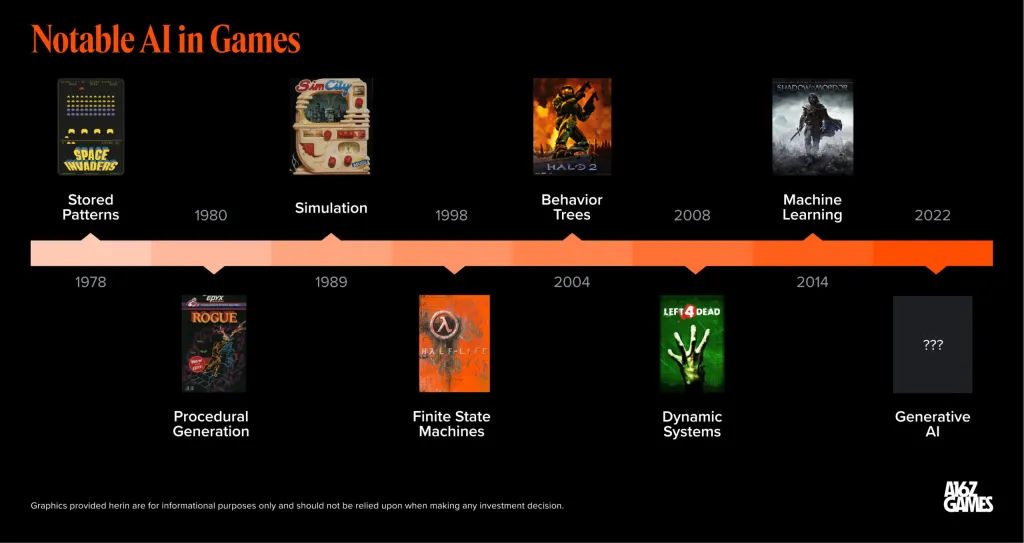

AI has always been instrumental in generating new forms of games, from the procedurally generated dungeons in “Rogue” (1980) to the finite state machines in “Half-Life” (1998) and the AI game director in “Left 4 Dead” (2008). Recently, advances in deep learning have enabled computers to generate new content from user prompts and large datasets, changing the rules of the game once again.

Although it is still early, we already see several interesting AI-driven areas in gaming, including generative agents, personalization, AI storytelling, dynamic worlds, and AI co-pilots. If successful, these systems could be combined to create a new category of AI games that retain a loyal player base.

Generative Agents

The simulation game genre was pioneered by Maxis’ SimCity in 1989, allowing players to build and manage virtual cities. Today, the most popular simulation game is “The Sims,” with over 70 million players worldwide managing virtual people called “Sims” and letting them live their daily lives. Designer Will Wright once described “The Sims” as an “interactive dollhouse.”

Generative AI, powered by large language models (LLMs), can drive more realistic behaviors for agents, significantly advancing the development of simulation games.

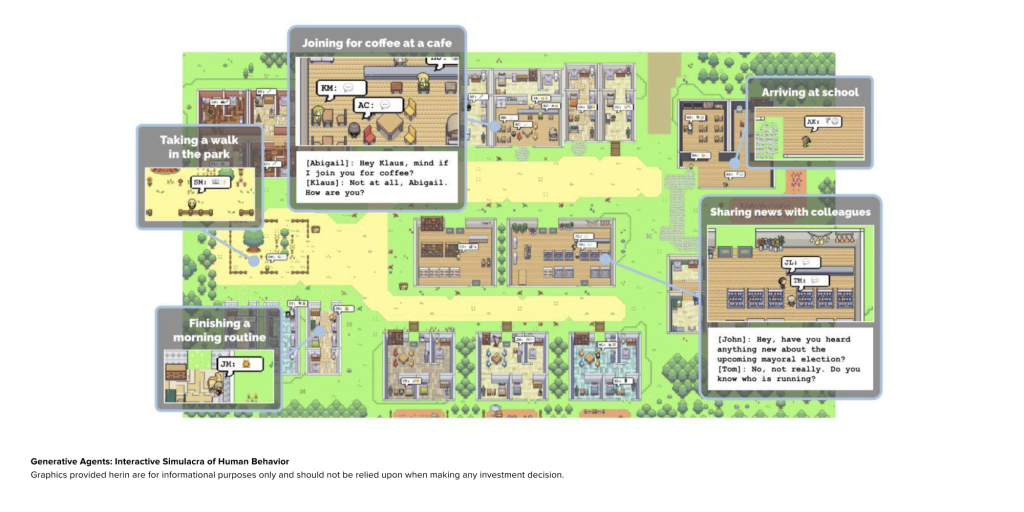

Earlier this year, a research team from Stanford University and Google published a paper on applying LLMs to agents in games. Led by doctoral student Joon Sung Park, the team placed 25 Sims-like agents in a pixel-art sandbox world. The agents were driven by ChatGPT and an architecture that extends the LLM to store a complete record of each agent’s experiences in natural language, synthesize those memories into higher-level reflections, and retrieve them dynamically to plan behavior.
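The store, reflect, and retrieve loop described above can be illustrated with a minimal sketch. It is not the research team's implementation. The class names, the decay constant, the hand-assigned importance values, and the ranking rule (recency plus importance plus relevance) are all assumptions made for illustration, and the word-overlap relevance and the joined-text "reflection" are simple stand-ins for what an LLM or embedding model would do.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str
    created_at: float  # simulation time in hours
    importance: float  # 0.0 (mundane) to 1.0 (pivotal); hand-assigned in this sketch


@dataclass
class MemoryStream:
    memories: list = field(default_factory=list)
    decay: float = 0.99  # hypothetical per-hour recency decay

    def record(self, text, now, importance):
        """Append one natural-language observation to the agent's memory."""
        self.memories.append(Memory(text, now, importance))

    def _relevance(self, memory, query):
        # Stand-in for embedding similarity: Jaccard overlap of lowercase words.
        a = set(memory.text.lower().split())
        b = set(query.lower().split())
        return len(a & b) / len(a | b) if a | b else 0.0

    def retrieve(self, query, now, k=2):
        """Return the k memories that score highest for this query."""
        def score(m):
            recency = self.decay ** (now - m.created_at)
            return recency + m.importance + self._relevance(m, query)
        return sorted(self.memories, key=score, reverse=True)[:k]

    def reflect(self, now, k=3):
        """Stand-in for an LLM summary: join the k most important memories."""
        top = sorted(self.memories, key=lambda m: m.importance, reverse=True)[:k]
        summary = "Reflection: " + "; ".join(m.text for m in top)
        self.record(summary, now, importance=1.0)
        return summary


stream = MemoryStream()
stream.record("ate breakfast at home", now=0, importance=0.1)
stream.record("Isabella is hosting a Valentine's Day party", now=1, importance=0.8)
stream.record("watered the plants", now=2, importance=0.1)
relevant = stream.retrieve("who is hosting a party", now=3, k=1)
```

In a real system, the retrieved memories would be placed into the LLM prompt that plans the agent's next action, and the reflection step would ask the model to draw higher-level conclusions rather than concatenate text.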

These results offer a fascinating glimpse into the potential future of simulation games. Starting from a user-specified prompt, such as an agent wanting to host a Valentine’s Day party, the agents independently distribute party invitations, form new friendships, invite each other on dates, and coordinate to attend the party on time two days later.

This behavior is possible because LLMs are trained on data from the social web, so the models capture the basic elements of human conversation and behavior across a range of social settings. In interactive digital environments like simulation games, these responses can be triggered to create lifelike behavior.

From the player’s perspective, the end result is a more immersive gaming experience. Much of the joy of playing “The Sims” or the colony simulation game “RimWorld” comes from the unexpected things that happen. With agent behavior learned from the social web, simulation games may come to reflect not only the imagination of game designers but also the unpredictability of human society. Watching these simulations could be endlessly entertaining, like a next-generation “The Truman Show,” in a way that pre-made TV or movies cannot match today.

Tapping into our desire for imaginative, dollhouse-style play, the agents themselves can also be personalized. Players can design an ideal agent based on themselves or on fictional characters. “Ready Player Me” lets users generate their own 3D avatars from selfies and import them into over 9,000 games and applications. AI character platforms like Character.ai, Inworld, and Convai can create custom NPCs with their own backstories, personalities, and behavior controls.

Natural language capabilities also expand the ways we can interact with agents. Developers can now use ElevenLabs’ text-to-speech models to generate realistic voices for their agents. Convai recently partnered with NVIDIA on a widely shared demo in which players hold natural voice conversations with an AI ramen chef NPC, with dialogue and matching facial expressions generated in real time. The AI companion app Replika already lets users interact with their companions through voice, video, and AR/VR. In the future, one can imagine a simulation game in which players stay connected with their agents through phone or video calls while on the go, then dive into a more immersive game when they return to their computers.

However, many challenges remain before we see a fully generative version of “The Sims.” LLM training data carries inherent biases that may surface in agent behavior. Running large-scale simulations around the clock for a live-service game may not be economically feasible: running 25 agents for 2 days cost the research team thousands of dollars in compute. Efforts to offload model workloads onto devices are promising but still early. We may also need to establish new norms around parasocial relationships with agents.

But one thing is clear: there is huge demand for generative agents. In our recent survey, 61% of game studios said they plan to experiment with AI NPCs. We believe that as agents enter our everyday social sphere, AI companions will soon become commonplace. Simulation games provide a digital sandbox in which we can interact with our favorite AI companions in interesting and unpredictable ways. In the long run, the nature of simulation games is likely to change, with agents becoming not just toys but potential friends, family members, colleagues, advisors, and even lovers.

Personalization

The ultimate goal of personalized gaming is to provide a unique experience for each player. Take character creation as an example: from the original “Dungeons & Dragons” tabletop game to miHoYo’s “Genshin Impact,” it is a cornerstone of almost all role-playing games (RPGs). Most RPGs let players customize appearance, gender, class, and so on from preset options. So how can we go beyond presets and generate a unique character for each player and playthrough? A personalized character builder that combines LLMs with text-to-image diffusion models can make this possible.

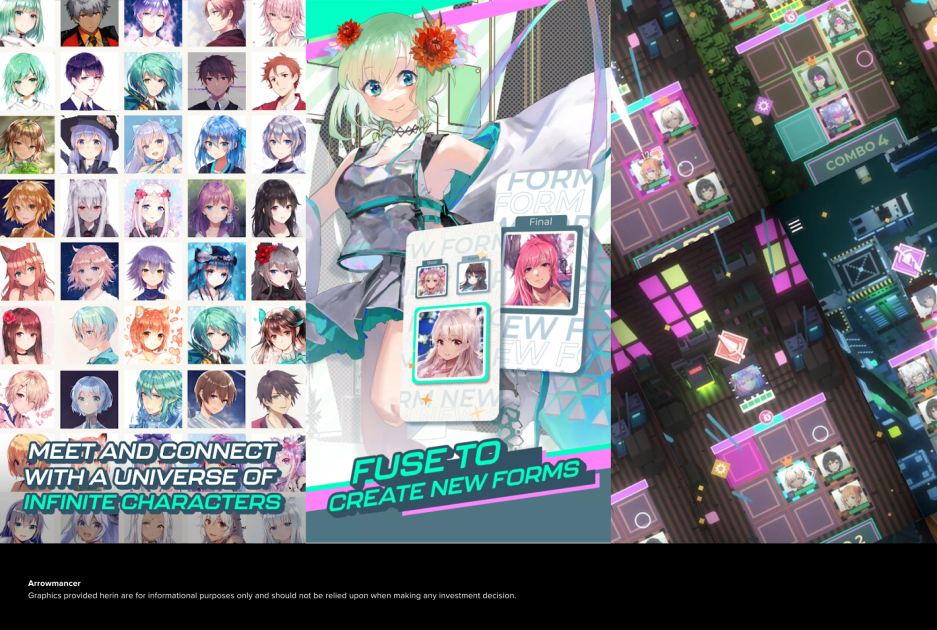

Spellbrush’s “Arrowmancer” is an RPG powered by the company’s custom GAN-based anime model. In Arrowmancer, players can generate a set of unique anime characters, complete with art and combat abilities. This personalization is also part of the game’s monetization system: players import AI-created characters into custom gacha banners, where they can obtain duplicate characters to strengthen their teams.

Personalization can also extend to in-game items. For example, AI can help generate unique weapons and armor that can only be obtained by completing specific quests. Azra Games has built an AI-driven asset pipeline that can quickly concept and generate large libraries of in-game items and world objects, paving the way for more varied playthroughs. The renowned AAA developer Activision Blizzard has built Blizzard Diffusion, a riff on the image generator Stable Diffusion, to help generate concept art for characters and outfits.

Text and dialogue in the game can also be personalized. Signs in the world can reflect the titles or status a player has earned. NPCs can be set up as LLM-powered agents with unique personalities that adapt to the player’s behavior; for example, dialogue can change based on the player’s past interactions with an agent. We have already seen this concept implemented successfully in a AAA game: Monolith’s “Shadow of Mordor” has a Nemesis System that dynamically creates interesting backstories for villains based on the player’s actions. These personalized elements make each playthrough unique.

Game publisher Ubisoft recently unveiled Ghostwriter, a dialogue tool powered by LLMs. The publisher’s writers use the tool today to automatically generate dialogue that helps simulate a living world around the player.

From the player’s perspective, AI enhances the immersion and replayability of games. Role-playing mods for immersive open-world games like “The Elder Scrolls V: Skyrim” and “Grand Theft Auto V” have remained popular, indicating latent demand for personalized stories. Even today, player counts on “Grand Theft Auto V” role-playing servers are regularly higher than those of the original game. We believe that personalization systems will become an indispensable live-operations tool for attracting and retaining players in all games.

AI Narrative

Of course, a good game is not just about characters and dialogue. Another interesting scenario is using generative AI to tell better and more personalized stories.

“Dungeons & Dragons” is the pioneer of personalized storytelling in games. In this game, a person called the “dungeon master” prepares and narrates a story for a group of friends, who each play a character in it. The result is part improvised theater and part RPG, which means that each session is unique. As a signal of the demand for personalized storytelling, “Dungeons & Dragons” is more popular than ever, with sales of digital and analog products reaching new highs.

Today, many companies are applying LLMs to the “Dungeons & Dragons” storytelling model. The opportunity lies in letting players spend as much time as they like in their favorite player-created or IP universes, guided by an endlessly patient AI storyteller. AI Dungeon, launched by Latitude in 2019, is an open-ended, text-based adventure game in which AI plays the dungeon master. Users have also fine-tuned a version of OpenAI’s GPT-4 to play “Dungeons & Dragons” and achieved remarkable results. Text adventure games are among the most popular modes in Character.AI’s app.

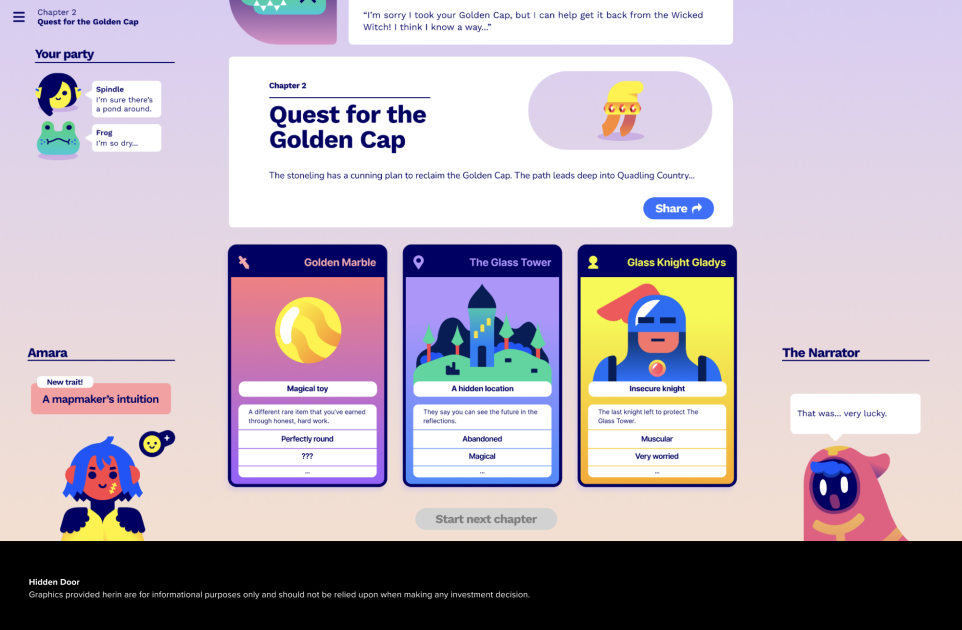

Hidden Door goes a step further by training machine learning models on specific source material, such as “The Wizard of Oz,” so that players can adventure in established IP universes. In this way, Hidden Door works with IP owners to enable a new, interactive form of brand extension. As soon as fans finish a movie or a book, they can continue their adventures in their favorite worlds through custom campaigns similar to “Dungeons & Dragons.” Demand for fan experiences is booming. In May alone, Archiveofourown.org and Wattpad, the two largest online fan fiction libraries, received over 354 million and 146 million visits respectively.

NovelAI has developed its own LLM Clio, using it to tell stories in sandbox mode and help human writers overcome writing obstacles. For the most discerning writers, NovelAI allows users to fine-tune Clio based on their own works or even the works of famous authors like H.P. Lovecraft or Jules Verne.

It is worth noting that many obstacles remain before AI storytelling is fully production-ready. Today, building an excellent AI storyteller requires a large number of manually set rules to produce well-defined storylines. Memory and coherence are crucial: the storyteller needs to remember what happened earlier in the story and stay consistent in both facts and style. Explainability remains a challenge for many closed-source systems that operate as black boxes, while game designers need to understand how a system behaves in order to improve the gaming experience.

While these obstacles are being worked through, AI has already become a “co-pilot” for human storytellers. Today, millions of writers use ChatGPT for story inspiration. Entertainment studio Scriptic has combined DALL-E, ChatGPT, Midjourney, ElevenLabs, and Runway with a human editing team to create interactive choose-your-own-adventure shows, which are currently streaming on Netflix.

Dynamic World Building

While text-based storytelling is popular, many players also want to see their stories come to life in a visual way. One of the biggest opportunities for generative AI in gaming may be in creating immersive living worlds that players can spend countless hours in.

The ultimate vision is to generate levels and content dynamically, in real time, as players progress through the game. The sci-fi novel “Ender’s Game” features a canonical example of this kind of game, called the “Mind Game.” The Mind Game is an AI-guided game that adapts in real time to each student’s interests, with the game world constantly changing based on the student’s actions and any other psychological information inferred by the AI.

Today, the closest thing to a “Mind Game” may be Valve’s Left 4 Dead series, which uses an AI director to dynamically adjust the pace and difficulty of the game. Instead of using fixed spawn points for enemies (zombies), the AI director places them in different locations based on each player’s status, skills, and position, creating a unique experience for each playthrough. The director also sets the game’s atmosphere through dynamic visual effects and music. Valve founder Gabe Newell refers to this system as “procedural narrative.” EA’s critically acclaimed remake of Dead Space uses a variant of an AI director system to maximize the horror effect.
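To make the idea of a director that reads player status, skill, and position more concrete, here is a minimal sketch. It does not reflect Valve's actual system. The `Player` fields, the intensity formula, and the minimum-distance rule are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
import math
import random


@dataclass
class Player:
    name: str
    health: int      # 0-100
    skill: float     # 0.0 (novice) to 1.0 (expert); hypothetical rating
    position: tuple  # (x, y) on a 2D map


def intensity_budget(players):
    """More enemies for healthy, skilled teams; fewer for struggling ones."""
    avg_health = sum(p.health for p in players) / len(players)
    avg_skill = sum(p.skill for p in players) / len(players)
    return max(1, round(10 * (avg_health / 100) * (0.5 + avg_skill)))


def choose_spawns(players, candidates, rng, min_dist=5.0):
    """Pick spawn points that are at least min_dist away from every player."""
    def far_enough(point):
        return all(math.dist(point, p.position) >= min_dist for p in players)

    valid = [c for c in candidates if far_enough(c)]
    count = min(intensity_budget(players), len(valid))
    return rng.sample(valid, count)


team = [Player("Zoey", 90, 0.8, (0, 0)), Player("Louis", 40, 0.3, (2, 0))]
candidate_points = [(x, y) for x in range(-10, 11, 2) for y in range(-10, 11, 2)]
spawns = choose_spawns(team, candidate_points, random.Random(42))
```

Because the budget and the valid spawn points are recomputed from the current player state, two runs through the same map can produce different encounters, which is the property the paragraph above describes.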

While this may seem like a plot from a sci-fi novel today, one day, with improvements in generative models and access to enough computing power and data, it may be possible to create an AI director that can not only create scares but also shape the world itself.

It’s worth noting that the concept of machine-generated levels in games is not new. From Supergiant’s Hades to Blizzard’s Diablo to Mojang’s Minecraft, many of today’s most popular games use procedural generation, in which levels are created randomly using equations and rule sets written by human designers, so that each playthrough is unique. A whole ecosystem of software libraries has grown up to assist with procedural generation. Unity’s SpeedTree helps developers generate virtual foliage, which you may have seen in the forests of Pandora in Avatar or the landscapes of Elden Ring.
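As a small illustration of "randomness plus designer-written rules," the sketch below carves a cave layout with a random walk. It is not taken from any of the games named above. The function name, the tile symbols, and the rules (a solid one-tile border and a target number of floor tiles) are assumptions chosen to keep the example short.

```python
import random


def generate_level(width, height, floor_target, seed):
    """Carve a connected cave with a random walk ("drunkard's walk")."""
    if width < 3 or height < 3:
        raise ValueError("level must be at least 3x3")
    if not 1 <= floor_target <= (width - 2) * (height - 2):
        raise ValueError("floor_target does not fit inside the border")

    rng = random.Random(seed)  # same seed -> same level
    grid = [["#"] * width for _ in range(height)]
    x, y = width // 2, height // 2
    grid[y][x] = "."
    carved = 1

    while carved < floor_target:
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        nx, ny = x + dx, y + dy
        # Designer rule: never break through the one-tile solid border.
        if 1 <= nx < width - 1 and 1 <= ny < height - 1:
            x, y = nx, ny
            if grid[y][x] == "#":
                grid[y][x] = "."
                carved += 1

    return ["".join(row) for row in grid]


level = generate_level(width=24, height=10, floor_target=80, seed=7)
print("\n".join(level))
```

Changing the seed yields a different layout, while the rules guarantee that every layout has the requested amount of walkable floor and an intact outer wall.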

A game could combine a procedural asset generator with an LLM in its user interface. The game Townscaper uses a procedural system that quickly turns just two player inputs (the position and color of blocks) into beautiful townscapes. Imagine adding an LLM to Townscaper’s user interface, so that natural language prompts help players iterate toward more intricate and stunning creations.

Many developers are also excited about using machine learning to enhance procedurally generated content. One day, designers may be able to use models trained on existing levels of a similar style to iteratively generate viable first drafts of levels. Earlier this year, Shyam Sudhakaran led a team at the University of Copenhagen to create MarioGPT, a GPT-2-based tool that can generate Super Mario levels using models trained on the original levels of Super Mario 1 and Super Mario 2. Academic research in this field has been ongoing for some time, including a 2018 project that used generative adversarial networks (GANs) to design levels for the first-person shooter “DOOM.”

Combining generative models with procedural systems can greatly accelerate asset creation. Artists have started using text-to-image diffusion models for AI-assisted concept art and storyboarding. Jussi Kemppainen, a visual effects supervisor, described in a blog post how he built the world and characters for a 2.5D adventure game with the help of Midjourney and Adobe Firefly.

3D generation is also an active area of research. Luma uses Neural Radiance Fields (NeRFs) to let consumers create realistic 3D assets from 2D images captured on an iPhone. Kaedim uses a combination of AI and manual quality control to create production-ready 3D meshes, and over 225 game developers currently use it. CSM recently released a proprietary model that can generate 3D models from videos and images.

In the long run, the most important application is real-time world building with AI models. In our view, entire games may eventually no longer be rendered in the traditional sense but generated at runtime by neural networks. NVIDIA’s DLSS technology can already generate new high-resolution game frames in real time on consumer-grade GPUs. Perhaps one day you will be able to click an “interactive” button in a Netflix movie and step into a world in which every scene is generated in real time and tailored to the player. The games of the future may be indistinguishable from movies.

It is worth noting that a dynamically generated world is not enough on its own to make a great game, as the reviews of “No Man’s Sky” showed. The potential of a dynamic world lies in combining it with other game systems, such as personalization and generative agents, to create new forms of storytelling. After all, the most striking part of the “Mind Game” is how it shapes Ender, not the world itself.

AI as a “Co-pilot”

We discussed generative agents in simulation games above, but there is another emerging use case: AI acting as a co-pilot in games, coaching us as we play and, in some cases, even playing alongside us.

For players new to complex games, an AI co-pilot can be invaluable. For example, UGC sandbox games like Minecraft, Roblox, or Rec Room offer rich environments where players can build almost anything they can imagine, as long as they have the right materials and skills. However, the learning curve is steep, and most players struggle to figure out how to get started.

AI co-pilots can turn any player into a master builder in UGC games by providing step-by-step guidance based on text prompts or images and guiding players to overcome mistakes. The concept of “master builders” in the LEGO world is a good reference point, where these rare individuals have the talent to see blueprints for any creation they can imagine when needed.

Microsoft has already started developing AI assistance systems for “Minecraft,” using DALL-E and GitHub Copilot to let players inject assets and logic into “Minecraft” sessions through natural language prompts. Roblox is actively integrating generative AI tools into its platform with the mission of enabling “every user to become a creator.” From coding with GitHub Copilot to writing with ChatGPT, the effectiveness of AI co-pilots in collaborative creation has been validated in many fields.

Beyond collaborative creation, LLMs trained on human gameplay data should be able to understand behavioral patterns across a variety of games. With proper integration, agents could fill in as teammates when a player’s friends are unavailable, or play the opposing side in head-to-head games like “FIFA” and “NBA 2K.” Such agents would be available to play at any time and would stay friendly in victory or defeat, never blaming the player. Tuned on our personal gaming history, these agents could greatly outperform existing bots, playing the game the way we do or in ways that complement our play.

Similar projects have already succeeded in constrained environments. The popular racing game “Forza” developed a “Drivatar” system that uses machine learning to create an AI driver for each human player, mimicking that player’s driving behavior. Drivatars are uploaded to the cloud and can be called on to race against other players when their human counterparts are offline, even earning victory points. Google DeepMind’s AlphaStar was trained on a dataset of “up to 200 years” of StarCraft II gameplay and produced an agent that can compete against and beat human esports professionals.

AI co-pilots, as a game mechanic, can even create entirely new game modes. Imagine “Fortnite,” but every player has a “master builder” wand that can instantly build sniper towers or fire boulders based on prompts. In this game mode, victory may depend more on skill with the wand (prompting) than on aiming with firearms.

The perfect in-game AI “companion” has long been a memorable part of many popular game series: think of Cortana in the Halo universe, Ellie in The Last of Us, or Elizabeth in BioShock Infinite. In competitive games, beating up on computer-controlled bots never goes out of style either, from blasting aliens in Space Invaders to stomping computer opponents in StarCraft, which eventually evolved into its own game mode, “Co-op Commanders.”

As games evolve into the next generation of social networks, we expect AI co-pilots to play an increasingly important social role. It is well established that social features increase the stickiness of games: players who have friends in a game can show retention rates up to 5 times higher. In our view, every game in the future will have an AI co-pilot.

Conclusion

We are still in the early stages of applying AI to games, and many legal, ethical, and technological barriers need to be addressed before these ideas can be put into practice. Currently, the ownership and copyright protection of games that use AI-generated assets is largely unclear unless developers can prove ownership of all the data used to train the models. This makes it difficult for owners of existing intellectual property to use third-party AI models in their production pipelines.

Compensating the original authors, artists, and creators behind the training data is also a major issue. The challenge is that most AI models are trained on publicly available internet data, much of which is copyrighted. In some cases, users can even use generative models to reproduce an individual artist’s style. It is still early, and the question of how to compensate content creators has yet to be properly resolved.

Currently, the cost of most generative models is too high to run on a global scale in the cloud 24/7, which is what modern game operations require. To reduce costs, application developers may need to find ways to offload model workloads to end-user devices, but this will take time.

However, it is already clear that game developers and players alike have great interest in generative AI for games. There is a lot of hype, but we also see many talented teams working tirelessly to create innovative products in this field, which is very exciting for us.

The opportunity lies not only in making existing games faster and cheaper, but also in creating a completely new type of AI game, which was previously impossible. We are not yet sure what form these games will take, but we know that the history of the game industry has always been a history of technology driving new forms of gameplay. With generative agents, personalization, AI storytelling, dynamic world building, and AI co-pilots, we may soon see the first “endless” game created by AI developers.
