What is the security foundation of the post-PoS era? A quick look at Proof of Validator, a newly proposed theory in the Ethereum community

Today, a new concept called Proof of Validator quietly emerged on the Ethereum research forum.

This protocol mechanism allows network nodes to prove that they are Ethereum validators without revealing their specific identities.

What does this have to do with us?

In general, the market tends to focus on the surface narratives that grow out of technical innovations on Ethereum and rarely digs into the technology itself ahead of time. For example, when it comes to Ethereum's Shanghai upgrade, the Merge, the transition from PoW to PoS, and scalability, the market mostly remembers the narratives of London, LSD-Fi, and re-staking.

But do not forget that performance and security are of paramount importance to Ethereum. The former determines the upper limit, while the latter determines the bottom line.

Clearly, Ethereum has been actively pursuing various scaling solutions to improve performance. On the path to scalability, however, it must not only strengthen itself from within but also guard against external attacks.

For example, if validator nodes are attacked and data becomes unavailable, then all the narratives and scaling plans built on Ethereum's staking logic could be affected. However, this kind of impact and risk stays behind the scenes, making it hard for end users and speculators to perceive, or sometimes even to care about.

The Proof of Validator mentioned in this article may be a key security puzzle on Ethereum’s path to scalability.

Since scaling is imperative, reducing the risks it may introduce is an unavoidable security issue, and one that concerns everyone in the industry.

Therefore, it is worth understanding the full picture of the newly proposed Proof of Validator. However, because the original forum post is fragmented, highly technical, and touches on many scaling plans and concepts, DeepChain Research Institute has consolidated the original post and distilled the information needed to explain the background, necessity, and potential impact of Proof of Validator.

Data Availability Sampling: The Breakthrough in Scalability

Before formally introducing Proof of Validator, it is necessary to understand the current logic of Ethereum’s scalability and the risks it may entail.

The Ethereum community is actively advancing multiple scalability plans. Among them, Data Availability Sampling (DAS) is considered the most critical technology.

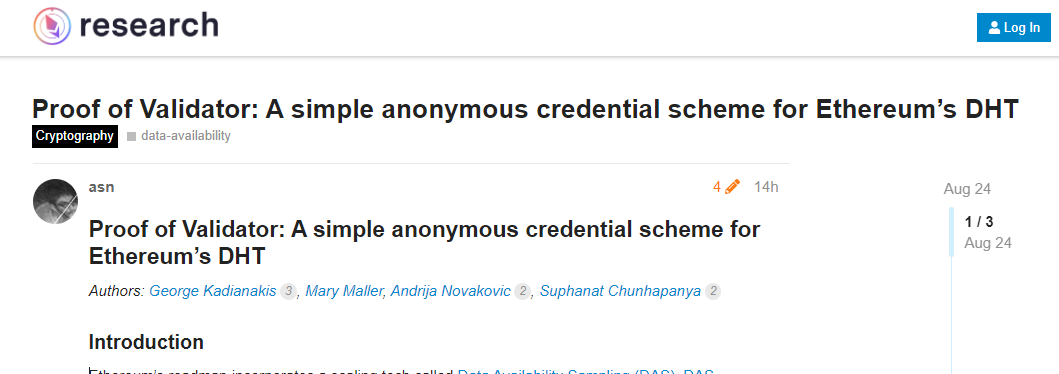

The principle is to divide the complete block data into many "samples." Each network node only needs to fetch a few of these samples to become confident that the data for the entire block is available, without downloading all of it.

This greatly reduces the storage and computational burden on each node. An everyday analogy is a survey: by polling a sample of individuals, we can infer the situation of the entire population.

Specifically, the implementation of DAS can be briefly described as follows:

- Block producers divide block data into multiple samples.

- Each network node fetches only a small number of samples rather than the complete block data.

- By randomly choosing which samples to fetch, network nodes can check whether the complete block data is available.

Through this sampling, even though each node processes only a small amount of data, together the nodes can verify that all of the block data is available. This allows block sizes to grow substantially, enabling rapid scaling.
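The sampling argument can be made concrete with a little probability. The sketch below assumes each node draws its samples independently and uniformly at random, with replacement; the function names and the 256-sample scenario are hypothetical. It also assumes, as background not covered in this article, that the block data is extended with a simple one-dimensional 2x erasure code, so any half of the samples is enough to rebuild the block. An attacker then has to withhold more than half of the samples to make the data unrecoverable, and a node that draws k samples misses this with probability at most 0.5^k.

```python
import random

def miss_probability(withheld_fraction: float, num_samples: int) -> float:
    """Probability that all `num_samples` uniform random queries (with replacement)
    land on available samples, so the node fails to notice the withholding."""
    return (1.0 - withheld_fraction) ** num_samples

def sample_block(available: set, total_samples: int, num_samples: int, rng: random.Random) -> bool:
    """Query random sample indices; accept the block only if every query is answered."""
    picks = [rng.randrange(total_samples) for _ in range(num_samples)]
    return all(index in available for index in picks)

# Hypothetical scenario: 256 samples, and the attacker withholds the upper half.
rng = random.Random(7)
available = set(range(128))
accepted = sum(sample_block(available, 256, 30, rng) for _ in range(10_000))
print(accepted, miss_probability(0.5, 30))
```

With 30 samples per node, the chance that a single node is fooled is 0.5^30, about 9.3 × 10^-10, which is why a handful of samples per node is enough in this simplified model.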

However, this sampling scheme has a key problem: where are the massive samples stored? This requires a decentralized network to support it.

Distributed Hash Table (DHT): The Home of Samples

This gives Distributed Hash Table (DHT) the opportunity to shine.

DHT can be seen as a huge distributed database that maps data to an address space using hash functions, and different nodes are responsible for storing and accessing data in different address ranges. It can be used to quickly search for and store samples among a massive number of nodes.

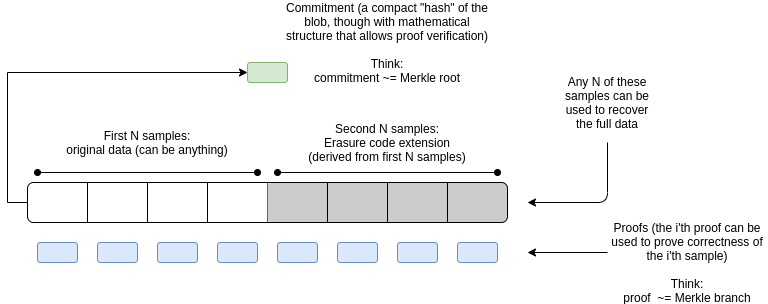

Specifically, after dividing the block data into multiple samples, DAS needs to distribute these samples to different nodes in the network for storage. DHT can provide a decentralized method for storing and retrieving these samples, and the basic idea is as follows:

- Use a consistent hash function to map samples to a huge address space.

- Each node in the network is responsible for storing and providing data samples within a specific address range.

- When a specific sample is needed, the corresponding address can be found through hashing, and the node responsible for that address range in the network can be located to obtain the sample.

For example, suppose each sample is hashed to an address according to a fixed rule, with Node A responsible for addresses 0-1000 and Node B responsible for addresses 1001-2000.

A sample that hashes to address 599 is then stored on Node A. When the sample is needed later, hashing its identifier in the same way yields address 599 again, and the network locates the node responsible for that address, Node A, to retrieve the sample.
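The Node A / Node B example can be sketched in a few lines. This is a toy model under stated assumptions: SHA-256 reduced modulo a hypothetical 0-2000 address space stands in for the article's "consistent hash function," and the range table, storage dictionaries and function names are all illustrative. Production DHTs such as Kademlia instead give each node an ID in the hash space and route by XOR distance, but the core idea is the same: everyone applies the same hash rule, so storing and looking up a sample both lead to the same node.

```python
import hashlib
from bisect import bisect_left

# Hypothetical address space 0..2000, matching the Node A / Node B example in the text.
ADDRESS_SPACE = 2001

# (highest address owned, node name), sorted by address:
# Node A owns 0-1000, Node B owns 1001-2000.
NODE_RANGES = [(1000, "Node A"), (2000, "Node B")]
STORAGE = {name: {} for _, name in NODE_RANGES}

def sample_address(sample_id: str) -> int:
    """Hash a sample identifier into the address space (the same rule on every node)."""
    digest = hashlib.sha256(sample_id.encode()).digest()
    return int.from_bytes(digest, "big") % ADDRESS_SPACE

def responsible_node(address: int) -> str:
    """Find the node whose address range contains `address`."""
    bounds = [upper for upper, _ in NODE_RANGES]
    return NODE_RANGES[bisect_left(bounds, address)][1]

def put(sample_id: str, data: bytes) -> None:
    """Store a sample on the node responsible for its hashed address."""
    STORAGE[responsible_node(sample_address(sample_id))][sample_id] = data

def get(sample_id: str) -> bytes:
    """Re-hash with the same rule to find the same node, then read the sample."""
    return STORAGE[responsible_node(sample_address(sample_id))][sample_id]

print(responsible_node(599))  # Node A
```

Because `put` and `get` share `sample_address`, a retriever never needs to know in advance where a sample was stored; it only needs the sample's identifier.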

This approach removes the limitations of centralized storage. Compared with centralized storage and retrieval, a DHT improves fault tolerance, avoids single points of failure, and scales as the network grows, which is exactly the network infrastructure that DAS sample storage requires. A DHT can also help resist attacks such as the "sample hiding" attack that DAS must guard against.

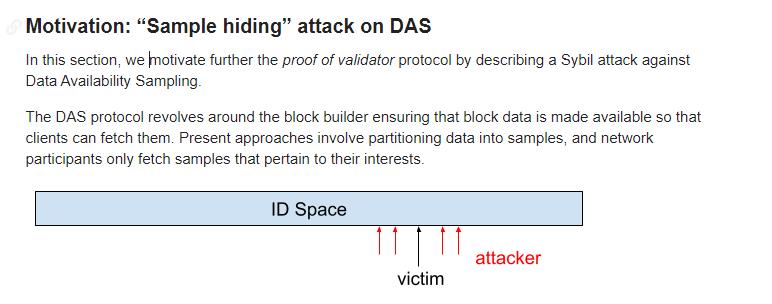

Pain Point of DHT: Sybil Attack

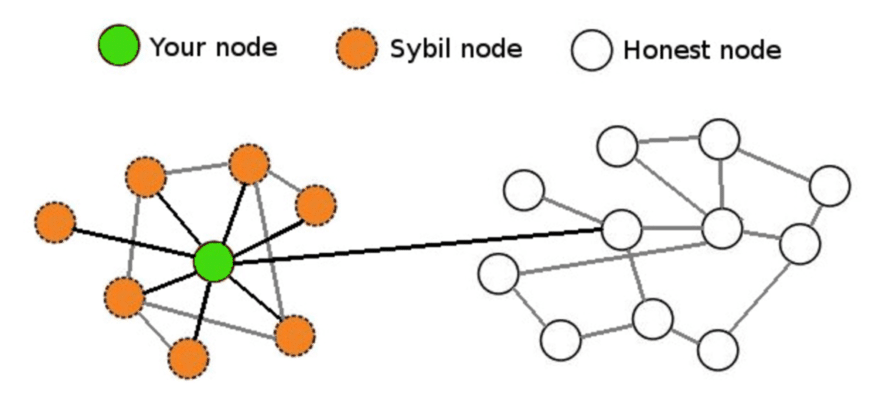

However, DHT also has a fatal weakness, which is the threat of Sybil attacks. Attackers can create a large number of fake nodes in the network, overwhelming the surrounding real nodes.

By analogy, an honest vendor surrounded by rows and rows of counterfeit stalls becomes hard for customers to find. In the same way, attackers can take control of parts of the DHT network and render the samples unavailable.

For example, to obtain a sample for address 1000, one needs to find the node responsible for that address. However, when thousands of fake nodes created by attackers surround it, the requests will be continuously redirected to fake nodes, preventing them from reaching the actual node responsible for that address. As a result, the sample cannot be obtained, and both storage and verification fail.
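The eclipse effect described above can be reproduced with a toy lookup. The sketch assumes Kademlia-style routing, where a lookup for an address ends at the k node IDs closest to it by XOR distance; it collapses the iterative lookup into a single sort, and the small integer IDs, the value of k and the node layout are all hypothetical (real node IDs are 256-bit hashes). Because creating a node ID costs the attacker nothing, it can simply mint IDs right next to the target address.

```python
def xor_distance(a: int, b: int) -> int:
    """Kademlia-style distance between two IDs."""
    return a ^ b

def closest_nodes(node_ids, target: int, k: int):
    """Return the k node IDs closest to `target`; a lookup for `target` ends at these."""
    return sorted(node_ids, key=lambda n: xor_distance(n, target))[:k]

# Hypothetical network: 20 honest nodes with IDs spread across the address space.
honest = [37 + 100 * i for i in range(20)]

# The attacker mints 8 fake IDs that sit right next to target address 1000.
sybils = list(range(1000, 1008))

before = closest_nodes(honest, 1000, k=8)
after = closest_nodes(honest + sybils, 1000, k=8)
print(sum(n in honest for n in before), sum(n in honest for n in after))  # 8 0
```

Before the attack, all eight nodes a lookup reaches are honest; afterwards, none are, so every request for address 1000 is answered (or ignored) by the attacker. Requiring each ID to be backed by a staked validator is what makes minting those eight IDs expensive.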

To solve this problem, a highly trusted network layer needs to be established on the DHT, involving only validator nodes. However, the DHT network itself cannot identify whether a node is a validator.

This severely hinders the scalability of DAS and Ethereum. Is there any way to resist this threat and ensure the trustworthiness of the network?

Proof of Validator: ZK Scheme Safeguarding Scalability Security

Now, let’s get back to the main point of this article: Proof of Validator.

In the Ethereum technical forum, George Kadianakis, Mary Maller, Andrija Novakovic, and Suphanat Chunhapanya jointly proposed this scheme today.

The overall idea is that if only validators are allowed to join the DHT used in the scaling scheme described in the previous section, then a malicious actor attempting a Sybil attack must stake a significant amount of ETH (32 ETH per validator) for every identity it creates, greatly raising the cost of the attack.

In other words, the idea can be summarized as: I want to know that you are a legitimate participant without learning who you are, while still being able to keep out anyone who is not.

In a scenario like this, where something must be proved while revealing as little information as possible, zero-knowledge proofs are a natural fit.

Therefore, Proof of Validator (PoV) can be used to establish a highly trusted DHT network composed only of validator nodes, effectively resisting Sybil attacks.

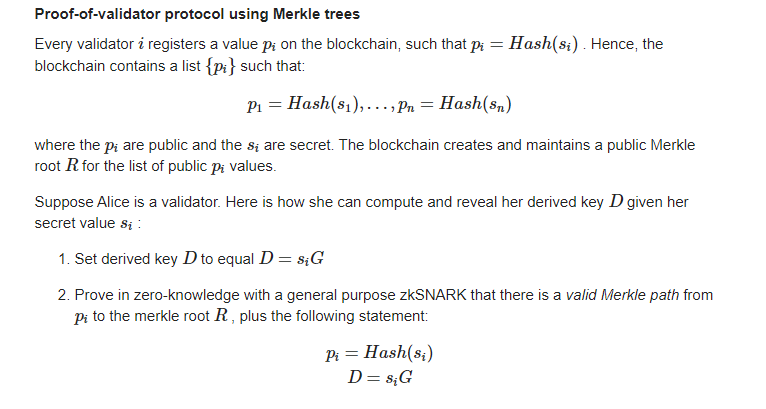

The basic idea is that each validator has a public key registered on the blockchain and uses zero-knowledge proof technology to prove that it knows the private key corresponding to one of these registered public keys. This is like presenting an identification card to prove that you are a validator node.
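The "prove you know the private key without revealing it" step can be illustrated with the classic Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. This is a swapped-in textbook technique, not the construction from the PoV post, and the group parameters are toy values chosen only so the arithmetic is easy to follow: P = 2039 = 2 · 1019 + 1 is a safe prime and G = 4 generates the subgroup of order Q = 1019. They are far too small to be secure. The function names and the `b"dht-node-id"` message are hypothetical. Note that this proof is tied to one known public key, so on its own it does not hide which validator is proving; hiding that is the job of the membership proof the post builds on top.

```python
import hashlib
import secrets

# Toy group parameters (INSECURE, illustration only): P = 2*Q + 1 is a safe prime,
# and G = 4 generates the subgroup of prime order Q.
P, Q, G = 2039, 1019, 4

def challenge(public_key: int, commitment: int, message: bytes) -> int:
    """Fiat-Shamir: derive the verifier's challenge from a hash instead of interaction."""
    data = f"{public_key}|{commitment}|".encode() + message
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def keygen():
    sk = secrets.randbelow(Q - 1) + 1  # private key in [1, Q-1]
    return sk, pow(G, sk, P)           # public key = G^sk mod P

def prove(sk: int, pk: int, message: bytes):
    r = secrets.randbelow(Q - 1) + 1   # fresh random nonce
    t = pow(G, r, P)                   # commitment
    c = challenge(pk, t, message)
    s = (r + c * sk) % Q               # response; the random r masks sk
    return t, s

def verify(pk: int, message: bytes, proof) -> bool:
    t, s = proof
    c = challenge(pk, t, message)
    # G^s = G^(r + c*sk) = t * pk^c holds only if the prover knew sk.
    return pow(G, s, P) == (t * pow(pk, c, P)) % P

sk, pk = keygen()
proof = prove(sk, pk, b"dht-node-id")
print(verify(pk, b"dht-node-id", proof))  # True
```

Binding the proof to a message such as the node's network ID means a proof captured from one DHT node cannot simply be replayed by another.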

In addition, to protect validator nodes against DoS (Denial of Service) attacks, PoV also aims to hide validators' identities at the network layer. In other words, the protocol should prevent attackers from telling which DHT node corresponds to which validator.

So how exactly is this done? The original post used a lot of mathematical formulas and derivations, which will not be repeated here. Instead, we will provide a simplified version:

In terms of implementation, the post considers Merkle trees or lookup tables. With a Merkle tree, for example, a validator proves that its registered public key is a leaf of the Merkle tree built over the list of validator public keys, and then proves that the network-communication public key it uses was correctly derived from that key. The whole process is carried out with zero-knowledge proofs, so the validator's actual identity is never revealed.
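Leaving the zero-knowledge layer aside, the Merkle-tree part of this idea is an ordinary membership proof. The sketch below builds a SHA-256 Merkle tree over a hypothetical list of registered public keys and checks that one key belongs to it. It is deliberately not zero-knowledge: the verifier sees the leaf and the path, which reveals exactly which validator is proving. In PoV, a check of this kind would run inside a ZK proof so that the leaf and path stay hidden. The hash function, the rule of pairing the last node with itself on odd levels, the lack of leaf/node domain separation and all function names are simplifying assumptions, not the post's construction.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def build_levels(leaves):
    """Return every level of the tree, from hashed leaves up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # odd level: pair the last node with itself
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels

def merkle_root(leaves) -> bytes:
    return build_levels(leaves)[-1][0]

def merkle_proof(leaves, index: int):
    """Sibling hashes from leaf to root, each tagged with whether our node is the left child."""
    proof = []
    for level in build_levels(leaves)[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # last node on an odd level was paired with itself
        proof.append((level[sibling], index % 2 == 0))
        index //= 2
    return proof

def verify_membership(leaf: bytes, proof, root: bytes) -> bool:
    """Recompute the path from the leaf; membership holds if we arrive at the root."""
    node = h(leaf)
    for sibling, is_left in proof:
        node = h(node + sibling) if is_left else h(sibling + node)
    return node == root

# Hypothetical registered validator keys (placeholders, not real BLS keys).
registered = [b"validator-pubkey-%d" % i for i in range(5)]
root = merkle_root(registered)
print(verify_membership(registered[3], merkle_proof(registered, 3), root))  # True
```

Only the root needs to be public; a proof consists of one sibling hash per tree level, so its size grows logarithmically with the number of validators.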

Skipping these technical details, the ultimate effect achieved by PoV is:

Only nodes that have passed identity verification can join the DHT network, greatly enhancing its security and effectively resisting Sybil attacks, preventing samples from being deliberately hidden or modified. PoV provides a reliable underlying network for DAS and indirectly helps Ethereum achieve rapid scalability.

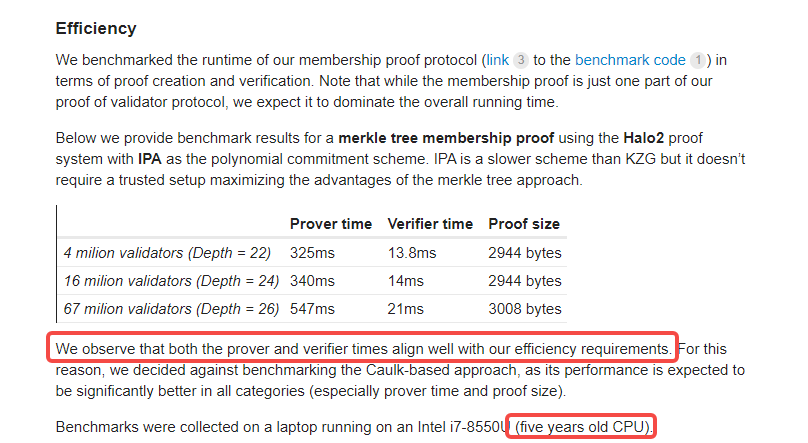

However, PoV is still in the theoretical research stage, and whether it can be implemented remains uncertain.

Nevertheless, the authors of the post have run small-scale experiments, and the results show that PoV performs well both in how quickly a ZK proof can be produced and in how quickly validators can process the proofs they receive. Notably, their test machine was just a laptop with a five-year-old Intel i7 processor.

Finally, regardless of whether PoV is ultimately adopted, it represents an important step toward greater blockchain scalability. As a potential building block in Ethereum's scaling roadmap, it deserves continued attention from the entire industry.

PoV original post link: [link]

We will continue to update Blocking; if you have any questions or suggestions, please contact us!
